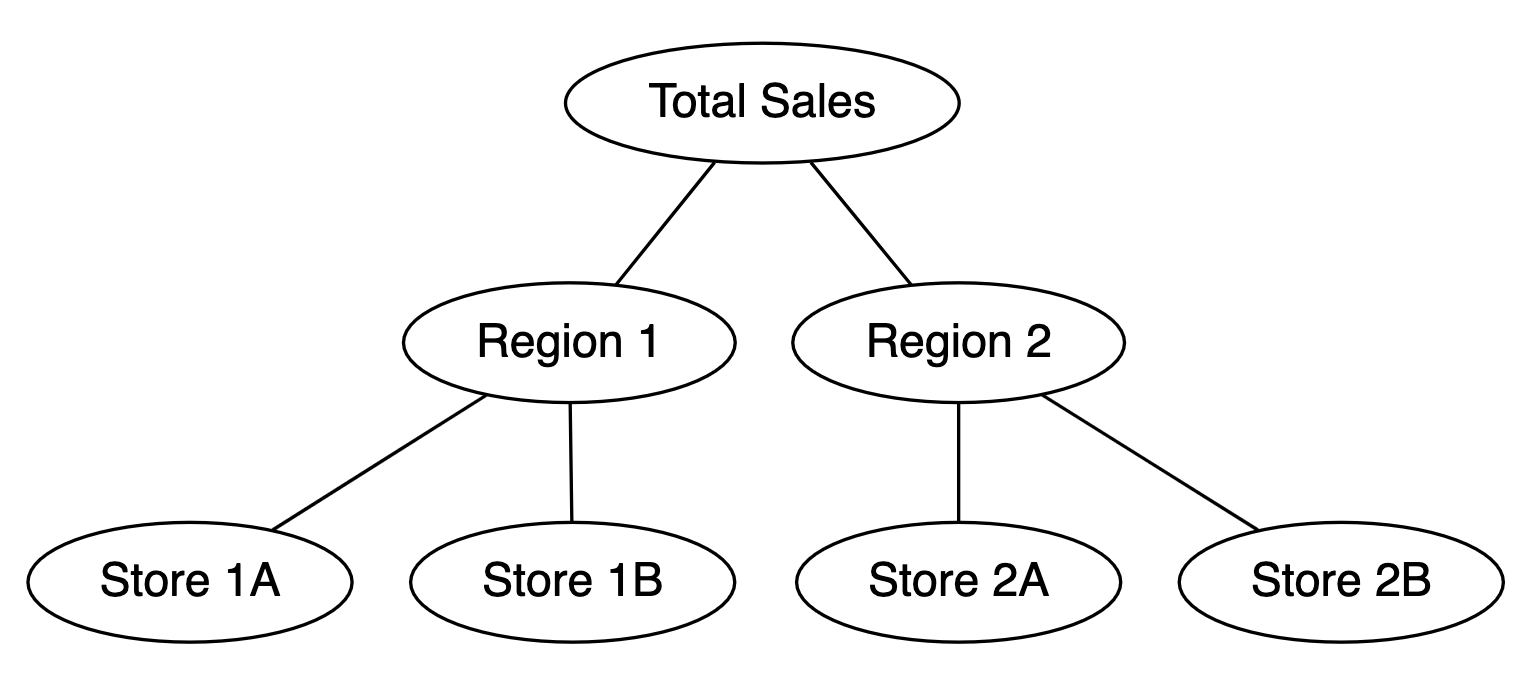

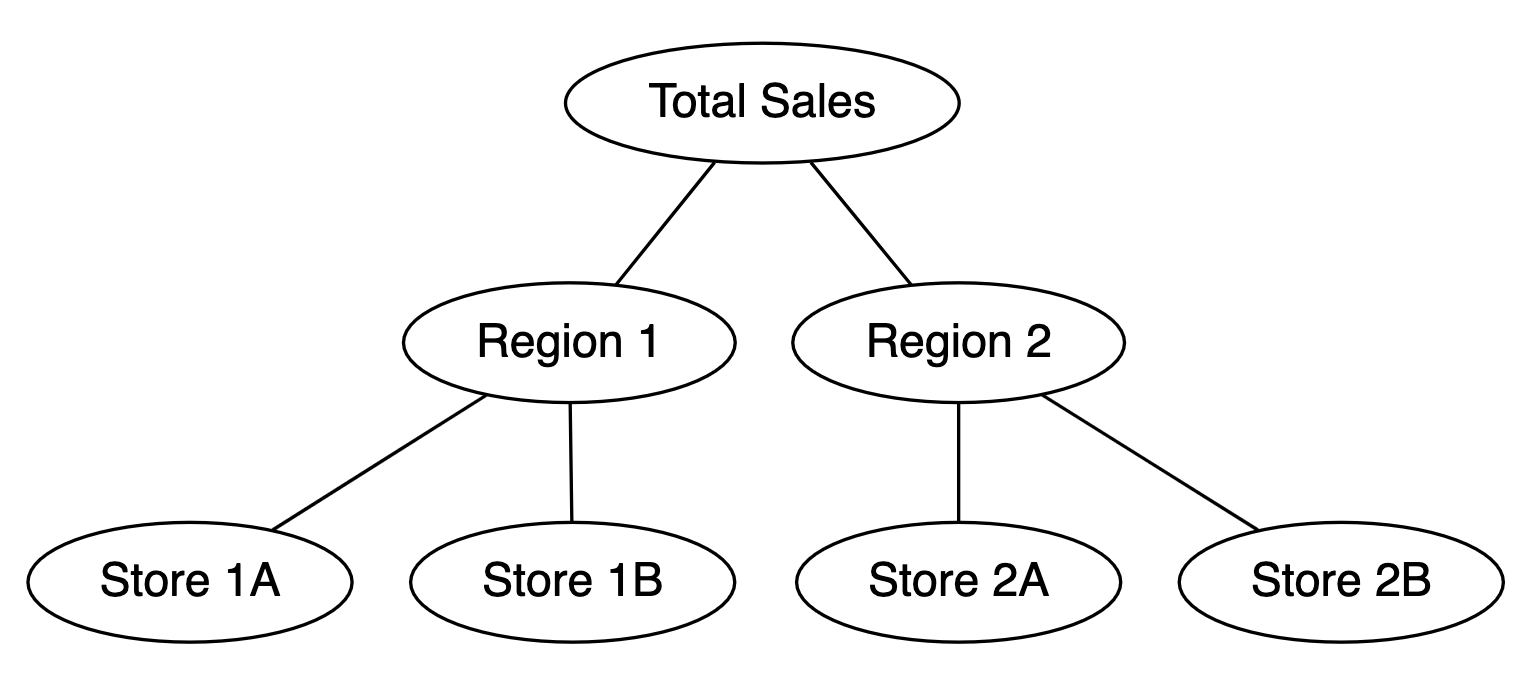

layout: true

---
class: title-slide
background-image: url("figs/titlebg.png")
background-position: 100% 50%
background-size: 100% 100%

.content-box-green-trans[
.pull-left-1[  ]
.pull-right-2[
# Forecast combinations:
## Modern perspectives and approaches
### Yanfei Kang
]]

---
class: center, hide-slide-number
background-image: url(figs/retail.jpeg)
background-size: cover

# Retail

---
class: center, hide-slide-number
background-image: url("figs/smart-meter.jpeg")
background-size: cover

# Smart meter

---
class: inverse, left, middle

*"If we know that learning algorithm `\(A\)` is superior to `\(B\)` averaged over some set of targets `\(F\)`, then the No Free Lunch theorems tell us that `\(B\)` must be superior to `\(A\)` if one averages over all targets not in `\(F\)`. This is true even if algorithm `\(B\)` is the algorithm of purely random guessing."*

.left[-- Wolpert (1996)]

---
class: inverse, left, middle

*“The No Free Lunch Theorem argues that, without having substantive information about the modeling problem, there is no single model that will always do better than any other model.”*

.left[-- Kuhn and Johnson (2013)]

---
# Algorithm selection problem

- Using measurable features of the problem instances to **predict which algorithm is likely to perform best**.
- Applied to, e.g., classification, regression, constraint satisfaction, forecasting and optimization (Smith-Miles, 2009).

---
# Forecasting

- One automatic approach is statistical model selection, e.g., via information criteria or cross-validation.
- Combining the forecasts across multiple models is often a better approach than identifying a single “best forecast”.

---
# Perspectives of combination

1. Combining **multiple forecasts** derived from different methods for a given time series.
2. Combining the base forecasts of each series in a **hierarchy**.
3. Combining forecasts computed from different perspectives of the **same data**.
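
As a minimal base-R sketch (not code from this deck), the two automatic strategies on the Forecasting slide can be compared on one series: keep the candidate model with the smallest AIC, or average the forecasts of all candidates, which is Perspective 1 in its simplest form. The `AirPassengers` data, the log transform and the three candidate ARIMA orders are illustrative assumptions.

```r
# Sketch: AIC-based model selection versus an equal-weight combination.
# Assumptions: log(AirPassengers) as example data, a 1949-1958 training
# window, and three hand-picked ARIMA candidates sharing a seasonal MA(1).
y <- log(AirPassengers)
train <- window(y, end = c(1958, 12))
test <- window(y, start = c(1959, 1))
h <- length(test)  # 24 monthly forecasts

# Candidate models: non-seasonal orders on top of a seasonal (0,1,1)_12 part
orders <- list(c(1, 1, 0), c(0, 1, 1), c(1, 1, 1))
fits <- lapply(orders, function(o)
  arima(train, order = o,
        seasonal = list(order = c(0, 1, 1), period = 12)))

# h-step forecasts from every candidate, stored as an h x M matrix
f_all <- sapply(fits, function(fit) predict(fit, n.ahead = h)$pred)

# Strategy 1: model selection, keep only the candidate with the smallest AIC
best <- which.min(sapply(fits, AIC))
f_select <- f_all[, best]

# Strategy 2: simple combination, equal weights 1/M on every candidate
f_comb <- rowMeans(f_all)

# Out-of-sample RMSE (on the log scale) of both strategies
rmse <- function(f) sqrt(mean((as.numeric(test) - f)^2))
c(selected = rmse(f_select), combined = rmse(f_comb))
```

Which strategy scores better varies from series to series, so a single run like this says little by itself; the claim that combining often helps rests on evidence across many series.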
---
# Perspective 1

.content-box-green[Combining multiple methods (point forecasts)]

<img src="figure/unnamed-chunk-2-1.svg" width="864" style="display: block; margin: auto;" />

---
# Perspective 1

.content-box-green[Combining multiple methods (point forecasts)]

Suppose we have `\(M\)` forecasting models. `\(\mathbf{f}_h\)` denotes the `\(M\)`-vector of `\(h\)`-step-ahead forecasts, `\(\mathbf{\Sigma}_h\)` is the `\(M \times M\)` covariance matrix of the `\(h\)`-step forecast errors, and `\(\mathbf{1}\)` is an `\(M\)`-vector of ones.

- Simple combination: `\(f_{ch} = M^{-1}\mathbf{1}^\prime\mathbf{f}_h\)`.
- Linear combination: `\(f_{ch} = \mathbf{w}_h^\prime\mathbf{f}_h\)`.
- Optimal weights: `\(\mathbf{w}_h = \frac{\mathbf{\Sigma}_h^{-1}\mathbf{1}}{\mathbf{1}^\prime\mathbf{\Sigma}_h^{-1}\mathbf{1}}\)` (Bates and Granger, 1969).

---
# Proportion of papers on forecast combination in Web of Science

<center>
<img src="figs/prop.png" height="450px"/>
</center>

---
# Perspective 1

.content-box-green[Combining multiple methods (point forecasts)]

- Regression-based weights: regress past observations on past individual forecasts.
- Performance-based weights: more weight on methods with better historical performance.
- Criteria-based weights: more weight on methods with lower AIC values.
- More variations, including Bayesian weights, nonlinear combinations, combining by learning, etc.
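
A minimal base-R sketch of the weighting schemes above, assuming a matrix of past `\(h\)`-step forecast errors is available (simulated here purely for illustration). Akaike weights, `\(\exp(-\Delta_i/2) / \sum_j \exp(-\Delta_j/2)\)` with `\(\Delta_i\)` the AIC difference from the best model, are used as one common criteria-based scheme; the AIC values and the forecast vector are made up.

```r
# Sketch of combination weights. Assumption: `errors` is a T x M matrix of
# past h-step forecast errors (rows = forecast origins, columns = methods);
# it is simulated here for illustration only.
set.seed(42)
M <- 3
errors <- cbind(rnorm(100, sd = 1), rnorm(100, sd = 2), rnorm(100, sd = 3))

# Simple combination: w = (1/M, ..., 1/M)
w_simple <- rep(1 / M, M)

# Optimal weights: Sigma^{-1} 1 / (1' Sigma^{-1} 1), Sigma estimated by cov()
Sigma <- cov(errors)
Sinv_1 <- solve(Sigma, rep(1, M))  # Sigma^{-1} 1 without forming the inverse
w_opt <- Sinv_1 / sum(Sinv_1)      # sum(Sinv_1) equals 1' Sigma^{-1} 1

# Performance-based: inverse-MSE weights (ignores error correlations)
mse <- colMeans(errors^2)
w_inv_mse <- (1 / mse) / sum(1 / mse)

# Criteria-based: Akaike weights from hypothetical AIC values of the M models
aic <- c(250.1, 252.4, 260.0)
w_aic <- exp(-(aic - min(aic)) / 2)
w_aic <- w_aic / sum(w_aic)

# Combine a made-up vector of h-step forecasts: f_ch = w' f_h
f_h <- c(10.2, 9.8, 11.0)
sapply(list(simple = w_simple, optimal = w_opt,
            inv_mse = w_inv_mse, aic = w_aic),
       function(w) sum(w * f_h))
```

The estimated "optimal" weights can turn negative when errors are strongly correlated and are sensitive to estimation error in `Sigma`, which is one reason simpler schemes remain competitive in practice.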
---
# Perspective 1

.content-box-green[Combining multiple methods (probabilistic forecasts)]

<img src="figure/ensembles-1.svg" width="864" style="display: block; margin: auto;" />

---
# Perspective 1

.content-box-green[Combining multiple methods (probabilistic forecasts)]

<img src="figure/unnamed-chunk-3-1.svg" width="864" style="display: block; margin: auto;" />

---
# Perspective 1

.content-box-green[Combining multiple methods (probabilistic forecasts)]

<img src="figure/unnamed-chunk-4-1.svg" width="864" style="display: block; margin: auto;" />

---
# Perspective 1

.content-box-green[Combining multiple methods (probabilistic forecasts)]

<img src="figure/unnamed-chunk-5-1.svg" width="864" style="display: block; margin: auto;" />

---
# Perspective 1

Combining probabilistic forecasts: mix the forecast distributions from multiple models (**linear pooling**).

<img src="figure/unnamed-chunk-6-1.svg" width="864" style="display: block; margin: auto;" />

---
# Perspective 1

### Linear pooling: a finite mixture

- Forecasts are obtained from a weighted mixture distribution of the component forecasts.
- Equivalent to a weighted average of the distribution functions
  `$$F_{ch}(y) = \sum_{i = 1}^M w_iF_{ih}(y),$$`
  where `\(F_{ih}(y)\)` is the `\(h\)`-step forecast distribution for the `\(i\)`th method, and the weights satisfy `\(w_i \ge 0\)` and `\(\sum_{i=1}^M w_i = 1\)`.
- Works best when individual models are over-confident and use different data sources.
- Allows us to accommodate skewness and kurtosis (fat tails), as well as multi-modality.

---
# Perspective 1

### Linear pooling: a finite mixture

<img src="figure/unnamed-chunk-7-1.svg" width="864" style="display: block; margin: auto;" />

---
# Perspective 1

.content-box-green[Combining multiple methods (probabilistic forecasts)]

- Weights from historical performance, e.g., measured by logarithmic scores.
- Optimizing the logarithmic score of the combined probabilistic forecast.
- Similarly, optimizing other scoring rules also works (e.g., Brier score, CRPS).
- Bayesian model averaging.
- Quantile forecast combinations.

---
# Perspective 2

.content-box-green[Hierarchical forecasting]

.pull-left[  ]
.pull-right[
- Multivariate forecasting problem.
- Variables satisfy linear constraints.
- Forecast reconciliation: combining forecasts.
]

---
# Perspective 3

.content-box-green[Wisdom of the data]

.pull-left[
<img src="figure/unnamed-chunk-8-1.svg" width="576" style="display: block; margin: auto;" />
]

--

.pull-right[
<img src="figure/unnamed-chunk-9-1.svg" width="576" style="display: block; margin: auto;" />
]

---
# Modern perspectives

- Combining multiple methods: feature-based forecasting.
- Hierarchical forecasting: optimal reconciliation with immutable forecasts.
- Wisdom of the data: improving forecasting by subsampling seasonal time series.

---
background-image: url(figs/generation.jpg)
background-size: cover

.pull-middle[
.content-box-green-trans[
.large.white[Feature-based forecasting]
]]

---
# Feature-based forecasting

- One way to forecast: manually inspect the time series `\(\rightarrow\)` understand its characteristics `\(\rightarrow\)` manually select an appropriate method according to the forecaster’s experience.
- Not scalable for **large collections of series**.
- **An automatic framework** is needed!
- Pioneering studies: rule-based methods (e.g., Collopy and Armstrong, 1992; Wang, Smith-Miles, and Hyndman, 2009).
- More recently: **feature-based forecasting** via meta-learning.
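
The pipeline on the Feature-based forecasting slide (extract features, learn which method works best, apply that mapping to new series) can be illustrated with a deliberately small base-R toy. Everything specific here is an assumption for illustration: two features, two candidate methods (naive and mean), simulated AR(1) training series, and logistic regression as the meta-learner. The work cited in this deck uses far richer feature sets, larger method pools and other learners.

```r
# Toy sketch of feature-based model selection via meta-learning (base R).
# Assumptions: features = lag-1 autocorrelation and trend t-statistic;
# methods = naive vs mean; simulated AR(1) series; logistic meta-learner.
set.seed(2024)

features <- function(x) {
  tt <- seq_along(x)
  c(acf1  = acf(x, lag.max = 1, plot = FALSE)$acf[2],
    trend = summary(lm(x ~ tt))$coefficients[2, 3])
}

# Label: 1 if the naive method has lower MAE than the mean method
# on the last h observations, else 0
label_naive_wins <- function(x, h = 6) {
  n <- length(x)
  train <- x[1:(n - h)]
  test <- x[(n - h + 1):n]
  mae_naive <- mean(abs(test - train[length(train)]))
  mae_mean <- mean(abs(test - mean(train)))
  as.integer(mae_naive < mae_mean)
}

# 1. Training set: diverse series -> features + label of the better method
sim_series <- function(n = 60) {
  as.numeric(arima.sim(list(ar = runif(1, -0.5, 0.95)), n = n))
}
train_set <- replicate(300, sim_series(), simplify = FALSE)
meta <- as.data.frame(t(sapply(train_set, features)))
meta$naive_wins <- sapply(train_set, label_naive_wins)

# 2. Meta-learner: map features to the probability that naive wins
meta_fit <- glm(naive_wins ~ acf1 + trend, data = meta, family = binomial)

# 3. New series: compute its features and pick a method
new_x <- sim_series()
new_feat <- as.data.frame(t(features(new_x)))
p_naive <- predict(meta_fit, newdata = new_feat, type = "response")
chosen <- if (p_naive > 0.5) "naive" else "mean"
chosen
```

Adding features, candidate methods and a multi-class (or weight-producing) learner keeps the same three steps; only the feature function, the labels and the meta-learner change.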
---
.pull-left[
### Raw data

<img src="figure/unnamed-chunk-10-1.svg" width="504" style="display: block; margin: auto;" />
]
.pull-right[
### Feature representation

<img src="figure/unnamed-chunk-11-1.svg" width="504" style="display: block; margin: auto;" />
]

---
.pull-left[
### Raw data

<img src="figure/unnamed-chunk-12-1.svg" width="504" style="display: block; margin: auto;" />
]
.pull-right[
### Feature representation

<img src="figure/unnamed-chunk-13-1.svg" width="504" style="display: block; margin: auto;" />
]

---
# More features

.content-box-gray[
### STL decomposition based features

By STL, `\(x_t = S_t + T_t + R_t\)`, where `\(S_t\)`, `\(T_t\)` and `\(R_t\)` are the seasonal, trend and remainder components.

1. Strength of trend: `\(F_1 = 1- \frac{\text{var}(R_t)}{\text{var}(x_t - S_t)}.\)`
2. Strength of seasonality: `\(F_2 = 1- \frac{\text{var}(R_t)}{\text{var}(x_t - T_t)}.\)`
]

.content-box-green[
### More available at

- [`tsfeatures`](https://cran.r-project.org/web/packages/tsfeatures/index.html) (Hyndman, Kang, Montero-Manso, Talagala, Wang, Yang, and O'Hara-Wild, 2020)
- [`feasts`](https://feasts.tidyverts.org/) (O'Hara-Wild, Hyndman, and Wang, 2021)
]

---
.pull-left[
### Raw data

<img src="figure/unnamed-chunk-14-1.svg" width="504" style="display: block; margin: auto;" />
]
.pull-right[
### Feature extraction

``` r
library(tsfeatures)
M3.selected %>%
  tsfeatures(c("frequency", "stl_features", "entropy", "acf_features",
               "pacf_features", "heterogeneity", "hw_parameters",
               "lumpiness", "stability", "max_level_shift",
               "max_var_shift", "unitroot_pp", "unitroot_kpss",
               "hurst", "crossing_points"))
#> # A tibble: 100 × 41
#>    frequency nperiods seasonal_period trend   spike linearity curvature  e_acf1 e_acf10 seasonal_strength  peak trough
#>        <dbl>    <dbl>           <dbl> <dbl>   <dbl>     <dbl>     <dbl>   <dbl>   <dbl>             <dbl> <dbl>  <dbl>
#>  1         4        1               4 0.999 1.68e-9      5.41      3.53  -0.113   0.130             0.258     2      3
#>  2         4        1               4 0.989 2.32e-7     -4.88     -1.23   0.201   0.756             0.134     3      1
#>  3         4        1               4 0.950 3.63e-6      3.08     -3.72  -0.135   0.366             0.569     1      4
#>  4         4        1               4 0.951 1.27e-6     -6.05      3.21  -0.418   0.557             0.712     1      4
#>  5         4        1               4 0.994 5.14e-8      6.14      1.86  -0.260   0.242            0.0800     4      2
#>  6         4        1               4 0.989 5.43e-7      3.92     -3.83  0.0405   0.298             0.466     1      3
#>  7         4        1               4 0.999 1.08e-9      6.39    -0.515   0.103  0.0955             0.229     4      2
#>  8         4        1               4 0.610 1.23e-6     0.815   -0.0690  -0.488   0.350             0.979     4      1
#>  9         4        1               4 0.966 7.81e-7      5.79      1.11  -0.117   0.357             0.671     1      4
#> 10         4        1               4 0.958 2.71e-6      6.13    -0.488  -0.131   0.293             0.297     2      4
#> # ℹ 90 more rows
#> # ℹ 29 more variables: entropy <dbl>, x_acf1 <dbl>, x_acf10 <dbl>, diff1_acf1 <dbl>, diff1_acf10 <dbl>,
#> #   diff2_acf1 <dbl>, diff2_acf10 <dbl>, seas_acf1 <dbl>, x_pacf5 <dbl>, diff1x_pacf5 <dbl>, diff2x_pacf5 <dbl>,
#> #   seas_pacf <dbl>, arch_acf <dbl>, garch_acf <dbl>, arch_r2 <dbl>, garch_r2 <dbl>, alpha <dbl>, beta <dbl>,
#> #   gamma <dbl>, lumpiness <dbl>, stability <dbl>, max_level_shift <dbl>, time_level_shift <dbl>, max_var_shift <dbl>,
#> #   time_var_shift <dbl>, unitroot_pp <dbl>, unitroot_kpss <dbl>, hurst <dbl>, crossing_points <dbl>
```
]

---
# PCA `\(\Rightarrow\)` 2D

<center>
<img src="figs/instancespace.png" height="550px"/>
</center>

---
# The framework for feature-based forecasting

<center>
<img src="figs/forecastdiag.png" height="550px"/>
</center>

---
# Challenge 1: Training data

.pull-left[
We need diverse training data.

<center>
<img src="figs/forbes.png" height="350px"/>
</center>
]

--

.pull-right[
Our work:

.tiny.content-box-gray[
- Yanfei Kang, Rob J Hyndman, Kate Smith-Miles (2017). Visualising Forecasting Algorithm Performance using Time Series Instance Space, *International Journal of Forecasting* 33(2): 345–358.
- Yanfei Kang, Rob J Hyndman, Feng Li (2020). GRATIS: GeneRAting TIme Series with diverse and controllable characteristics, *Statistical Analysis and Data Mining* 13(4): 354-376.]
]

---
# Challenge 2: Features

- Manual choice and estimation of features, which can number from tens to thousands (Fulcher and Jones, 2014).
- Extracted from historical data, which are bound to *change*.
- Not robust when historical data are limited.
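
Challenge 2 stresses that features are estimated from historical data. As a concrete case, the two STL-based features `\(F_1\)` and `\(F_2\)` from the More features slide can be computed with base R's `stl()`. This is a sketch under stated assumptions: `s.window = "periodic"` and the example series are illustrative choices, and the `max(0, .)` clipping is an addition not on the slide. The `trend` and `seasonal_strength` columns returned by `tsfeatures` come from that package's own routine, so values need not match exactly.

```r
# Sketch: strength of trend (F_1) and seasonality (F_2) from an STL fit.
# Assumptions: s.window = "periodic"; max(0, .) clipping is an added guard
# (not on the slide) against negative values on very noisy series.
stl_strength <- function(x) {
  dec <- stl(x, s.window = "periodic")
  S <- dec$time.series[, "seasonal"]
  Tr <- dec$time.series[, "trend"]
  R <- dec$time.series[, "remainder"]
  c(trend    = max(0, 1 - var(R) / var(x - S)),   # F_1: uses x_t - S_t
    seasonal = max(0, 1 - var(R) / var(x - Tr)))  # F_2: uses x_t - T_t
}

# Example on a monthly series with clear trend and seasonality
s <- stl_strength(log(AirPassengers))
s
```

Because both features are ratios of variances estimated from the observed history, they shift as new data arrive, which is exactly the instability noted above.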
--

.content-box-gray[
- Yanfei Kang, Wei Cao, Fotios Petropoulos, Feng Li (2021). Forecast with forecasts: Diversity matters, *European Journal of Operational Research* 301(1): 180-190.
- Xixi Li, Yanfei Kang, Feng Li (2020). Forecasting with time series imaging, *Expert Systems with Applications* 160: 113680.
]

---
# Other challenges

.content-box-green[Intermittent data]
.content-box-red[Uncertainty estimation]
.content-box-gray[Meta-learners]

--

.content-box-gray.tiny[
- Li Li, Yanfei Kang, Fotios Petropoulos, Feng Li (2022). Feature-based intermittent demand forecast combinations: bias, accuracy and inventory implications, *International Journal of Production Research* 61(22): 7557-7572.
- Evangelos Theodorou, Shengjie Wang, Yanfei Kang, et al. (2021). Exploring the representativeness of the M5 competition data, *International Journal of Forecasting* 38(4): 1500-1506.
- Xiaoqian Wang, Yanfei Kang, Fotios Petropoulos, Feng Li (2021). The uncertainty estimation of feature-based forecast combinations, *Journal of the Operational Research Society* 73(5): 979-993.
- Li Li, Yanfei Kang, Feng Li (2022). Bayesian forecast combination using time-varying features, *International Journal of Forecasting* 39(3): 1187-1302.
- Thiyanga S. Talagala, Feng Li, Yanfei Kang (2021). FFORMPP: Feature-based forecast model performance prediction, *International Journal of Forecasting* 38(3): 920-943.
- Li Li, Feng Li, Yanfei Kang (2023). Forecasting Large Collections of Time Series: Feature-Based Methods, in *Forecasting with Artificial Intelligence: Theory and Applications*, pp. 251-276. Springer Nature Switzerland, Cham.
]

---
background-image: url(figs/generation.jpg)
background-size: cover

.pull-middle[
.content-box-green-trans[
.large.white[Feature-based forecasting]
.white.font110[*Training data generation*]
]]

---
# Gaussian Mixture Autoregressive (MAR) models

.content-box-gray[
### `\(\text{MAR}(K;p_1, \cdots, p_K)\)` model

`\(x_t=\phi_{k0}+\phi_{k1}x_{t-1}+\cdots+\phi_{kp_k}x_{t-p_k}+\epsilon_t, \epsilon_t \sim N(0, \sigma_k^2)\)` <br> with probability `\(\alpha_k\)`, where `\(\sum_{k=1}^K \alpha_k= 1\)`.
]

.content-box-green[
### Merits of MAR models ✨

- Consist of multiple stationary or non-stationary autoregressive components.
- Able to capture many (or any) time series features, e.g., non-stationarity, nonlinearity, non-Gaussianity, cycles and heteroskedasticity.
]

---
class: split-two

# What do they look like?

.pull-left[
### Yearly

``` r
*library(gratis)
library(feasts)
set.seed(1)
*mar_model(seasonal_periods=1) %>%
  generate(length=30, nseries=3) %>%
  autoplot(value) + theme(legend.position="none")
```
]
.pull-right[
<img src="figure/unnamed-chunk-16-1.svg" width="504" style="display: block; margin: auto;" />
]

---
class: split-two

# What do they look like?

.pull-left[
### Quarterly

``` r
library(gratis)
library(feasts)
set.seed(2)
*mar_model(seasonal_periods=4) %>%
  generate(length=40, nseries=3) %>%
  autoplot(value) + theme(legend.position="none")
```
]
.pull-right[
<img src="figure/unnamed-chunk-17-1.svg" width="504" style="display: block; margin: auto;" />
]

---
class: split-two

# What do they look like?
.pull-left[
### Monthly

``` r
library(gratis)
library(feasts)
set.seed(123)
*mar_model(seasonal_periods=12) %>%
  generate(length=120, nseries=3) %>%
  autoplot(value) + theme(legend.position="none")
```
]
.pull-right[
<img src="figure/unnamed-chunk-18-1.svg" width="504" style="display: block; margin: auto;" />
]

---
# Visualisation in 2D space

<center>
<img src="figs/coverage.png" height="550px"/>
</center>

---
background-image: url(figs/generation.jpg)
background-size: cover

.pull-middle[
.content-box-green-trans[
.large.white[Feature-based forecasting]
.white.font110[*Automatic feature extraction*]
]]

---
# How to use diversity to forecast?

- Input: the forecasts from a pool of models.
- Measure their diversity, a **feature** that has been identified as a decisive factor in forecast combination (Thomson, Pollock, Onkal, and Gonul, 2019; Lichtendahl and Winkler, 2020).
- Through meta-learning, we link the diversity of the forecasts with their out-of-sample performance to fit combination models based on diversity.

---
# Diversity matters?
.pull-left[ <img src="figure/unnamed-chunk-20-1.svg" width="576" style="display: block; margin: auto;" /> ] .pull-right[ ``` #> # A tibble: 9 × 2 #> Method MASE #> <chr> <dbl> #> 1 nnetar_forec 0.351 #> 2 auto_arima_forec 0.363 #> 3 tbats_forec 0.408 #> 4 snaive_forec 0.412 #> 5 ets_forec 0.435 #> 6 naive_forec 0.53 #> 7 rw_drift_forec 0.603 #> 8 thetaf_forec 0.744 #> 9 stlm_ar_forec 0.822 ``` ] --- # Combining two methods .pull-left[ <div class="plotly html-widget html-fill-item" id="htmlwidget-1f7a2c27f26023b73143" style="width:504px;height:504px;"></div> <script type="application/json" data-for="htmlwidget-1f7a2c27f26023b73143">{"x":{"data":[{"x":[0.0089999999999999993,0,0.0070000000000000001,0.13600000000000001,0.0080000000000000002,0.019,0.0030000000000000001,0.010999999999999999,0.0089999999999999993,0.001,0.13900000000000001,0.012999999999999999,0.021000000000000001,0.01,0.0080000000000000002,0.0070000000000000001,0.13300000000000001,0.0080000000000000002,0.02,0.0040000000000000001,0.010999999999999999,0.13600000000000001,0.010999999999999999,0.02,0.0080000000000000002,0.0080000000000000002,0.20399999999999999,0.249,0.17899999999999999,0.129,0.002,0.001,0.02,0.0060000000000000001,0.031,0.016],"y":[0.38200000000000001,0.35699999999999998,0.38100000000000001,0.35399999999999998,0.48199999999999998,0.55100000000000005,0.44500000000000001,0.36299999999999999,0.376,0.41999999999999998,0.435,0.503,0.57299999999999995,0.46700000000000003,0.41399999999999998,0.375,0.35799999999999998,0.47499999999999998,0.54500000000000004,0.439,0.35899999999999999,0.41899999999999998,0.495,0.56399999999999995,0.45800000000000002,0.40200000000000002,0.309,0.29399999999999998,0.32400000000000001,0.438,0.67200000000000004,0.56699999999999995,0.48099999999999998,0.63600000000000001,0.55100000000000005,0.44500000000000001],"text":["div: 0.009<br />mase: 0.382<br />colour: Other","div: 0.000<br />mase: 0.357<br />colour: Other","div: 0.007<br />mase: 0.381<br />colour: Other","div: 
0.136<br />mase: 0.354<br />colour: Other","div: 0.008<br />mase: 0.482<br />colour: Other","div: 0.019<br />mase: 0.551<br />colour: Other","div: 0.003<br />mase: 0.445<br />colour: Other","div: 0.011<br />mase: 0.363<br />colour: Other","div: 0.009<br />mase: 0.376<br />colour: Other","div: 0.001<br />mase: 0.420<br />colour: Other","div: 0.139<br />mase: 0.435<br />colour: Other","div: 0.013<br />mase: 0.503<br />colour: Other","div: 0.021<br />mase: 0.573<br />colour: Other","div: 0.010<br />mase: 0.467<br />colour: Other","div: 0.008<br />mase: 0.414<br />colour: Other","div: 0.007<br />mase: 0.375<br />colour: Other","div: 0.133<br />mase: 0.358<br />colour: Other","div: 0.008<br />mase: 0.475<br />colour: Other","div: 0.020<br />mase: 0.545<br />colour: Other","div: 0.004<br />mase: 0.439<br />colour: Other","div: 0.011<br />mase: 0.359<br />colour: Other","div: 0.136<br />mase: 0.419<br />colour: Other","div: 0.011<br />mase: 0.495<br />colour: Other","div: 0.020<br />mase: 0.564<br />colour: Other","div: 0.008<br />mase: 0.458<br />colour: Other","div: 0.008<br />mase: 0.402<br />colour: Other","div: 0.204<br />mase: 0.309<br />colour: Other","div: 0.249<br />mase: 0.294<br />colour: Other","div: 0.179<br />mase: 0.324<br />colour: Other","div: 0.129<br />mase: 0.438<br />colour: Other","div: 0.002<br />mase: 0.672<br />colour: Other","div: 0.001<br />mase: 0.567<br />colour: Other","div: 0.020<br />mase: 0.481<br />colour: Other","div: 0.006<br />mase: 0.636<br />colour: Other","div: 0.031<br />mase: 0.551<br />colour: Other","div: 0.016<br />mase: 0.445<br />colour: 
Other"],"type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(205,133,0,1)","opacity":1,"size":5.6692913385826778,"symbol":"circle","line":{"width":1.8897637795275593,"color":"rgba(205,133,0,1)"}},"hoveron":"points","name":"Other","legendgroup":"Other","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","frame":null},{"x":[0.0089999999999999993,0,0.0070000000000000001,0.13600000000000001,0.0080000000000000002,0.019,0.0030000000000000001,0.010999999999999999,0.0089999999999999993,0.001,0.13900000000000001,0.012999999999999999,0.021000000000000001,0.01,0.0080000000000000002,0.0070000000000000001,0.13300000000000001,0.0080000000000000002,0.02,0.0040000000000000001,0.010999999999999999,0.13600000000000001,0.010999999999999999,0.02,0.0080000000000000002,0.0080000000000000002,0.20399999999999999,0.249,0.17899999999999999,0.129,0.002,0.001,0.02,0.0060000000000000001,0.031,0.016],"y":[0.38200000000000001,0.35699999999999998,0.38100000000000001,0.35399999999999998,0.48199999999999998,0.55100000000000005,0.44500000000000001,0.36299999999999999,0.376,0.41999999999999998,0.435,0.503,0.57299999999999995,0.46700000000000003,0.41399999999999998,0.375,0.35799999999999998,0.47499999999999998,0.54500000000000004,0.439,0.35899999999999999,0.41899999999999998,0.495,0.56399999999999995,0.45800000000000002,0.40200000000000002,0.309,0.29399999999999998,0.32400000000000001,0.438,0.67200000000000004,0.56699999999999995,0.48099999999999998,0.63600000000000001,0.55100000000000005,0.44500000000000001],"text":["div: 0.009<br />mase: 0.382<br />colour: Other<br />auto_arima_forec + ets_forec","div: 0.000<br />mase: 0.357<br />colour: Other<br />auto_arima_forec + nnetar_forec","div: 0.007<br />mase: 0.381<br />colour: Other<br />auto_arima_forec + tbats_forec","div: 0.136<br />mase: 0.354<br />colour: Other<br />auto_arima_forec + stlm_ar_forec","div: 0.008<br />mase: 0.482<br />colour: Other<br />auto_arima_forec + rw_drift_forec","div: 0.019<br />mase: 
0.551<br />colour: Other<br />auto_arima_forec + thetaf_forec","div: 0.003<br />mase: 0.445<br />colour: Other<br />auto_arima_forec + naive_forec","div: 0.011<br />mase: 0.363<br />colour: Other<br />auto_arima_forec + snaive_forec","div: 0.009<br />mase: 0.376<br />colour: Other<br />ets_forec + nnetar_forec","div: 0.001<br />mase: 0.420<br />colour: Other<br />ets_forec + tbats_forec","div: 0.139<br />mase: 0.435<br />colour: Other<br />ets_forec + stlm_ar_forec","div: 0.013<br />mase: 0.503<br />colour: Other<br />ets_forec + rw_drift_forec","div: 0.021<br />mase: 0.573<br />colour: Other<br />ets_forec + thetaf_forec","div: 0.010<br />mase: 0.467<br />colour: Other<br />ets_forec + naive_forec","div: 0.008<br />mase: 0.414<br />colour: Other<br />ets_forec + snaive_forec","div: 0.007<br />mase: 0.375<br />colour: Other<br />nnetar_forec + tbats_forec","div: 0.133<br />mase: 0.358<br />colour: Other<br />nnetar_forec + stlm_ar_forec","div: 0.008<br />mase: 0.475<br />colour: Other<br />nnetar_forec + rw_drift_forec","div: 0.020<br />mase: 0.545<br />colour: Other<br />nnetar_forec + thetaf_forec","div: 0.004<br />mase: 0.439<br />colour: Other<br />nnetar_forec + naive_forec","div: 0.011<br />mase: 0.359<br />colour: Other<br />nnetar_forec + snaive_forec","div: 0.136<br />mase: 0.419<br />colour: Other<br />tbats_forec + stlm_ar_forec","div: 0.011<br />mase: 0.495<br />colour: Other<br />tbats_forec + rw_drift_forec","div: 0.020<br />mase: 0.564<br />colour: Other<br />tbats_forec + thetaf_forec","div: 0.008<br />mase: 0.458<br />colour: Other<br />tbats_forec + naive_forec","div: 0.008<br />mase: 0.402<br />colour: Other<br />tbats_forec + snaive_forec","div: 0.204<br />mase: 0.309<br />colour: Other<br />stlm_ar_forec + rw_drift_forec","div: 0.249<br />mase: 0.294<br />colour: Other<br />stlm_ar_forec + thetaf_forec","div: 0.179<br />mase: 0.324<br />colour: Other<br />stlm_ar_forec + naive_forec","div: 0.129<br />mase: 0.438<br />colour: Other<br 
/>stlm_ar_forec + snaive_forec","div: 0.002<br />mase: 0.672<br />colour: Other<br />rw_drift_forec + thetaf_forec","div: 0.001<br />mase: 0.567<br />colour: Other<br />rw_drift_forec + naive_forec","div: 0.020<br />mase: 0.481<br />colour: Other<br />rw_drift_forec + snaive_forec","div: 0.006<br />mase: 0.636<br />colour: Other<br />thetaf_forec + naive_forec","div: 0.031<br />mase: 0.551<br />colour: Other<br />thetaf_forec + snaive_forec","div: 0.016<br />mase: 0.445<br />colour: Other<br />naive_forec + snaive_forec"],"type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(205,133,0,1)","opacity":1,"size":5.6692913385826778,"symbol":"circle","line":{"width":1.8897637795275593,"color":"rgba(205,133,0,1)"}},"hoveron":"points","name":"Other","legendgroup":"Other","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","frame":null}],"layout":{"margin":{"t":23.305936073059364,"r":7.3059360730593621,"b":37.260273972602747,"l":43.105022831050235},"plot_bgcolor":"rgba(235,235,235,1)","paper_bgcolor":"rgba(255,255,255,1)","font":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724},"xaxis":{"domain":[0,1],"automargin":true,"type":"linear","autorange":false,"range":[-0.012450000000000001,0.26145000000000002],"tickmode":"array","ticktext":["0.00","0.05","0.10","0.15","0.20","0.25"],"tickvals":[0,0.050000000000000003,0.10000000000000001,0.15000000000000002,0.20000000000000001,0.25],"categoryorder":"array","categoryarray":["0.00","0.05","0.10","0.15","0.20","0.25"],"nticks":null,"ticks":"outside","tickcolor":"rgba(51,51,51,1)","ticklen":3.6529680365296811,"tickwidth":0.66417600664176002,"showticklabels":true,"tickfont":{"color":"rgba(77,77,77,1)","family":"","size":11.68949771689498},"tickangle":-0,"showline":false,"linecolor":null,"linewidth":0,"showgrid":true,"gridcolor":"rgba(255,255,255,1)","gridwidth":0.66417600664176002,"zeroline":false,"anchor":"y","title":{"text":"Diversity","font":{"color":"rgba(0,0,0,1)","family":"","size":
14.611872146118724}},"hoverformat":".2f"},"yaxis":{"domain":[0,1],"automargin":true,"type":"linear","autorange":false,"range":[0.27509999999999996,0.69090000000000007],"tickmode":"array","ticktext":["0.3","0.4","0.5","0.6"],"tickvals":[0.30000000000000004,0.40000000000000002,0.5,0.60000000000000009],"categoryorder":"array","categoryarray":["0.3","0.4","0.5","0.6"],"nticks":null,"ticks":"outside","tickcolor":"rgba(51,51,51,1)","ticklen":3.6529680365296811,"tickwidth":0.66417600664176002,"showticklabels":true,"tickfont":{"color":"rgba(77,77,77,1)","family":"","size":11.68949771689498},"tickangle":-0,"showline":false,"linecolor":null,"linewidth":0,"showgrid":true,"gridcolor":"rgba(255,255,255,1)","gridwidth":0.66417600664176002,"zeroline":false,"anchor":"x","title":{"text":"MASE","font":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724}},"hoverformat":".2f"},"shapes":[{"type":"rect","fillcolor":null,"line":{"color":null,"width":0,"linetype":[]},"yref":"paper","xref":"paper","x0":0,"x1":1,"y0":0,"y1":1}],"showlegend":true,"legend":{"bgcolor":"rgba(255,255,255,1)","bordercolor":"transparent","borderwidth":1.8897637795275593,"font":{"color":"rgba(0,0,0,1)","family":"","size":11.68949771689498},"title":{"text":"","font":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724}},"orientation":"h","y":-0.20000000000000001,"x":0.10000000000000001},"hovermode":"closest","barmode":"relative"},"config":{"doubleClick":"reset","modeBarButtonsToAdd":["hoverclosest","hovercompare"],"showSendToCloud":false},"source":"A","attrs":{"805843a8d402":{"x":{},"y":{},"colour":{},"type":"scatter"},"8058f6f202":{"x":{},"y":{},"colour":{},"text":{}}},"cur_data":"805843a8d402","visdat":{"805843a8d402":["function (y) ","x"],"8058f6f202":["function (y) 
","x"]},"highlight":{"on":"plotly_click","persistent":false,"dynamic":false,"selectize":false,"opacityDim":0.20000000000000001,"selected":{"opacity":1},"debounce":0},"shinyEvents":["plotly_hover","plotly_click","plotly_selected","plotly_relayout","plotly_brushed","plotly_brushing","plotly_clickannotation","plotly_doubleclick","plotly_deselect","plotly_afterplot","plotly_sunburstclick"],"base_url":"https://plot.ly"},"evals":[],"jsHooks":[]}</script> ] .pull-right[ ``` #> # A tibble: 9 × 2 #> Method MASE #> <chr> <dbl> #> 1 nnetar_forec 0.351 #> 2 auto_arima_forec 0.363 #> 3 tbats_forec 0.408 #> 4 snaive_forec 0.412 #> 5 ets_forec 0.435 #> 6 naive_forec 0.53 #> 7 rw_drift_forec 0.603 #> 8 thetaf_forec 0.744 #> 9 stlm_ar_forec 0.822 ``` ] --- # Combining two methods .pull-left[ <div class="plotly html-widget html-fill-item" id="htmlwidget-271bb754353678d6a3d1" style="width:504px;height:504px;"></div> <script type="application/json" data-for="htmlwidget-271bb754353678d6a3d1">{"x":{"data":[{"x":[0.0089999999999999993,0,0.0070000000000000001,0.13600000000000001,0.0080000000000000002,0.019,0.0030000000000000001,0.010999999999999999,0.0089999999999999993,0.001,0.13900000000000001,0.012999999999999999,0.021000000000000001,0.01,0.0080000000000000002,0.0070000000000000001,0.13300000000000001,0.0080000000000000002,0.02,0.0040000000000000001,0.010999999999999999,0.13600000000000001,0.010999999999999999,0.02,0.0080000000000000002,0.0080000000000000002,0.20399999999999999,0.249,0.17899999999999999,0.129,0.002,0.001,0.02,0.0060000000000000001,0.031,0.016],"y":[0.38200000000000001,0.35699999999999998,0.38100000000000001,0.35399999999999998,0.48199999999999998,0.55100000000000005,0.44500000000000001,0.36299999999999999,0.376,0.41999999999999998,0.435,0.503,0.57299999999999995,0.46700000000000003,0.41399999999999998,0.375,0.35799999999999998,0.47499999999999998,0.54500000000000004,0.439,0.35899999999999999,0.41899999999999998,0.495,0.56399999999999995,0.45800000000000002,0.40200000000
000002,0.309,0.29399999999999998,0.32400000000000001,0.438,0.67200000000000004,0.56699999999999995,0.48099999999999998,0.63600000000000001,0.55100000000000005,0.44500000000000001],"text":["div: 0.009<br />mase: 0.382<br />colour: Other","div: 0.000<br />mase: 0.357<br />colour: Other","div: 0.007<br />mase: 0.381<br />colour: Other","div: 0.136<br />mase: 0.354<br />colour: Other","div: 0.008<br />mase: 0.482<br />colour: Other","div: 0.019<br />mase: 0.551<br />colour: Other","div: 0.003<br />mase: 0.445<br />colour: Other","div: 0.011<br />mase: 0.363<br />colour: Other","div: 0.009<br />mase: 0.376<br />colour: Other","div: 0.001<br />mase: 0.420<br />colour: Other","div: 0.139<br />mase: 0.435<br />colour: Other","div: 0.013<br />mase: 0.503<br />colour: Other","div: 0.021<br />mase: 0.573<br />colour: Other","div: 0.010<br />mase: 0.467<br />colour: Other","div: 0.008<br />mase: 0.414<br />colour: Other","div: 0.007<br />mase: 0.375<br />colour: Other","div: 0.133<br />mase: 0.358<br />colour: Other","div: 0.008<br />mase: 0.475<br />colour: Other","div: 0.020<br />mase: 0.545<br />colour: Other","div: 0.004<br />mase: 0.439<br />colour: Other","div: 0.011<br />mase: 0.359<br />colour: Other","div: 0.136<br />mase: 0.419<br />colour: Other","div: 0.011<br />mase: 0.495<br />colour: Other","div: 0.020<br />mase: 0.564<br />colour: Other","div: 0.008<br />mase: 0.458<br />colour: Other","div: 0.008<br />mase: 0.402<br />colour: Other","div: 0.204<br />mase: 0.309<br />colour: Other","div: 0.249<br />mase: 0.294<br />colour: Other","div: 0.179<br />mase: 0.324<br />colour: Other","div: 0.129<br />mase: 0.438<br />colour: Other","div: 0.002<br />mase: 0.672<br />colour: Other","div: 0.001<br />mase: 0.567<br />colour: Other","div: 0.020<br />mase: 0.481<br />colour: Other","div: 0.006<br />mase: 0.636<br />colour: Other","div: 0.031<br />mase: 0.551<br />colour: Other","div: 0.016<br />mase: 0.445<br />colour: 
Other"],"type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(205,133,0,1)","opacity":1,"size":5.6692913385826778,"symbol":"circle","line":{"width":1.8897637795275593,"color":"rgba(205,133,0,1)"}},"hoveron":"points","name":"Other","legendgroup":"Other","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","frame":null},{"x":[0.0089999999999999993,0,0.0070000000000000001,0.13600000000000001,0.0080000000000000002,0.019,0.0030000000000000001,0.010999999999999999,0.0089999999999999993,0.001,0.13900000000000001,0.012999999999999999,0.021000000000000001,0.01,0.0080000000000000002,0.0070000000000000001,0.13300000000000001,0.0080000000000000002,0.02,0.0040000000000000001,0.010999999999999999,0.13600000000000001,0.010999999999999999,0.02,0.0080000000000000002,0.0080000000000000002,0.20399999999999999,0.249,0.17899999999999999,0.129,0.002,0.001,0.02,0.0060000000000000001,0.031,0.016],"y":[0.38200000000000001,0.35699999999999998,0.38100000000000001,0.35399999999999998,0.48199999999999998,0.55100000000000005,0.44500000000000001,0.36299999999999999,0.376,0.41999999999999998,0.435,0.503,0.57299999999999995,0.46700000000000003,0.41399999999999998,0.375,0.35799999999999998,0.47499999999999998,0.54500000000000004,0.439,0.35899999999999999,0.41899999999999998,0.495,0.56399999999999995,0.45800000000000002,0.40200000000000002,0.309,0.29399999999999998,0.32400000000000001,0.438,0.67200000000000004,0.56699999999999995,0.48099999999999998,0.63600000000000001,0.55100000000000005,0.44500000000000001],"text":["div: 0.009<br />mase: 0.382<br />colour: Other<br />auto_arima_forec + ets_forec","div: 0.000<br />mase: 0.357<br />colour: Other<br />auto_arima_forec + nnetar_forec","div: 0.007<br />mase: 0.381<br />colour: Other<br />auto_arima_forec + tbats_forec","div: 0.136<br />mase: 0.354<br />colour: Other<br />auto_arima_forec + stlm_ar_forec","div: 0.008<br />mase: 0.482<br />colour: Other<br />auto_arima_forec + rw_drift_forec","div: 0.019<br />mase: 
0.551<br />colour: Other<br />auto_arima_forec + thetaf_forec","div: 0.003<br />mase: 0.445<br />colour: Other<br />auto_arima_forec + naive_forec","div: 0.011<br />mase: 0.363<br />colour: Other<br />auto_arima_forec + snaive_forec","div: 0.009<br />mase: 0.376<br />colour: Other<br />ets_forec + nnetar_forec","div: 0.001<br />mase: 0.420<br />colour: Other<br />ets_forec + tbats_forec","div: 0.139<br />mase: 0.435<br />colour: Other<br />ets_forec + stlm_ar_forec","div: 0.013<br />mase: 0.503<br />colour: Other<br />ets_forec + rw_drift_forec","div: 0.021<br />mase: 0.573<br />colour: Other<br />ets_forec + thetaf_forec","div: 0.010<br />mase: 0.467<br />colour: Other<br />ets_forec + naive_forec","div: 0.008<br />mase: 0.414<br />colour: Other<br />ets_forec + snaive_forec","div: 0.007<br />mase: 0.375<br />colour: Other<br />nnetar_forec + tbats_forec","div: 0.133<br />mase: 0.358<br />colour: Other<br />nnetar_forec + stlm_ar_forec","div: 0.008<br />mase: 0.475<br />colour: Other<br />nnetar_forec + rw_drift_forec","div: 0.020<br />mase: 0.545<br />colour: Other<br />nnetar_forec + thetaf_forec","div: 0.004<br />mase: 0.439<br />colour: Other<br />nnetar_forec + naive_forec","div: 0.011<br />mase: 0.359<br />colour: Other<br />nnetar_forec + snaive_forec","div: 0.136<br />mase: 0.419<br />colour: Other<br />tbats_forec + stlm_ar_forec","div: 0.011<br />mase: 0.495<br />colour: Other<br />tbats_forec + rw_drift_forec","div: 0.020<br />mase: 0.564<br />colour: Other<br />tbats_forec + thetaf_forec","div: 0.008<br />mase: 0.458<br />colour: Other<br />tbats_forec + naive_forec","div: 0.008<br />mase: 0.402<br />colour: Other<br />tbats_forec + snaive_forec","div: 0.204<br />mase: 0.309<br />colour: Other<br />stlm_ar_forec + rw_drift_forec","div: 0.249<br />mase: 0.294<br />colour: Other<br />stlm_ar_forec + thetaf_forec","div: 0.179<br />mase: 0.324<br />colour: Other<br />stlm_ar_forec + naive_forec","div: 0.129<br />mase: 0.438<br />colour: Other<br 
/>stlm_ar_forec + snaive_forec","div: 0.002<br />mase: 0.672<br />colour: Other<br />rw_drift_forec + thetaf_forec","div: 0.001<br />mase: 0.567<br />colour: Other<br />rw_drift_forec + naive_forec","div: 0.020<br />mase: 0.481<br />colour: Other<br />rw_drift_forec + snaive_forec","div: 0.006<br />mase: 0.636<br />colour: Other<br />thetaf_forec + naive_forec","div: 0.031<br />mase: 0.551<br />colour: Other<br />thetaf_forec + snaive_forec","div: 0.016<br />mase: 0.445<br />colour: Other<br />naive_forec + snaive_forec"],"type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(205,133,0,1)","opacity":1,"size":5.6692913385826778,"symbol":"circle","line":{"width":1.8897637795275593,"color":"rgba(205,133,0,1)"}},"hoveron":"points","name":"Other","legendgroup":"Other","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","frame":null},{"x":[0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249],"y":[0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998],"text":"div[min_index]: 0.249<br />mase[min_index]: 0.294<br />colour: theta + 
stlm_ar","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(255,0,0,1)","opacity":1,"size":15.118110236220474,"symbol":"circle-open","line":{"width":1.8897637795275593,"color":"rgba(255,0,0,1)"}},"hoveron":"points","name":"theta + stlm_ar","legendgroup":"theta + stlm_ar","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","frame":null}],"layout":{"margin":{"t":23.305936073059364,"r":7.3059360730593621,"b":37.260273972602747,"l":43.105022831050235},"plot_bgcolor":"rgba(235,235,235,1)","paper_bgcolor":"rgba(255,255,255,1)","font":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724},"xaxis":{"domain":[0,1],"automargin":true,"type":"linear","autorange":false,"range":[-0.012450000000000001,0.26145000000000002],"tickmode":"array","ticktext":["0.00","0.05","0.10","0.15","0.20","0.25"],"tickvals":[0,0.050000000000000003,0.10000000000000001,0.15000000000000002,0.20000000000000001,0.25],"categoryorder":"array","categoryarray":["0.00","0.05","0.10","0.15","0.20","0.25"],"nticks":null,"ticks":"outside","tickcolor":"rgba(51,51,51,1)","ticklen":3.6529680365296811,"tickwidth":0.66417600664176002,"showticklabels":true,"tickfont":{"color":"rgba(77,77,77,1)","family":"","size":11.68949771689498},"tickangle":-0,"showline":false,"linecolor":null,"linewidth":0,"showgrid":true,"gridcolor":"rgba(255,255,255,1)","gridwidth":0.66417600664176002,"zeroline":false,"anchor":"y","title":{"text":"Diversity","font":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724}},"hoverformat":".2f"},"yaxis":{"domain":[0,1],"automargin":true,"type":"linear","autorange":false,"range":[0.27509999999999996,0.69090000000000007],"tickmode":"array","ticktext":["0.3","0.4","0.5","0.6"],"tickvals":[0.30000000000000004,0.40000000000000002,0.5,0.60000000000000009],"categoryorder":"array","categoryarray":["0.3","0.4","0.5","0.6"],"nticks":null,"ticks":"outside","tickcolor":"rgba(51,51,51,1)","ticklen":3.6529680365296811,"tickwidth":0.66417600664176002,"showtic
klabels":true,"tickfont":{"color":"rgba(77,77,77,1)","family":"","size":11.68949771689498},"tickangle":-0,"showline":false,"linecolor":null,"linewidth":0,"showgrid":true,"gridcolor":"rgba(255,255,255,1)","gridwidth":0.66417600664176002,"zeroline":false,"anchor":"x","title":{"text":"MASE","font":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724}},"hoverformat":".2f"},"shapes":[{"type":"rect","fillcolor":null,"line":{"color":null,"width":0,"linetype":[]},"yref":"paper","xref":"paper","x0":0,"x1":1,"y0":0,"y1":1}],"showlegend":true,"legend":{"bgcolor":"rgba(255,255,255,1)","bordercolor":"transparent","borderwidth":1.8897637795275593,"font":{"color":"rgba(0,0,0,1)","family":"","size":11.68949771689498},"title":{"text":"","font":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724}},"orientation":"h","y":-0.20000000000000001,"x":0.10000000000000001},"hovermode":"closest","barmode":"relative"},"config":{"doubleClick":"reset","modeBarButtonsToAdd":["hoverclosest","hovercompare"],"showSendToCloud":false},"source":"A","attrs":{"80587b9d7449":{"x":{},"y":{},"colour":{},"type":"scatter"},"80581e3da406":{"x":{},"y":{},"colour":{},"text":{}},"805860db956c":{"x":{},"y":{},"colour":{}}},"cur_data":"80587b9d7449","visdat":{"80587b9d7449":["function (y) ","x"],"80581e3da406":["function (y) ","x"],"805860db956c":["function (y) ","x"]},"highlight":{"on":"plotly_click","persistent":false,"dynamic":false,"selectize":false,"opacityDim":0.20000000000000001,"selected":{"opacity":1},"debounce":0},"shinyEvents":["plotly_hover","plotly_click","plotly_selected","plotly_relayout","plotly_brushed","plotly_brushing","plotly_clickannotation","plotly_doubleclick","plotly_deselect","plotly_afterplot","plotly_sunburstclick"],"base_url":"https://plot.ly"},"evals":[],"jsHooks":[]}</script> ] .pull-right[ ``` #> # A tibble: 9 × 2 #> Method MASE #> <chr> <dbl> #> 1 nnetar_forec 0.351 #> 2 auto_arima_forec 0.363 #> 3 tbats_forec 0.408 #> 4 snaive_forec 0.412 #> 5 ets_forec 0.435 #> 
6 naive_forec 0.53 #> 7 rw_drift_forec 0.603 #> 8 thetaf_forec 0.744 #> 9 stlm_ar_forec 0.822 ``` ] --- # Combining two methods .pull-left[ <div class="plotly html-widget html-fill-item" id="htmlwidget-2d9b5e0b0ad71d8afdd7" style="width:504px;height:504px;"></div> <script type="application/json" data-for="htmlwidget-2d9b5e0b0ad71d8afdd7">{"x":{"data":[{"x":[0.0089999999999999993,0,0.0070000000000000001,0.13600000000000001,0.0080000000000000002,0.019,0.0030000000000000001,0.010999999999999999,0.0089999999999999993,0.001,0.13900000000000001,0.012999999999999999,0.021000000000000001,0.01,0.0080000000000000002,0.0070000000000000001,0.13300000000000001,0.0080000000000000002,0.02,0.0040000000000000001,0.010999999999999999,0.13600000000000001,0.010999999999999999,0.02,0.0080000000000000002,0.0080000000000000002,0.20399999999999999,0.249,0.17899999999999999,0.129,0.002,0.001,0.02,0.0060000000000000001,0.031,0.016],"y":[0.38200000000000001,0.35699999999999998,0.38100000000000001,0.35399999999999998,0.48199999999999998,0.55100000000000005,0.44500000000000001,0.36299999999999999,0.376,0.41999999999999998,0.435,0.503,0.57299999999999995,0.46700000000000003,0.41399999999999998,0.375,0.35799999999999998,0.47499999999999998,0.54500000000000004,0.439,0.35899999999999999,0.41899999999999998,0.495,0.56399999999999995,0.45800000000000002,0.40200000000000002,0.309,0.29399999999999998,0.32400000000000001,0.438,0.67200000000000004,0.56699999999999995,0.48099999999999998,0.63600000000000001,0.55100000000000005,0.44500000000000001],"text":["div: 0.009<br />mase: 0.382<br />colour: Other","div: 0.000<br />mase: 0.357<br />colour: Other","div: 0.007<br />mase: 0.381<br />colour: Other","div: 0.136<br />mase: 0.354<br />colour: Other","div: 0.008<br />mase: 0.482<br />colour: Other","div: 0.019<br />mase: 0.551<br />colour: Other","div: 0.003<br />mase: 0.445<br />colour: Other","div: 0.011<br />mase: 0.363<br />colour: Other","div: 0.009<br />mase: 0.376<br />colour: Other","div: 0.001<br 
/>mase: 0.420<br />colour: Other","div: 0.139<br />mase: 0.435<br />colour: Other","div: 0.013<br />mase: 0.503<br />colour: Other","div: 0.021<br />mase: 0.573<br />colour: Other","div: 0.010<br />mase: 0.467<br />colour: Other","div: 0.008<br />mase: 0.414<br />colour: Other","div: 0.007<br />mase: 0.375<br />colour: Other","div: 0.133<br />mase: 0.358<br />colour: Other","div: 0.008<br />mase: 0.475<br />colour: Other","div: 0.020<br />mase: 0.545<br />colour: Other","div: 0.004<br />mase: 0.439<br />colour: Other","div: 0.011<br />mase: 0.359<br />colour: Other","div: 0.136<br />mase: 0.419<br />colour: Other","div: 0.011<br />mase: 0.495<br />colour: Other","div: 0.020<br />mase: 0.564<br />colour: Other","div: 0.008<br />mase: 0.458<br />colour: Other","div: 0.008<br />mase: 0.402<br />colour: Other","div: 0.204<br />mase: 0.309<br />colour: Other","div: 0.249<br />mase: 0.294<br />colour: Other","div: 0.179<br />mase: 0.324<br />colour: Other","div: 0.129<br />mase: 0.438<br />colour: Other","div: 0.002<br />mase: 0.672<br />colour: Other","div: 0.001<br />mase: 0.567<br />colour: Other","div: 0.020<br />mase: 0.481<br />colour: Other","div: 0.006<br />mase: 0.636<br />colour: Other","div: 0.031<br />mase: 0.551<br />colour: Other","div: 0.016<br />mase: 0.445<br />colour: 
Other"],"type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(205,133,0,1)","opacity":1,"size":5.6692913385826778,"symbol":"circle","line":{"width":1.8897637795275593,"color":"rgba(205,133,0,1)"}},"hoveron":"points","name":"Other","legendgroup":"Other","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","frame":null},{"x":[0.0089999999999999993,0,0.0070000000000000001,0.13600000000000001,0.0080000000000000002,0.019,0.0030000000000000001,0.010999999999999999,0.0089999999999999993,0.001,0.13900000000000001,0.012999999999999999,0.021000000000000001,0.01,0.0080000000000000002,0.0070000000000000001,0.13300000000000001,0.0080000000000000002,0.02,0.0040000000000000001,0.010999999999999999,0.13600000000000001,0.010999999999999999,0.02,0.0080000000000000002,0.0080000000000000002,0.20399999999999999,0.249,0.17899999999999999,0.129,0.002,0.001,0.02,0.0060000000000000001,0.031,0.016],"y":[0.38200000000000001,0.35699999999999998,0.38100000000000001,0.35399999999999998,0.48199999999999998,0.55100000000000005,0.44500000000000001,0.36299999999999999,0.376,0.41999999999999998,0.435,0.503,0.57299999999999995,0.46700000000000003,0.41399999999999998,0.375,0.35799999999999998,0.47499999999999998,0.54500000000000004,0.439,0.35899999999999999,0.41899999999999998,0.495,0.56399999999999995,0.45800000000000002,0.40200000000000002,0.309,0.29399999999999998,0.32400000000000001,0.438,0.67200000000000004,0.56699999999999995,0.48099999999999998,0.63600000000000001,0.55100000000000005,0.44500000000000001],"text":["div: 0.009<br />mase: 0.382<br />colour: Other<br />auto_arima_forec + ets_forec","div: 0.000<br />mase: 0.357<br />colour: Other<br />auto_arima_forec + nnetar_forec","div: 0.007<br />mase: 0.381<br />colour: Other<br />auto_arima_forec + tbats_forec","div: 0.136<br />mase: 0.354<br />colour: Other<br />auto_arima_forec + stlm_ar_forec","div: 0.008<br />mase: 0.482<br />colour: Other<br />auto_arima_forec + rw_drift_forec","div: 0.019<br />mase: 
0.551<br />colour: Other<br />auto_arima_forec + thetaf_forec","div: 0.003<br />mase: 0.445<br />colour: Other<br />auto_arima_forec + naive_forec","div: 0.011<br />mase: 0.363<br />colour: Other<br />auto_arima_forec + snaive_forec","div: 0.009<br />mase: 0.376<br />colour: Other<br />ets_forec + nnetar_forec","div: 0.001<br />mase: 0.420<br />colour: Other<br />ets_forec + tbats_forec","div: 0.139<br />mase: 0.435<br />colour: Other<br />ets_forec + stlm_ar_forec","div: 0.013<br />mase: 0.503<br />colour: Other<br />ets_forec + rw_drift_forec","div: 0.021<br />mase: 0.573<br />colour: Other<br />ets_forec + thetaf_forec","div: 0.010<br />mase: 0.467<br />colour: Other<br />ets_forec + naive_forec","div: 0.008<br />mase: 0.414<br />colour: Other<br />ets_forec + snaive_forec","div: 0.007<br />mase: 0.375<br />colour: Other<br />nnetar_forec + tbats_forec","div: 0.133<br />mase: 0.358<br />colour: Other<br />nnetar_forec + stlm_ar_forec","div: 0.008<br />mase: 0.475<br />colour: Other<br />nnetar_forec + rw_drift_forec","div: 0.020<br />mase: 0.545<br />colour: Other<br />nnetar_forec + thetaf_forec","div: 0.004<br />mase: 0.439<br />colour: Other<br />nnetar_forec + naive_forec","div: 0.011<br />mase: 0.359<br />colour: Other<br />nnetar_forec + snaive_forec","div: 0.136<br />mase: 0.419<br />colour: Other<br />tbats_forec + stlm_ar_forec","div: 0.011<br />mase: 0.495<br />colour: Other<br />tbats_forec + rw_drift_forec","div: 0.020<br />mase: 0.564<br />colour: Other<br />tbats_forec + thetaf_forec","div: 0.008<br />mase: 0.458<br />colour: Other<br />tbats_forec + naive_forec","div: 0.008<br />mase: 0.402<br />colour: Other<br />tbats_forec + snaive_forec","div: 0.204<br />mase: 0.309<br />colour: Other<br />stlm_ar_forec + rw_drift_forec","div: 0.249<br />mase: 0.294<br />colour: Other<br />stlm_ar_forec + thetaf_forec","div: 0.179<br />mase: 0.324<br />colour: Other<br />stlm_ar_forec + naive_forec","div: 0.129<br />mase: 0.438<br />colour: Other<br 
/>stlm_ar_forec + snaive_forec","div: 0.002<br />mase: 0.672<br />colour: Other<br />rw_drift_forec + thetaf_forec","div: 0.001<br />mase: 0.567<br />colour: Other<br />rw_drift_forec + naive_forec","div: 0.020<br />mase: 0.481<br />colour: Other<br />rw_drift_forec + snaive_forec","div: 0.006<br />mase: 0.636<br />colour: Other<br />thetaf_forec + naive_forec","div: 0.031<br />mase: 0.551<br />colour: Other<br />thetaf_forec + snaive_forec","div: 0.016<br />mase: 0.445<br />colour: Other<br />naive_forec + snaive_forec"],"type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(205,133,0,1)","opacity":1,"size":5.6692913385826778,"symbol":"circle","line":{"width":1.8897637795275593,"color":"rgba(205,133,0,1)"}},"hoveron":"points","name":"Other","legendgroup":"Other","showlegend":false,"xaxis":"x","yaxis":"y","hoverinfo":"text","frame":null},{"x":[0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249,0.249],"y":[0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998,0.29399999999999998],"text":"div[min_index]: 0.249<br />mase[min_index]: 0.294<br />colour: theta + 
stlm_ar","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(255,0,0,1)","opacity":1,"size":15.118110236220474,"symbol":"circle-open","line":{"width":1.8897637795275593,"color":"rgba(255,0,0,1)"}},"hoveron":"points","name":"theta + stlm_ar","legendgroup":"theta + stlm_ar","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","frame":null},{"x":[0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0],"y":[0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998,0.35699999999999998],"text":"div[which(combn_names == twobest)]: 0<br />mase[which(combn_names == twobest)]: 0.357<br />colour: arima + nnetar","type":"scatter","mode":"markers","marker":{"autocolorscale":false,"color":"rgba(0,205,0,1)","opacity":1,"size":15.118110236220474,"symbol":"circle-open","line":{"width":1.8897637795275593,"color":"rgba(0,205,0,1)"}},"hoveron":"points","name":"arima + nnetar","legendgroup":"arima + 
nnetar","showlegend":true,"xaxis":"x","yaxis":"y","hoverinfo":"text","frame":null}],"layout":{"margin":{"t":23.305936073059364,"r":7.3059360730593621,"b":37.260273972602747,"l":43.105022831050235},"plot_bgcolor":"rgba(235,235,235,1)","paper_bgcolor":"rgba(255,255,255,1)","font":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724},"xaxis":{"domain":[0,1],"automargin":true,"type":"linear","autorange":false,"range":[-0.012450000000000001,0.26145000000000002],"tickmode":"array","ticktext":["0.00","0.05","0.10","0.15","0.20","0.25"],"tickvals":[0,0.050000000000000003,0.10000000000000001,0.15000000000000002,0.20000000000000001,0.25],"categoryorder":"array","categoryarray":["0.00","0.05","0.10","0.15","0.20","0.25"],"nticks":null,"ticks":"outside","tickcolor":"rgba(51,51,51,1)","ticklen":3.6529680365296811,"tickwidth":0.66417600664176002,"showticklabels":true,"tickfont":{"color":"rgba(77,77,77,1)","family":"","size":11.68949771689498},"tickangle":-0,"showline":false,"linecolor":null,"linewidth":0,"showgrid":true,"gridcolor":"rgba(255,255,255,1)","gridwidth":0.66417600664176002,"zeroline":false,"anchor":"y","title":{"text":"Diversity","font":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724}},"hoverformat":".2f"},"yaxis":{"domain":[0,1],"automargin":true,"type":"linear","autorange":false,"range":[0.27509999999999996,0.69090000000000007],"tickmode":"array","ticktext":["0.3","0.4","0.5","0.6"],"tickvals":[0.30000000000000004,0.40000000000000002,0.5,0.60000000000000009],"categoryorder":"array","categoryarray":["0.3","0.4","0.5","0.6"],"nticks":null,"ticks":"outside","tickcolor":"rgba(51,51,51,1)","ticklen":3.6529680365296811,"tickwidth":0.66417600664176002,"showticklabels":true,"tickfont":{"color":"rgba(77,77,77,1)","family":"","size":11.68949771689498},"tickangle":-0,"showline":false,"linecolor":null,"linewidth":0,"showgrid":true,"gridcolor":"rgba(255,255,255,1)","gridwidth":0.66417600664176002,"zeroline":false,"anchor":"x","title":{"text":"MASE","font
":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724}},"hoverformat":".2f"},"shapes":[{"type":"rect","fillcolor":null,"line":{"color":null,"width":0,"linetype":[]},"yref":"paper","xref":"paper","x0":0,"x1":1,"y0":0,"y1":1}],"showlegend":true,"legend":{"bgcolor":"rgba(255,255,255,1)","bordercolor":"transparent","borderwidth":1.8897637795275593,"font":{"color":"rgba(0,0,0,1)","family":"","size":11.68949771689498},"title":{"text":"","font":{"color":"rgba(0,0,0,1)","family":"","size":14.611872146118724}},"orientation":"h","y":-0.20000000000000001,"x":0.10000000000000001},"hovermode":"closest","barmode":"relative"},"config":{"doubleClick":"reset","modeBarButtonsToAdd":["hoverclosest","hovercompare"],"showSendToCloud":false},"source":"A","attrs":{"8058b860089":{"x":{},"y":{},"colour":{},"type":"scatter"},"8058a8d2848":{"x":{},"y":{},"colour":{},"text":{}},"8058394f9461":{"x":{},"y":{},"colour":{}},"8058179689ac":{"x":{},"y":{},"colour":{}},"80581c28874d":{"x":{},"y":{},"colour":{}}},"cur_data":"8058b860089","visdat":{"8058b860089":["function (y) ","x"],"8058a8d2848":["function (y) ","x"],"8058394f9461":["function (y) ","x"],"8058179689ac":["function (y) ","x"],"80581c28874d":["function (y) ","x"]},"highlight":{"on":"plotly_click","persistent":false,"dynamic":false,"selectize":false,"opacityDim":0.20000000000000001,"selected":{"opacity":1},"debounce":0},"shinyEvents":["plotly_hover","plotly_click","plotly_selected","plotly_relayout","plotly_brushed","plotly_brushing","plotly_clickannotation","plotly_doubleclick","plotly_deselect","plotly_afterplot","plotly_sunburstclick"],"base_url":"https://plot.ly"},"evals":[],"jsHooks":[]}</script> ] .pull-right[ ``` #> # A tibble: 9 × 2 #> Method MASE #> <chr> <dbl> #> 1 nnetar_forec 0.351 #> 2 auto_arima_forec 0.363 #> 3 tbats_forec 0.408 #> 4 snaive_forec 0.412 #> 5 ets_forec 0.435 #> 6 naive_forec 0.53 #> 7 rw_drift_forec 0.603 #> 8 thetaf_forec 0.744 #> 9 stlm_ar_forec 0.822 ``` ] --- # Yes, diversity matters! 
<img src="figure/unnamed-chunk-28-1.svg" width="864" style="display: block; margin: auto;" />

---

# Yes, diversity matters!

<img src="figure/unnamed-chunk-29-1.svg" width="864" style="display: block; margin: auto;" />

---

# Measuring diversity

.small[
$$
`\begin{aligned}
MSE_{comb} & = \frac{1}{H} \sum_{h=1}^{H}\left( \sum_{i=1}^{M}w_if_{ih} - y_{T+h}\right)^2 \\
& = \frac{1}{H}\sum_{h=1}^{H}\left[ \sum_{i=1}^{M}w_i(f_{ih} - y_{T+h})^2 - \sum_{i=1}^{M}w_i(f_{ih} - f_{ch})^2\right] \\
& = \frac{1}{H}\sum_{h=1}^{H}\left[\sum_{i=1}^{M}w_i(f_{ih} - y_{T+h})^2 - \sum_{i=1}^{M-1} \sum_{j=i+1}^{M}w_iw_j(f_{ih}-f_{jh})^2\right] \\
& = \sum_{i=1}^{M}w_i MSE_i - \sum_{i=1}^{M-1} \sum_{j=i+1}^{M}w_iw_jDiv_{i,j},
\end{aligned}`
$$

where `\(f_{ch} = \sum_{i=1}^{M}w_if_{ih}\)` is the combined forecast with weights summing to one, `\(MSE_i\)` is the mean squared error of method `\(i\)`, `\(H\)` is the forecasting horizon, `\(M\)` is the number of forecasting methods, and `\(T\)` is the length of the historical series.
]

---

# Measuring diversity

$$
`\begin{aligned}
Div_{i,j} & = \frac{1}{H} \sum_{h=1}^{H} (f_{ih}-f_{jh})^2, \\
sDiv_{i,j} & = \frac{\sum\limits_{h=1}^H(f_{ih}-f_{jh})^2}{\sum\limits_{k=1}^{M-1}\sum\limits_{l=k+1}^M\left[\sum\limits_{h=1}^H(f_{kh}-f_{lh})^2\right]}.
\end{aligned}`
$$

`\(sDiv_{i,j}\)` scales each pairwise diversity by its total over all method pairs, so the scaled diversities sum to one.

---

# Diversity for forecast combination

<img src="figs/diversity-extraction.jpg"/>

---

# Method pool

<center>
<img src="figs/methods.png" height="500px"/>
</center>

---

# Meta-learner

- Learning algorithm: XGBoost.
- The combination weights are obtained by solving the optimization problem
  `$$\text{argmin}_{w} \sum\limits_{n = 1}^N\sum_{i=1}^{M} w({Div_n})_i \times \text{Err}_{ni},$$`
  where `\(N\)` is the number of time series, `\(Div_n\)` denotes the forecast diversity of the `\(n\)`-th time series, `\(w({Div_n})_i\)` is the combination weight assigned to method `\(i\)` for the `\(n\)`-th series based on its diversity, and `\(\text{Err}_{ni}\)` is the error of method `\(i\)` on the `\(n\)`-th series.

---

# Forecasting M4

100,000 series with various frequencies.
<center>
<img src="figs/XGBoost.png" width="800px"/>
</center>

---

# Forecasting FMCG

Sales of fast-moving consumer goods (FMCG) from a major North American food manufacturer.

- Monthly data.
- April 2013 to June 2017.

<center>
<img src="figs/FMCG.jpg" width="800px"/>
</center>

---
background-image: url(figs/generation.jpg)
background-size: cover

.pull-middle[
.content-box-green-trans[
.large.white[Feature-based forecasting]

.white.font110[*Intermittent demand*]
]]

---

# Intermittent demand

<img src="figure/unnamed-chunk-30-1.svg" width="864" style="display: block; margin: auto;" />

- Several periods of zero demand.
- Ubiquitous in practice, e.g., retailing and aerospace.
- Two sources of uncertainty:
    - Sporadic demand occurrence.
    - Demand arrival timing.

---

# Intermittent demand: literature

- Parametric methods: Croston, SBA, TSB, etc.
- Non-parametric methods: bootstrapping, overlapping and non-overlapping aggregation, etc.
- Temporal aggregation: ADIDA, IMAPA, etc.
- Machine learning methods.

A more detailed review can be found in Petropoulos, Apiletti, Assimakopoulos, Babai, Barrow, Taieb, Bergmeir, Bessa, Bijak, Boylan, and others (2022).

---

# Forecast combination for intermittent demand

1. FIDE: Feature-based Intermittent DEmand forecasting.
2. DIVIDE: DIVersity-based Intermittent DEmand forecasting.

---

# Intermittent demand features

<center>
<img src="figs/features.png" width="900px"/>
</center>

---
class: split-50

# Forecasting method pool

.column[.content.vmiddle[
- Naive
- Seasonal Naive (sNaive)
- Simple Exponential Smoothing (SES)
- Moving Averages (MA)
- AutoRegressive Integrated Moving Average (ARIMA)
- ExponenTial Smoothing (ETS)
]]
.column[.content.vmiddle[
- Croston's method (CRO)
- Optimized Croston's method (optCro)
- Syntetos-Boylan Approximation (SBA)
- Teunter-Syntetos-Babai method (TSB)
- Aggregate-Disaggregate Intermittent Demand Approach (ADIDA)
- Intermittent Multiple Aggregation Prediction Algorithm (IMAPA)
]
]

---

# FIDE and DIVIDE framework

<center>
<img src="figs/framework.jpg" width="750px"/>
</center>

---

# Application to Royal Air Force (RAF) data
5,000 monthly time series with high intermittency.

<center>
<img src="figs/RAFresults.png" width="600px"/>
</center>

---
background-image: url(figs/generation.jpg)
background-size: cover

.pull-middle[
.content-box-green-trans[
.large.white[Hierarchical forecasting]
]]

---

# Hierarchical Time Series

.pull-left[

]

.pull-right[
- Base forecasts are available at all nodes. How can we make them coherent?
- Bottom-up ignores the base forecasts at the upper (aggregate) nodes.
- Top-down ignores the base forecasts at the bottom nodes.
]

---
background-image: url(figs/generation.jpg)
background-size: cover

.pull-middle[
.content-box-green-trans[
.large.white[Wisdom of the data]

.white.font110[*Subsampling seasonal time series*]
]]

---

# Framework

.pull-left[
<center>
<img src="figs/foss.png" height="500px"/>
</center>
]

.pull-right[
.content-box-gray.tiny[
- Xixi Li, Fotios Petropoulos, Yanfei Kang (2022). Improving forecasting by subsampling seasonal time series. *International Journal of Production Research* 61(3): 976-992.
- Yanfei Kang, Evangelos Spiliotis, Fotios Petropoulos, Nikolaos Athiniotis, Feng Li, Vassilios Assimakopoulos (2021). Déjà vu: A data-centric forecasting approach through time series cross-similarity. *Journal of Business Research* 132: 719-731.
]
]

---

# Conclusions

- Different perspectives on combining forecasts:
    - Combining forecasts from multiple models.
    - Forecast reconciliation.
    - Wisdom of the data.
- Future directions:
    - Selecting forecasts to be combined.
    - Advancing the theory of nonlinear combinations.
    - Focusing more on probabilistic forecast combinations.
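
---

# Checking the diversity decomposition

The decomposition on the *Measuring diversity* slides can be checked numerically. The sketch below is a minimal illustration under stated assumptions, not the code behind the talk: it assumes NumPy, stores the `\(M\)` forecasts as an `\(M \times H\)` array, and uses hypothetical function names (`mse_decomposition`, `scaled_diversity`) and simulated data. It computes `\(MSE_{comb}\)` directly and via `\(\sum_i w_i MSE_i - \sum_{i<j} w_iw_j Div_{i,j}\)`; the two agree only when the weights sum to one.

```python
import numpy as np


def mse_decomposition(forecasts, actuals, weights):
    """Return MSE_comb computed directly and via the diversity decomposition.

    forecasts: (M, H) array, row i holds f_{i1}, ..., f_{iH}
    actuals:   (H,) array of y_{T+1}, ..., y_{T+H}
    weights:   (M,) combination weights, required to sum to one
    """
    f = np.asarray(forecasts, dtype=float)
    y = np.asarray(actuals, dtype=float)
    w = np.asarray(weights, dtype=float)
    if not np.isclose(w.sum(), 1.0):
        raise ValueError("the decomposition requires weights summing to one")

    # Direct route: MSE of the combined forecast f_{ch} = sum_i w_i f_{ih}
    mse_comb = np.mean((w @ f - y) ** 2)

    # MSE_i per method and pairwise Div_{i,j} = (1/H) sum_h (f_{ih} - f_{jh})^2
    mse_i = np.mean((f - y) ** 2, axis=1)
    div = np.mean((f[:, None, :] - f[None, :, :]) ** 2, axis=2)

    # Sum over pairs i < j of w_i * w_j * Div_{i,j}
    upper = np.triu_indices(len(w), k=1)
    weighted_div = np.sum(np.outer(w, w)[upper] * div[upper])
    return mse_comb, w @ mse_i - weighted_div


def scaled_diversity(forecasts):
    """sDiv_{i,j}: pairwise sums of squared differences divided by their
    total over all pairs i < j (undefined if all forecasts coincide)."""
    f = np.asarray(forecasts, dtype=float)
    sq = np.sum((f[:, None, :] - f[None, :, :]) ** 2, axis=2)
    upper = np.triu_indices(f.shape[0], k=1)
    return sq / sq[upper].sum()


# Simulated example: three methods with increasing noise around the actuals
rng = np.random.default_rng(42)
y = rng.normal(size=12)
f = y + rng.normal(size=(3, 12)) * np.array([[0.5], [1.0], [1.5]])
w = np.array([0.5, 0.3, 0.2])

direct, decomposed = mse_decomposition(f, y, w)
print(np.isclose(direct, decomposed))  # True
print(scaled_diversity(f).round(3))
```

The weight check is deliberate: with weights that do not sum to one, the ambiguity term no longer reduces to the pairwise diversity sum, so the identity fails.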
--- class: center background-image: url(figs/review.jpg) background-size: cover --- class: center background-image: url(figs/ftp.jpg) background-size: cover --- class: center background-image: url(figs/ftp2.png) background-size: cover --- class: center background-image: url(figs/fppcn.png) background-size: cover --- background-image: url("assets/titlepage_buaa.png") background-position: 100% 50% background-size: 100% 100% .font300.bold[ **Thanks!** ] <br> <br> <br> .content-box-gray.font160[ <svg aria-hidden="true" role="img" viewBox="0 0 496 512" style="height:1em;width:0.97em;vertical-align:-0.125em;margin-left:auto;margin-right:auto;font-size:inherit;fill:#1da1f2;overflow:visible;position:relative;"><path d="M165.9 397.4c0 2-2.3 3.6-5.2 3.6-3.3.3-5.6-1.3-5.6-3.6 0-2 2.3-3.6 5.2-3.6 3-.3 5.6 1.3 5.6 3.6zm-31.1-4.5c-.7 2 1.3 4.3 4.3 4.9 2.6 1 5.6 0 6.2-2s-1.3-4.3-4.3-5.2c-2.6-.7-5.5.3-6.2 2.3zm44.2-1.7c-2.9.7-4.9 2.6-4.6 4.9.3 2 2.9 3.3 5.9 2.6 2.9-.7 4.9-2.6 4.6-4.6-.3-1.9-3-3.2-5.9-2.9zM244.8 8C106.1 8 0 113.3 0 252c0 110.9 69.8 205.8 169.5 239.2 12.8 2.3 17.3-5.6 17.3-12.1 0-6.2-.3-40.4-.3-61.4 0 0-70 15-84.7-29.8 0 0-11.4-29.1-27.8-36.6 0 0-22.9-15.7 1.6-15.4 0 0 24.9 2 38.6 25.8 21.9 38.6 58.6 27.5 72.9 20.9 2.3-16 8.8-27.1 16-33.7-55.9-6.2-112.3-14.3-112.3-110.5 0-27.5 7.6-41.3 23.6-58.9-2.6-6.5-11.1-33.3 2.6-67.9 20.9-6.5 69 27 69 27 20-5.6 41.5-8.5 62.8-8.5s42.8 2.9 62.8 8.5c0 0 48.1-33.6 69-27 13.7 34.7 5.2 61.4 2.6 67.9 16 17.7 25.8 31.5 25.8 58.9 0 96.5-58.9 104.2-114.8 110.5 9.2 7.9 17 22.9 17 46.4 0 33.7-.3 75.4-.3 83.6 0 6.5 4.6 14.4 17.3 12.1C428.2 457.8 496 362.9 496 252 496 113.3 383.5 8 244.8 8zM97.2 352.9c-1.3 1-1 3.3.7 5.2 1.6 1.6 3.9 2.3 5.2 1 1.3-1 1-3.3-.7-5.2-1.6-1.6-3.9-2.3-5.2-1zm-10.8-8.1c-.7 1.3.3 2.9 2.3 3.9 1.6 1 3.6.7 4.3-.7.7-1.3-.3-2.9-2.3-3.9-2-.6-3.6-.3-4.3.7zm32.4 35.6c-1.6 1.3-1 4.3 1.3 6.2 2.3 2.3 5.2 2.6 6.5 1 1.3-1.3.7-4.3-1.3-6.2-2.2-2.3-5.2-2.6-6.5-1zm-11.4-14.7c-1.6 1-1.6 3.6 0 5.9 1.6 2.3 4.3 3.3 5.6 2.3 1.6-1.3 1.6-3.9 
0-6.2-1.4-2.3-4-3.3-5.6-2z"/></svg> [github.com/kl-lab](https://github.com/kl-lab) <svg aria-hidden="true" role="img" viewBox="0 0 512 512" style="height:1em;width:1em;vertical-align:-0.125em;margin-left:auto;margin-right:auto;font-size:inherit;fill:#1da1f2;overflow:visible;position:relative;"><path d="M352 256c0 22.2-1.2 43.6-3.3 64H163.3c-2.2-20.4-3.3-41.8-3.3-64s1.2-43.6 3.3-64H348.7c2.2 20.4 3.3 41.8 3.3 64zm28.8-64H503.9c5.3 20.5 8.1 41.9 8.1 64s-2.8 43.5-8.1 64H380.8c2.1-20.6 3.2-42 3.2-64s-1.1-43.4-3.2-64zm112.6-32H376.7c-10-63.9-29.8-117.4-55.3-151.6c78.3 20.7 142 77.5 171.9 151.6zm-149.1 0H167.7c6.1-36.4 15.5-68.6 27-94.7c10.5-23.6 22.2-40.7 33.5-51.5C239.4 3.2 248.7 0 256 0s16.6 3.2 27.8 13.8c11.3 10.8 23 27.9 33.5 51.5c11.6 26 20.9 58.2 27 94.7zm-209 0H18.6C48.6 85.9 112.2 29.1 190.6 8.4C165.1 42.6 145.3 96.1 135.3 160zM8.1 192H131.2c-2.1 20.6-3.2 42-3.2 64s1.1 43.4 3.2 64H8.1C2.8 299.5 0 278.1 0 256s2.8-43.5 8.1-64zM194.7 446.6c-11.6-26-20.9-58.2-27-94.6H344.3c-6.1 36.4-15.5 68.6-27 94.6c-10.5 23.6-22.2 40.7-33.5 51.5C272.6 508.8 263.3 512 256 512s-16.6-3.2-27.8-13.8c-11.3-10.8-23-27.9-33.5-51.5zM135.3 352c10 63.9 29.8 117.4 55.3 151.6C112.2 482.9 48.6 426.1 18.6 352H135.3zm358.1 0c-30 74.1-93.6 130.9-171.9 151.6c25.5-34.2 45.2-87.7 55.3-151.6H493.4z"/></svg> [yanfei.site](https://yanfei.site) <svg aria-hidden="true" role="img" viewBox="0 0 640 512" style="height:1em;width:1.25em;vertical-align:-0.125em;margin-left:auto;margin-right:auto;font-size:inherit;fill:#1da1f2;overflow:visible;position:relative;"><path d="M72 88a56 56 0 1 1 112 0A56 56 0 1 1 72 88zM64 245.7C54 256.9 48 271.8 48 288s6 31.1 16 42.3V245.7zm144.4-49.3C178.7 222.7 160 261.2 160 304c0 34.3 12 65.8 32 90.5V416c0 17.7-14.3 32-32 32H96c-17.7 0-32-14.3-32-32V389.2C26.2 371.2 0 332.7 0 288c0-61.9 50.1-112 112-112h32c24 0 46.2 7.5 64.4 20.3zM448 416V394.5c20-24.7 32-56.2 32-90.5c0-42.8-18.7-81.3-48.4-107.7C449.8 183.5 472 176 496 176h32c61.9 0 112 50.1 112 112c0 44.7-26.2 83.2-64 
101.2V416c0 17.7-14.3 32-32 32H480c-17.7 0-32-14.3-32-32zm8-328a56 56 0 1 1 112 0A56 56 0 1 1 456 88zM576 245.7v84.7c10-11.3 16-26.1 16-42.3s-6-31.1-16-42.3zM320 32a64 64 0 1 1 0 128 64 64 0 1 1 0-128zM240 304c0 16.2 6 31 16 42.3V261.7c-10 11.3-16 26.1-16 42.3zm144-42.3v84.7c10-11.3 16-26.1 16-42.3s-6-31.1-16-42.3zM448 304c0 44.7-26.2 83.2-64 101.2V448c0 17.7-14.3 32-32 32H288c-17.7 0-32-14.3-32-32V405.2c-37.8-18-64-56.5-64-101.2c0-61.9 50.1-112 112-112h32c61.9 0 112 50.1 112 112z"/></svg> [kllab.org](https://kllab.org) ]