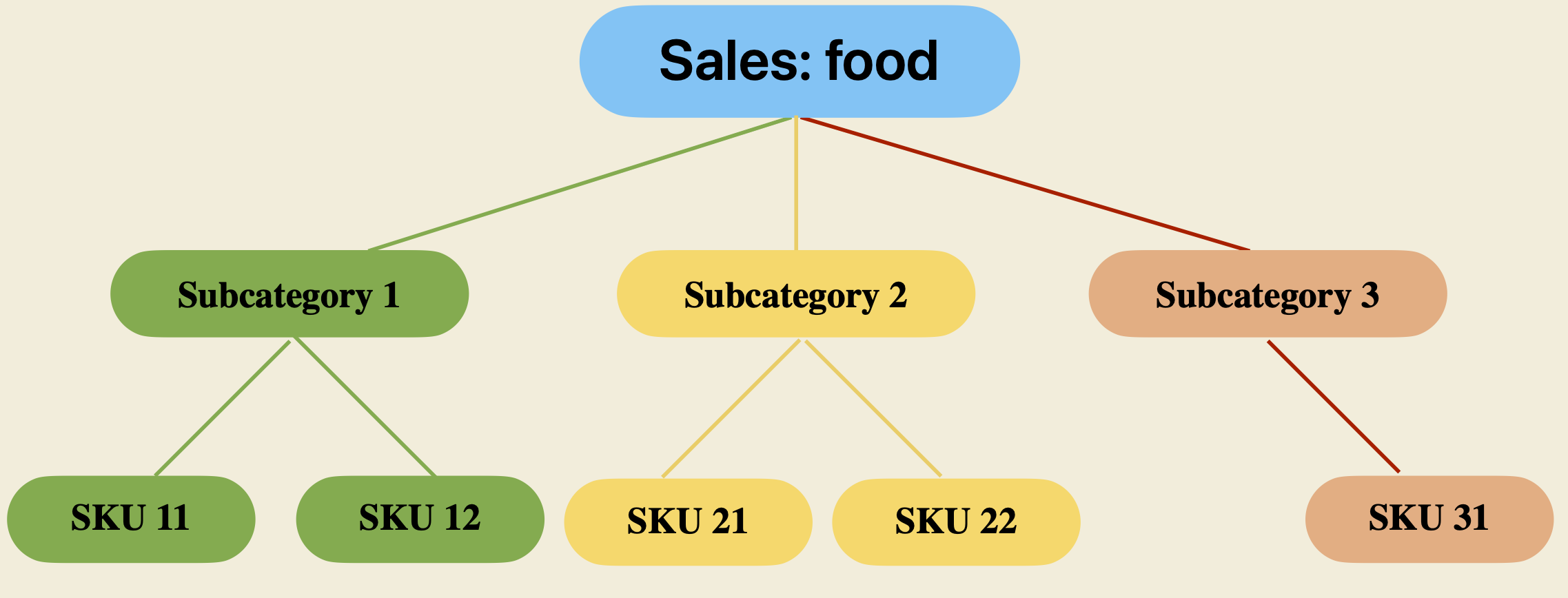

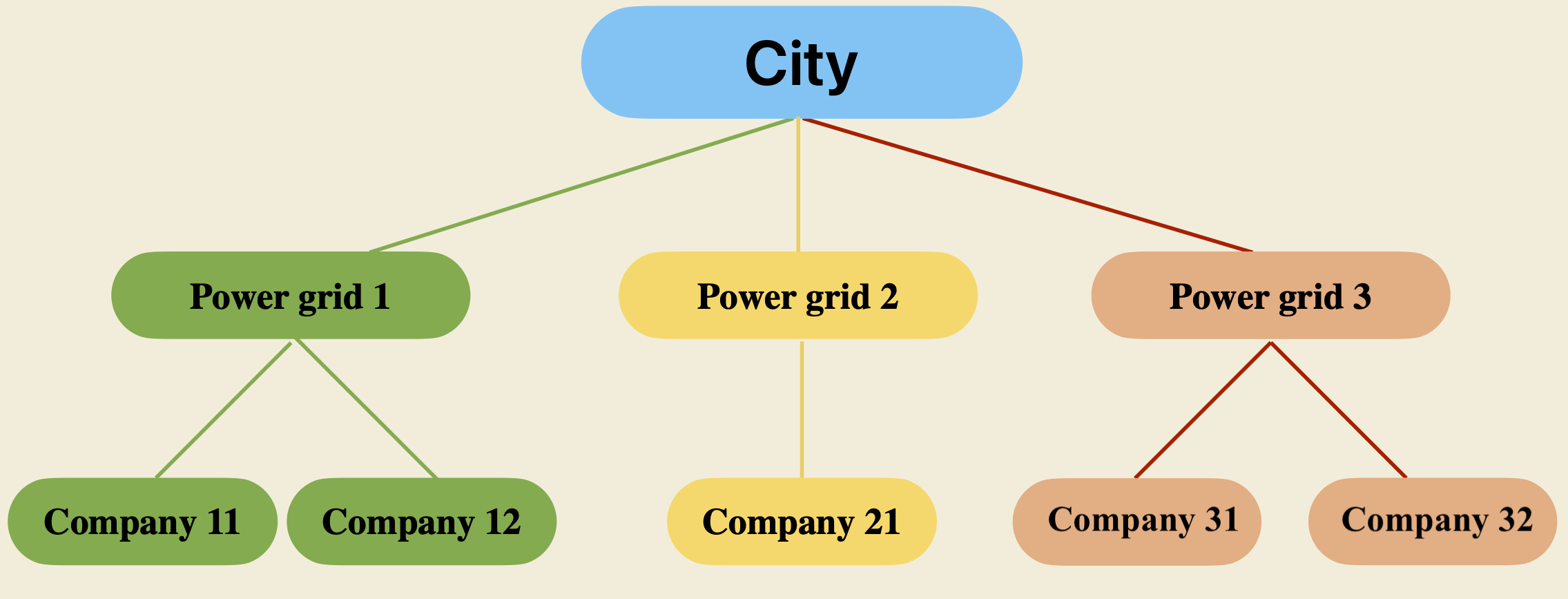

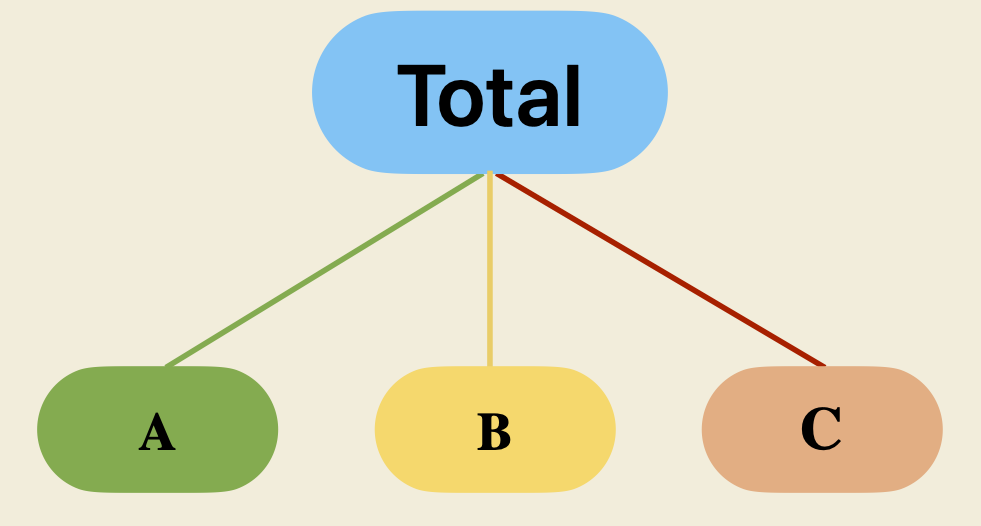

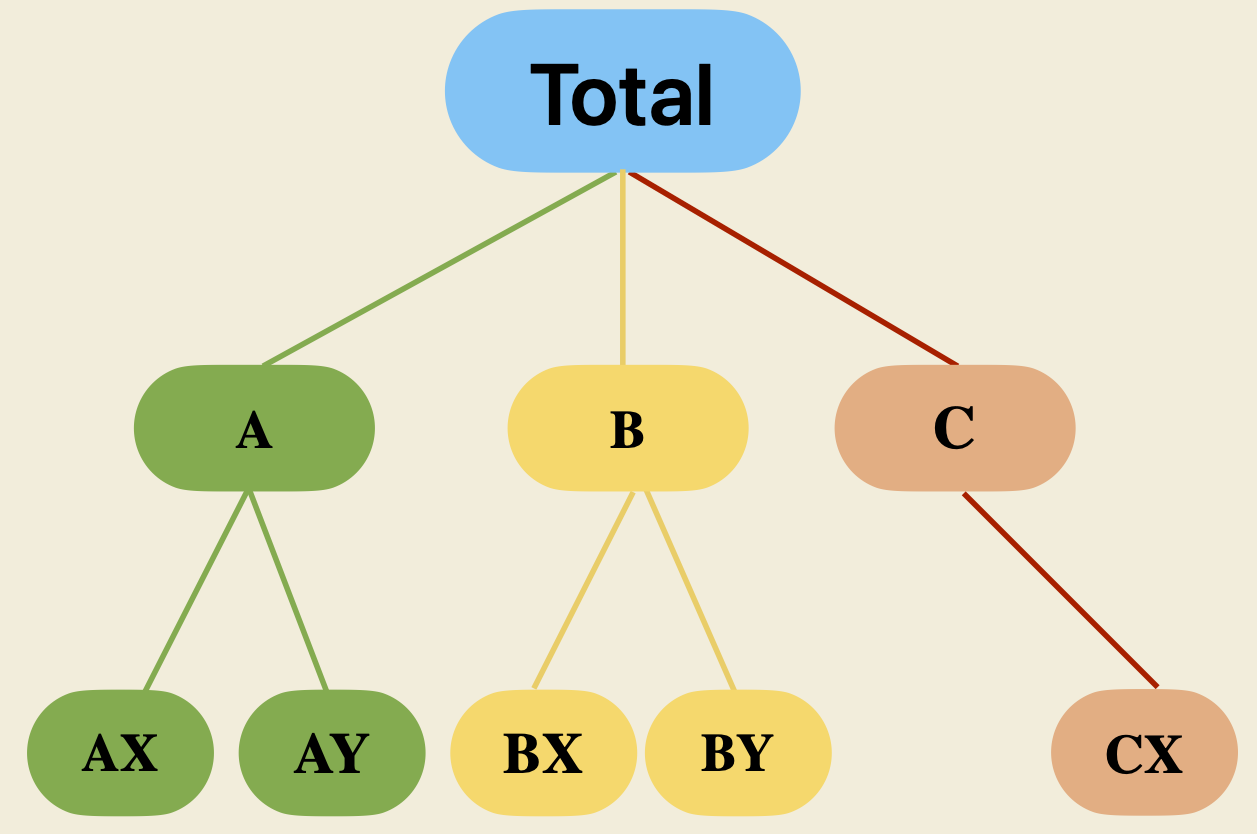

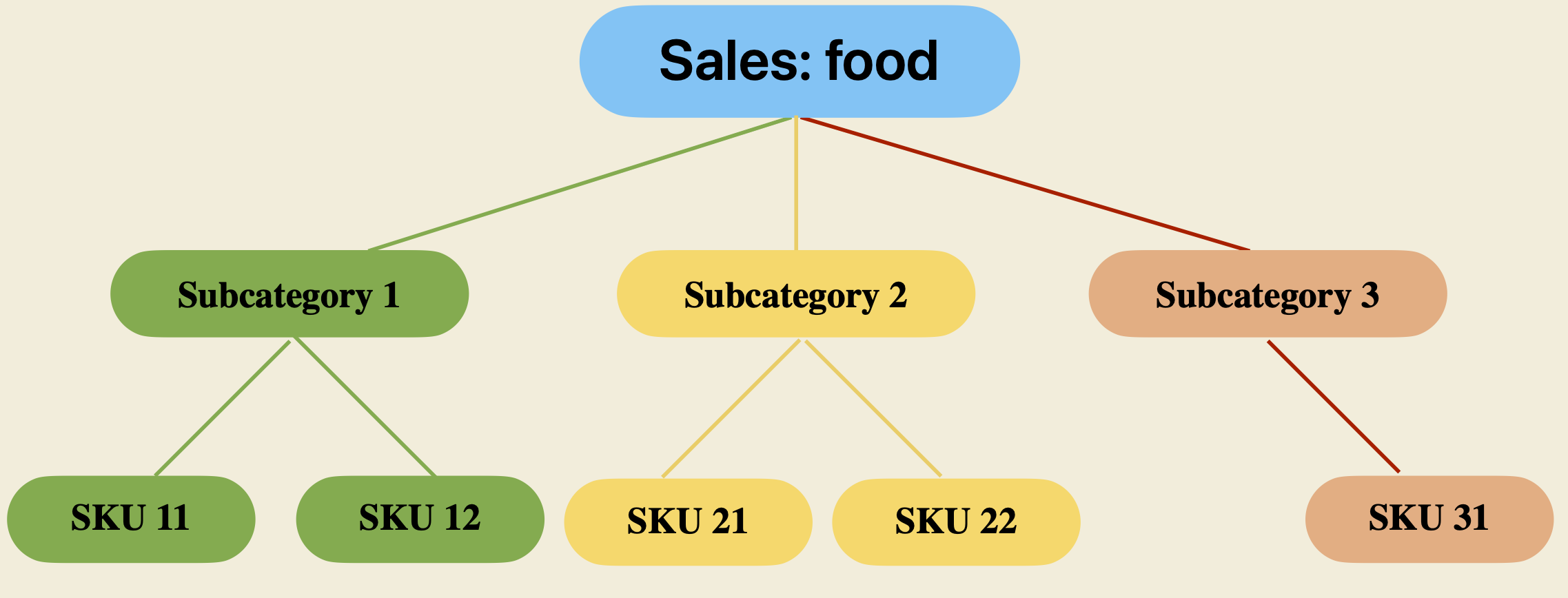

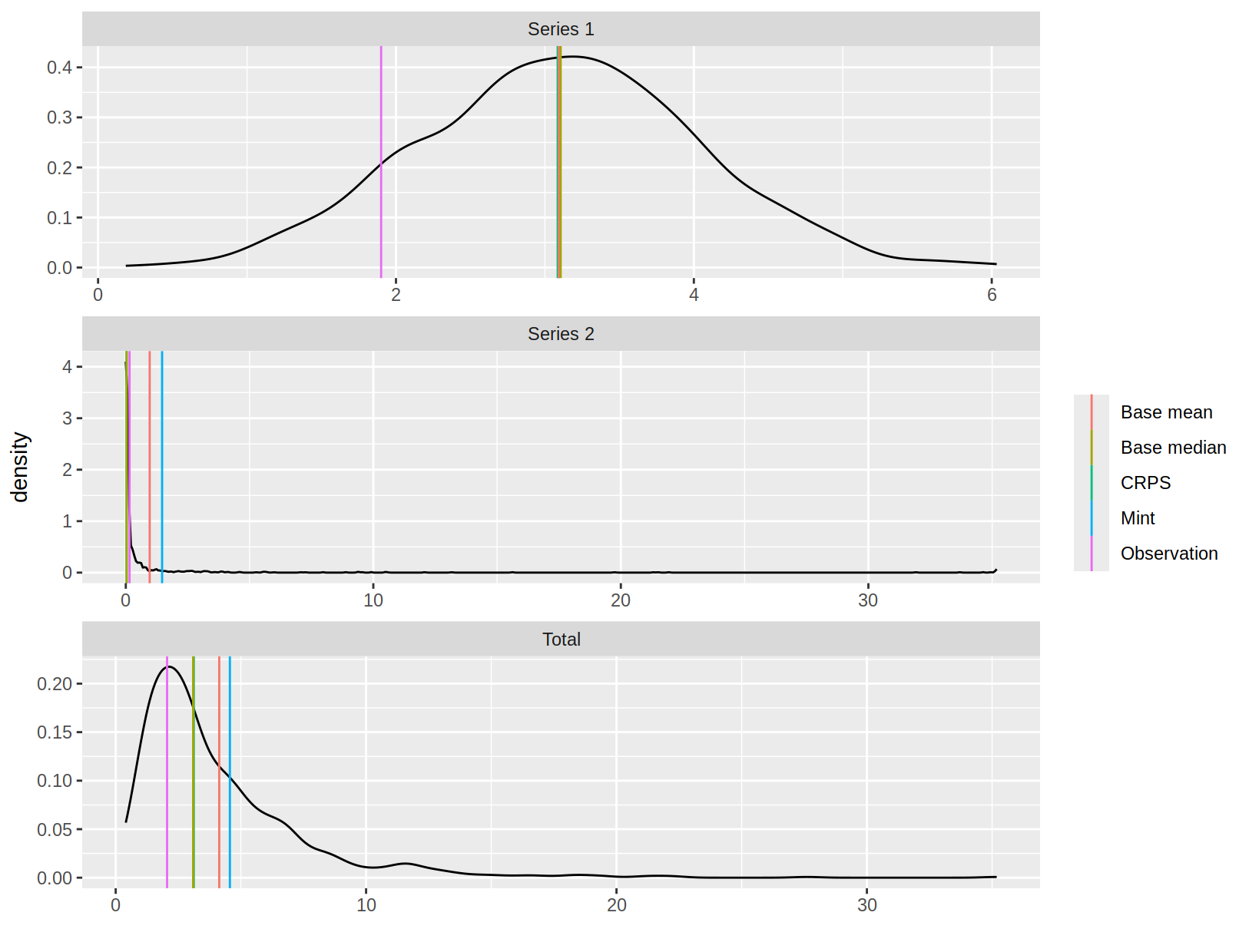

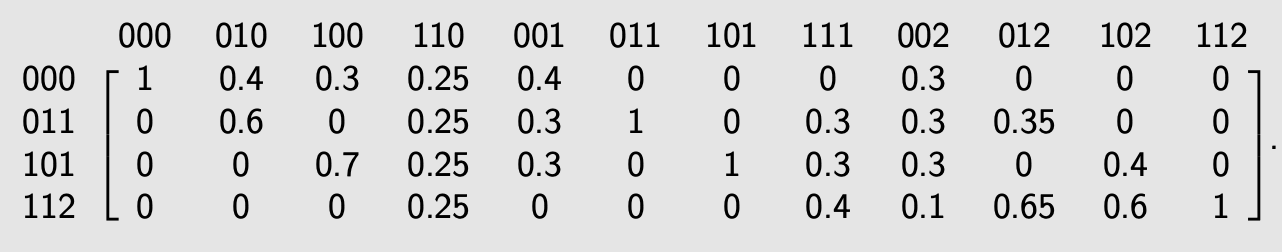

layout: true --- class: title-slide background-image: url("assets/titlebg.png") background-position: 100% 50% background-size: 100% 100% .content-box-green-trans[ .pull-left-3[  ] .pull-right-3[ # Hierarchical time series forecasting ## with optimal forecast reconciliation ### Yanfei Kang ]] --- background-image: url(figs/generation.jpg) background-size: cover .pull-middle[ .content-box-green-trans[ .large.white[Hierarchical time series] ]] --- class: center, hide-slide-number background-image: url(figs/retail.jpeg) background-size: cover # Retail --  -- .content-box-green.left.bold[👍 Forecasts of such hierarchies support supply chain management and production planning (Syntetos, Babai, Boylan, Kolassa, and Nikolopoulos, 2016).] --- class: center, hide-slide-number background-image: url("figs/smart-meter.jpeg") background-size: cover # Smart meter --  -- .content-box-green.left.bold[👍 Forecasts of such hierarchies support optimal grid management and resource planning.] --- # Aggregation constraints .pull-left-1[  Hierarchical series with linear constraints can be written as `$$\boldsymbol{y}_t = \boldsymbol{\color{Blue}{S}}\boldsymbol{\color{Red}{b_t}}.$$` ] -- .pull-right-2[ `\begin{aligned} \boldsymbol{y}_t =\left(\begin{array}{c} y_{\text {Total, }, t} \\ y_{A, t} \\ y_{B, t} \\ y_{C, t} \end{array}\right) =\color{Blue}{\underbrace{\left(\begin{array}{lll} 1 & 1 & 1\\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{array}\right)}_{\boldsymbol{S}}} \color{Red}{\underbrace{\left(\begin{array}{l} y_{A, t} \\ y_{B, t} \\ y_{C, t} \end{array}\right)}_{\boldsymbol{b_t}}}. \end{aligned}` ] --- # Aggregation constraints .pull-left-1[  Hierarchical series with linear constraints can be written as `$$\boldsymbol{y}_t = \boldsymbol{\color{Blue}{S}}\boldsymbol{\color{Red}{b_t}}.$$` ] -- .pull-right-2[ `\begin{aligned} \boldsymbol{y}_t =\left(\begin{array}{c} y_{\text {Total, }, t} \\ y_{A, t} \\ y_{B, t} \\ y_{C, t} \\ y_{AX, t} \\ y_{AY, t} \\ y_{BX, t} \\ y_{BY, t} \\ y_{CX, t} \end{array}\right) =\color{Blue}{\underbrace{\left(\begin{array}{lll} 1 & 1 & 1 & 1 & 1\\ 1 & 1 & 0 & 0 & 0\\ 0 & 0 & 1 & 1 & 0\\ 0 & 0 & 0 & 0 & 1 \\ 1 & 0 & 0 & 0 & 0\\ 0 & 1 & 0 & 0 & 0\\ 0 & 0 & 1 & 0 & 0\\ 0 & 0 & 0 & 1 & 0\\ 0 & 0 & 0 & 0 & 1 \end{array}\right)}_{\boldsymbol{S}}} \color{Red}{\underbrace{\left(\begin{array}{l} y_{AX, t} \\ y_{AY, t} \\ y_{BX, t} \\ y_{BY, t} \\ y_{CX, t} \end{array}\right)}_{\boldsymbol{b_t}}}. \end{aligned}` ] --- background-image: url(figs/generation.jpg) background-size: cover .pull-middle[ .content-box-green-trans[ .large.white[Hierarchical forecasting] .white.font110[*(related work)*] ]] --- # 📝 Problem .pull-left[ - We need forecasts at all levels. - Independent forecasts of all nodes will not add up. - We need *coherent* forecasts. ] .pull-right[  ] -- .content-box-green[ ### 📗 Hierarchical forecasting 20 years ago 1. Bottom up: ignores top nodes + noisy data at bottom level. 2. Top down: ignores bottom nodes + distribution of forecasts is hard. ] --- # Forecast reconciliation .center.content-box-gray[ ❓ How to make coherent forecasts using information from forecasts of **all nodes**?] -- .center[ `\(\Downarrow\)` ] .content-box-green[ ### 🙇 Forecast reconciliation (Hyndman, Ahmed, Athanasopoulos, and Shang, 2011) 1. Generate forecasts of all variables in a hierarchy (known as *base* forecasts). 2. Then *reconcile* these to ensure they respect *aggregation constraints*. ] --- # Notation - `\(\boldsymbol{\hat{y}}_{T+h|T}\)`: `\(h\)`-step **base forecasts** (incoherent) stacked in an `\(n\)`-vector, made at time `\(T\)`. `\(n\)` is the number of series in the hierarchy. .content-box-yellow[ Reconciled forecasts are of the form `$$\tilde{\boldsymbol{y}}_{T+h \mid T}=\boldsymbol{S} \boldsymbol{G} \hat{\boldsymbol{y}}_{T+h \mid T}$$` for some matrix `\(\boldsymbol{G}\)`. ] - `\(\boldsymbol{G}_{m\times n}\)`: base forecasts `\(\boldsymbol{\hat{y}}_{T+h|T}\)` `\(\Rightarrow\)` bottom-level forecasts. - `\(\boldsymbol{S}_{n\times m}\)`: bottom-level forecasts `\(\Rightarrow\)` forecasts for all levels. - `\(m\)`: number of bottom level series. --- # Forecast reconciliation .content-box-yellow[ `$$\tilde{\boldsymbol{y}}_{T+h \mid T}=\boldsymbol{S} \boldsymbol{G} \hat{\boldsymbol{y}}_{T+h \mid T}.$$` ] - If `\(\hat{\boldsymbol{y}}\)` is unbiased, then `\(\tilde{\boldsymbol{y}}\)` is unbiased iff `\(\boldsymbol{G}\boldsymbol{S} = \boldsymbol{I}_n\)`. - `\(\boldsymbol{S}\boldsymbol{G}\)` is projection matrix. - `\(\boldsymbol{V}_h=\operatorname{Var}\left[\boldsymbol{y}_{T+h}-\tilde{\boldsymbol{y}}_{T+h \mid T} \mid \boldsymbol{y}_1, \ldots, \boldsymbol{y}_T\right]=\boldsymbol{S G W}_h \boldsymbol{G}^{\prime} \boldsymbol{S}^{\prime}.\)` - `\(\boldsymbol{W}_h = \operatorname{Var}\left[\boldsymbol{y}_{T+h}-\hat{\boldsymbol{y}}_{T+h \mid T} \mid \boldsymbol{y}_1, \ldots, \boldsymbol{y}_T\right].\)` .center.content-box-gray[ Everything boils down to finding an optimal `\(\boldsymbol{G}\)`. ] --- # Minimum trace reconciliation .content-box-yellow[ ### Minimum trace (MinT) reconciliation (Shanika L. Wickramasuriya and Hyndman, 2019). The optimal `\(\boldsymbol{G}\)` that minimizes `\(\operatorname{tr}(\boldsymbol{V}_h)\)` such that `\(\boldsymbol{G}\boldsymbol{S} = \boldsymbol{I}_n\)` is given by `$$\boldsymbol{G}=\left(\boldsymbol{S}^{\prime} \boldsymbol{W}_h^{-1} \boldsymbol{S}\right)^{-1} \boldsymbol{S}^{\prime} \boldsymbol{W}_h^{-1}.$$` ] - Reconciled forecasts `\(\boldsymbol{\tilde{y}}_{t+h|T}\)` given by `\(\tilde{\boldsymbol{y}}_{t+h|T}=\boldsymbol{S}\left(\boldsymbol{S}^{\prime} \boldsymbol{W}_h \boldsymbol{S}\right)^{-1} \boldsymbol{S}^{\prime} \boldsymbol{W}_h \hat{\boldsymbol{y}}_{t+h|T}.\)` -- .center.content-box-gray[ ❓ How to estimate `\(\boldsymbol{W}_h=\text{Var}\left[\boldsymbol{y}_{T+h}-\hat{\boldsymbol{y}}_{T+h |T} | \boldsymbol{y}_1, \ldots, \boldsymbol{y}_T\right]\)`? ] --- # Choices of `\(\boldsymbol{G}\)` Different choices of `\(\boldsymbol{W}_h\)` give rise to different methods. | Reconciliation Method | `\(\boldsymbol{G}\)` | `\(\boldsymbol{W}_h\)` | | --------------- | -------------------------------------- |------------------ | | OLS | `\(\left(\boldsymbol{S}^{\prime} \boldsymbol{S}\right)^{-1} \boldsymbol{S}^{\prime}\)` | `\(\boldsymbol{\hat W}_{\text{ols}} = k_h \boldsymbol{I}\)` | | WLS(var) | `\(\left(\boldsymbol{S}^{\prime} \boldsymbol{\Lambda}_{V}^{-1} \boldsymbol{S}\right)^{-1} \boldsymbol{S}^{\prime} \boldsymbol{\Lambda}_V^{-1}\)` | `\(\boldsymbol{\hat W}_{V} = k_h \text{diag}(\hat{\boldsymbol{W}}_1) = k_h \boldsymbol{\Lambda}_V\)` | | WLS(struct) | `\(\left(\boldsymbol{S}^{\prime} \boldsymbol{\Lambda}_S^{-1} \boldsymbol{S}\right)^{-1} \boldsymbol{S}^{\prime} \boldsymbol{\Lambda}_S^{-1}\)` | `\(\boldsymbol{\hat W}_{S} = k_h \text{diag}(\boldsymbol{S1}) = k_h \boldsymbol{\Lambda}_S\)` | | MinT(sample) | `\(\left(\boldsymbol{S}^{\prime} \hat{\boldsymbol{W}}_{\text {sam }}^{-1} \boldsymbol{S}\right)^{-1} \boldsymbol{S}^{\prime} \hat{\boldsymbol{W}}_{\text {sam }}^{-1}\)` | `\(\boldsymbol{\hat W}_{\text{sam}} = k_h \hat{\boldsymbol{W}}_1\)` | | MinT(shrink) | `\(\left(\boldsymbol{S}^{\prime} \hat{\boldsymbol{W}}_{\text {shr}}^{-1} \boldsymbol{S}\right)^{-1} \boldsymbol{S}^{\prime} \hat{\boldsymbol{W}}_{\text {shr}}^{-1}\)` | `\(\boldsymbol{\hat{W}}_{\text{shr}} = \tau \text{diag}(\boldsymbol{\hat{W}_{\text{sam}}}) + (1-\tau)\boldsymbol{\hat{W}_{\text{sam}}}\)` | --- # With non-negativity - MinT reconciliation also minimizes `$$(\tilde{\boldsymbol{y}}-\hat{\boldsymbol{y}})^{\prime} \boldsymbol{W}^{-1}(\tilde{\boldsymbol{y}}-\hat{\boldsymbol{y}}).$$` - For data that cannot be negative, the minimization problem becomes .content-box-yellow[ `\begin{aligned} \underset{\boldsymbol{G}}{\min}\:(\hat{\boldsymbol{y}}-\tilde{\boldsymbol{y}})'\boldsymbol{W}^{-1}(\hat{\boldsymbol y}-\tilde{\boldsymbol{y}})\\ \textrm{s.t.}\:\:\tilde{\boldsymbol{b}}\geq 0. \end{aligned}` ] - Solved by quadratic programming (QP) using a block pivoting algorithm (Wickramasuriya, Turlach, and Hyndman, 2020). --- # Your turn .content-box-gray[ 1. Look into [tourism](https://tsibble.tidyverts.org/reference/tourism.html) data. 2. Go to **R**, and use **hts** package to forecast Australia tourism data. 3. Go to **Python**, and use **pyhts** to forecast ] --- # Complex scenarios .center.content-box-gray[ Classical forecast reconciliation methods do not necessarily work well in these complex scenarios. ] -- 1. Complex constraints `\(\Rightarrow\)` Reconciliation method that (automatically) keeps forecasts of a subset of variables immutable. 2. Complex continuous distributions `\(\Rightarrow\)` Reconciliation method suitable for any complex continuous distribution. 3. Complex discrete distributions `\(\Rightarrow\)` Reconciliation method suitable for discrete-valued data. 4. <span style="color: grey;">Complex structures `\(\Rightarrow\)` Reconciliation method that is robust in complex structures.</span> --- background-image: url(figs/generation.jpg) background-size: cover .pull-middle[ .content-box-green-trans[ .large.white[Hierarchical forecasting] .white.font110[*with immutable forecasts*] ]] --- # Motivation Forecast accuracy for individual variables may become `\(worse\)` after reconciling. -- .content-box-green[ ### Some operational settings in demand forecasting - **Judgemental forecasts:** forecasts of some key individual product lines are subject to careful judgemental adjustments by experts. - **Intermittency:** reconciling forecasts of non-intermittent and intermittent series may deteriorate forecast accuracy in both. - **New products:** no historical data, and thus no historical forecast errors. - **Promotional events:** series with a long history have more information on promotional effects. ] --- # Our work We want to keep forecasts of a subset of variables **immutable**. .content-box-yellow[ Find `\(\boldsymbol{\tilde{y}}\)` to minimise `$$(\tilde{\boldsymbol{y}}-\hat{\boldsymbol{y}})^{\prime} \boldsymbol{W}(\tilde{\boldsymbol{y}}-\hat{\boldsymbol{y}})$$` subject to 1. Coherence constraints 2. Immutability constraints 3. Non negativity constraints ] --- # Terminology and notation | Term | Notation | Dimension | Description | | :--------------- | :--------------------------------------|:------------ |:------------------ | | Full hierarchy | `\(\boldsymbol{y}=\boldsymbol{(w',v',u')'}\)` | `\(n\times 1\)` | All series. | | Basis | `\(\boldsymbol{b}=\boldsymbol{(v',u')'}\)`| `\(m \times 1\)` | Series used to define hierarchy, not necessarily the same as bottom level.| | Immutable | `\(\boldsymbol{u}\)` | `\(k\times 1\)`| Series with forecasts that do not change after reconciliation.| | Mutable | `\(\boldsymbol{(w',v')'}\)` | `\((m-k) \times 1\)` | Series with forecasts that do change after reconciliation.| | Non-basis | `\(\boldsymbol{w}\)` | `\((n-m) \times 1\)` | Series that can be determined if values of basis level and constraints are known.| --- # Aggregation constraints The summing matrix can be partitioned as `$$\boldsymbol{S} = \left(\begin{array}{ll} \boldsymbol{S}_1 & \boldsymbol{S}_2 \\ \boldsymbol{I}_{m-k} & \boldsymbol{0}_{(m-k)\times k} \\ \boldsymbol{0}_{k \times (m-k)} & \boldsymbol{I}_{k} \end{array}\right),$$` where `\(\boldsymbol{S}_1\)` and `\(\boldsymbol{S}_2\)` are `\((n-m)\times (m-k)\)` and `\((n-m)\times k\)` matrices. - Reconciled forecasts: `\(\boldsymbol{\tilde y}=\boldsymbol{(\tilde w',\tilde v',\tilde u')'}\)`. - Aggregation constraint: `\(\boldsymbol{\tilde{y} = S\tilde b} = \left(\begin{array}{c}\boldsymbol{S_1\tilde v + S_2 \tilde u} \\ \boldsymbol{\tilde v} \\ \boldsymbol{\tilde u}\end{array}\right)\)` `\(\Rightarrow\)` `\(\boldsymbol{\tilde w = S_1\tilde v + S_2 \tilde u}\)`. --- # Aggregation + Immutability .content-box-yellow[ Reconciled forecasts are found as the solution to the optimisation problem: `\begin{aligned} \min\limits_{\tilde{\boldsymbol{y}}} (\hat{\boldsymbol{y}} - \tilde{\boldsymbol{y}})^\prime \boldsymbol{W}^{-1}& (\hat{\boldsymbol{y}}-\tilde{\boldsymbol{y}}) \\ \textrm{s.t.} \quad \tilde{\boldsymbol{u}} &= \hat{\boldsymbol{u}},\\ \boldsymbol{S_1} \tilde{\boldsymbol{v}} + \boldsymbol{S}_2 \tilde{\boldsymbol{u}} &= \tilde{\boldsymbol{w}}, \end{aligned}` ] .center[ `\(\Downarrow\)` ] `$$\min\limits_{\tilde{\boldsymbol{v}}} (\check{\boldsymbol{\nu}} - \check{\boldsymbol{S}_1} \tilde{\boldsymbol{v}})^\prime \boldsymbol{W}_{\boldsymbol{\nu}}^{-1}(\check{\boldsymbol{\nu}} - \check{\boldsymbol{S}_1} \tilde{\boldsymbol{v}})$$` --- # Aggregation + Immutability .content-box-yellow[ ### The optimisation problem `$$\min\limits_{\tilde{\boldsymbol{v}}} (\check{\boldsymbol{\nu}} - \check{\boldsymbol{S}_1} \tilde{\boldsymbol{v}})^\prime \boldsymbol{W}_{\boldsymbol{\nu}}^{-1}(\check{\boldsymbol{\nu}} - \check{\boldsymbol{S}_1} \tilde{\boldsymbol{v}}),$$` where `$${\check{\boldsymbol{S}}_1} = \left[\begin{array}{c} \boldsymbol{S}_1 \\ \boldsymbol{I}_{m-k} \end{array}\right], \check{\boldsymbol{\nu}} = \left[\begin{array}{c} \hat{\boldsymbol{w}} - \boldsymbol{S}_2 \hat{\boldsymbol{u}} \\ \hat{\boldsymbol{v}} \end{array}\right],$$` and `\(\boldsymbol{W}_{\boldsymbol{\nu}}^{-1}\)` consists of rows and columns corresponding to mutable series in `\(\boldsymbol{W}^{-1}\)`. ] --- # Aggregation + Immutability .content-box-yellow[ ### The GLS solution `$$\tilde{\boldsymbol{v}} = (\check{\boldsymbol{S}}_1^\prime\boldsymbol{W}_{\boldsymbol{\nu}}^{-1}\check{\boldsymbol{S}}_1)^{-1}\check{\boldsymbol{S}}_1^\prime\boldsymbol{W}_{\boldsymbol{\nu}}^{-1}\check{\boldsymbol{\nu}}.$$` ] - Denote `\(\hat{\boldsymbol{G}}_1 = (\check{\boldsymbol{S}}_1^\prime\boldsymbol{W}_{\boldsymbol{\nu}}^{-1}\check{\boldsymbol{S}}_1)^{-1}\check{\boldsymbol{S}}_1^\prime\boldsymbol{W}_{\boldsymbol{\nu}}^{-1}\)`. Then we have `$$\tilde{\boldsymbol{b}} = \left[\begin{array}{c}\tilde{\boldsymbol{v}} \\ \hat{\boldsymbol{u}}\end{array}\right]=\left[\begin{array}{c} \hat{\boldsymbol{G}}_1 & \boldsymbol{0}_{(m-k)\times k} \\ \boldsymbol{0}_{k \times (n-k)} & \boldsymbol{I}_{k} \end{array}\right]\left(\boldsymbol{I}_n - \left[\begin{array}{c} \boldsymbol{0}_{(n-m)\times (n-k)} & \boldsymbol{S}_2 \\ \boldsymbol{0}_{m\times (n-k)} & \boldsymbol{0}_{m\times k} \end{array}\right]\right)\left[\begin{array}{c} \hat{\boldsymbol{w}} \\ \hat{\boldsymbol{v}} \\ \hat{\boldsymbol{u}} \end{array}\right] = \hat{\boldsymbol{G}}\hat{\boldsymbol{y}}.$$` - The **unbiased** reconciled forecasts of the hierarchy can be obtained through `\(\tilde{\boldsymbol{y}} = \boldsymbol{S}\tilde{\boldsymbol{b}} = \boldsymbol{S}\hat{\boldsymbol{G}}\hat{\boldsymbol{y}}\)`. --- # Aggregation + Immutability + Non-negativity - Our proposed method for immutable forecasts can be combined with non-negative reconciliation, then the optimisation problem is `\begin{aligned} \min\limits_{\tilde{\boldsymbol{v}}} (\check{\boldsymbol{\nu}} - \check{\boldsymbol{S}_1} \tilde{\boldsymbol{v}})^\prime \boldsymbol{W}_{\boldsymbol{\nu}}^{-1}(\check{\boldsymbol{\nu}} - \check{\boldsymbol{S}_1} \tilde{\boldsymbol{v}}) \\ \textrm{s.t.} \quad \check{\boldsymbol{S}}_1\check{\boldsymbol{v}} \geq \boldsymbol{d}, \end{aligned}` where `\(\boldsymbol{d} = [-\boldsymbol{S}_2\hat{\boldsymbol{u}} \quad \boldsymbol{0}]'\)`. - This quadratic programming problem has a unique global solution and can be solved by **quadprog** package for **R**. - For high dimensions, use the block principal pivoting (BPV) algorithm or scaled gradient projection algorithm to accelerate the solution (Wickramasuriya, Turlach, and Hyndman, 2020). --- # Forecasting sales data from Alibaba .pull-left-1[ - Three levels. - Top: Food sales. - Middle: 40 categories. - Bottom: 1905 items. - Many are intermittent. - Several spikes each year. ] .pull-right-2[ <center> <img src="figs/total_sale.jpg" height="300px"/> </center> .font50[This is supported by Alibaba Group through the Alibaba Innovative Research Program.] ] -- .center.content-box-gray[ 🙇 Aim is to forecast sales during **eight promotional events** (11.11, New year, 3.8, Anniversary, 6.18, Summer, 8.8, and 8.9) from the end of 2020 to 2021-09-12 using observations starting from 2019-01-01. ] --- # Set up .content-box-green[**Base models**] - Intermittent series (at least 60% of zeros in the last two months): simple exponential smoothing. - Others: regression model with ARIMA errors (promotion strength as a predictor) and Box Cox transformation. .content-box-green[**Immutable series**] - Top level. - Intermittent series. - Series with longer histories (more than 1 year). --- # Forecasting results (MASE, `\(WLS_v\)`) .font130[ | <div style="width:290px">Level</div> | <div style="width:150px">Base</div> | <div style="width:150px">C</div> |<div style="width:150px">CN</div> | <div style="width:150px">CI</div> | <div style="width:150px">CIN</div> | |:--------------------------------------- | ---------------:|---------------:|---------------:|--------------------:|---------------:| |Top (I) | 2.94 | 2.75 | 2.77 | 2.94 | 2.94 | | Middle (M) | 2.66|2.39|2.40|2.43|2.47| |Bottom(M) | 2.04|1.86|1.83|1.97|1.88| |Intermittent(I) | 0.11|1.52|1.52|0.11|0.11| |Longer(I) | 1.08|1.58|1.19|1.08|1.08| ] - "C" and "CN" shows improvements in the Top (I), Middle (M) and Bottom (M) levels, also leading to a severe deterioration in Intermittent (I) and Longer(I). - "CI" improves forecast accuracy for mutable series, without an associated decrease in accuracy for immutable series. "CIN" performs even better. --- # Automation of immutable series selection ### A heuristic method based on clustering - Clustering Round 1: perform `\(k\)`-means clustering for level `\(l\)`, with `\(k_l = \frac{1}{2} \times n_l\)`, where `\(n_l\)` is the number of series in level `\(l\)`. Within each cluster, select the series with higher historical base forecasting accuracy. - Clustering Round 2: denote the selected series set in Round 1 as `\(\mathcal{S}\)`, and perform clustering with `\(k\)` determined by Gap statistic. In each cluster, select the series with the highest historical base forecasting accuracy as the immutable nodes. --- # Automation of immutable series selection ### An optimisation method - Step 1: Determine the basis time series using subset selection method by Wang, Hyndman and Wickramasuriya (2024). - Step 2: Rewrite the summing and weighting matrices as `\(\boldsymbol G^*\)` and `\(\boldsymbol S^*\)`. Determine immutable series by the following optimisation problem: `\begin{aligned} \min_{\boldsymbol{G}^*, ~\boldsymbol{A}} \quad & \frac{1}{2} (\hat{\boldsymbol y} - \boldsymbol{S^* G^* y})^T \boldsymbol{W}^{-1} (\hat{\boldsymbol y} - \boldsymbol{S^* G^* y}) + \lambda_0 \sum_{j=1}^{m} \boldsymbol{A}_{jj}, \\ \text{s.t.} \quad & \boldsymbol{G^* S^* = I},\\ & \boldsymbol{G^* = (I - A) \check{G}} + \begin{pmatrix} \boldsymbol{0}_{m \times (n-m)} & \boldsymbol{I_m} \end{pmatrix}, \end{aligned}` where `\(\boldsymbol A\)` is a diagonal matrix indicating immutable nodes. `\(a_{ii} = 1\)` means that the `\(i\)`-th row of `\(\boldsymbol{(I - A) \check{G}}\)` is zeroed out. --- # Forecasting Australian tourism ### A clustering method (RMSE) <table> <thead> <tr> <th style="text-align:left;"> Level </th> <th style="text-align:left;"> Type </th> <th style="text-align:right;"> Base </th> <th style="text-align:right;"> OLS </th> <th style="text-align:right;"> WLSs </th> <th style="text-align:right;"> WLSv </th> <th style="text-align:right;"> MinT (shrinkage) </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> 1 </td> <td style="text-align:left;"> 1939.96 </td> <td style="text-align:right;"> U </td> <td style="text-align:right;"> 1978.88 </td> <td style="text-align:right;"> 2316.67 </td> <td style="text-align:right;"> 2435.68 </td> <td style="text-align:right;"> 2311.33 </td> </tr> <tr> <td style="text-align:left;"> </td> <td style="text-align:left;"> </td> <td style="text-align:right;"> C </td> <td style="text-align:right;"> <b>1939.96</b> </td> <td style="text-align:right;"> <b>1939.96</b> </td> <td style="text-align:right;"> <b>1939.96</b> </td> <td style="text-align:right;"> <b>1939.96</b> </td> </tr> <tr> <td style="text-align:left;"> 2 </td> <td style="text-align:left;"> 417.06 </td> <td style="text-align:right;"> U </td> <td style="text-align:right;"> 408.96 </td> <td style="text-align:right;"> 449.99 </td> <td style="text-align:right;"> 466.2 </td> <td style="text-align:right;"> 449.67 </td> </tr> <tr> <td style="text-align:left;"> </td> <td style="text-align:left;"> </td> <td style="text-align:right;"> C </td> <td style="text-align:right;"> <b>407.24</b> </td> <td style="text-align:right;"> <b>413.62</b> </td> <td style="text-align:right;"> <b>420.95</b> </td> <td style="text-align:right;"> <b>419.19</b> </td> </tr> <tr> <td style="text-align:left;"> 3 </td> <td style="text-align:left;"> 174.2475 </td> <td style="text-align:right;"> U </td> <td style="text-align:right;"> <b>159.21</b> </td> <td style="text-align:right;"> 165.62 </td> <td style="text-align:right;"> 168.75 </td> <td style="text-align:right;"> <b>164.92</b> </td> </tr> <tr> <td style="text-align:left;"> </td> <td style="text-align:left;"> </td> <td style="text-align:right;"> C </td> <td style="text-align:right;"> 161.22 </td> <td style="text-align:right;"> <b>162.06</b> </td> <td style="text-align:right;"> <b>167.19</b> </td> <td style="text-align:right;"> 165.59 </td> </tr> <tr> <td style="text-align:left;"> 4 </td> <td style="text-align:left;"> 90.54 </td> <td style="text-align:right;"> U </td> <td style="text-align:right;"> <b>87.46</b> </td> <td style="text-align:right;"> 88.67 </td> <td style="text-align:right;"> <b>88.93</b> </td> <td style="text-align:right;"> <b>87.94</b> </td> </tr> <tr> <td style="text-align:left;"> </td> <td style="text-align:left;"> </td> <td style="text-align:right;"> C </td> <td style="text-align:right;"> 87.9 </td> <td style="text-align:right;"> <b>88.36</b> </td> <td style="text-align:right;"> 89.47 </td> <td style="text-align:right;"> 89.94 </td> </tr> </tbody> </table> --- # Forecasting Australian tourism ### An optimisation method (RMSE) <table> <thead> <tr> <th style="text-align:left;"> Level </th> <th style="text-align:left;"> Type </th> <th style="text-align:right;"> Base </th> <th style="text-align:right;"> OLS </th> <th style="text-align:right;"> WLSs </th> <th style="text-align:right;"> WLSv </th> <th style="text-align:right;"> MinT (shrinkage) </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> 1 </td> <td style="text-align:left;"> 1939.96 </td> <td style="text-align:right;"> U </td> <td style="text-align:right;"> 1978.88 </td> <td style="text-align:right;"> 2316.67 </td> <td style="text-align:right;"> 2435.68 </td> <td style="text-align:right;"> 2311.33 </td> </tr> <tr> <td style="text-align:left;"> </td> <td style="text-align:left;"> </td> <td style="text-align:right;"> C </td> <td style="text-align:right;"> <b>1939.96</b> </td> <td style="text-align:right;"> <b>1939.96</b> </td> <td style="text-align:right;"> <b>1939.96</b> </td> <td style="text-align:right;"> <b>1939.96</b> </td> </tr> <tr> <td style="text-align:left;"> 2 </td> <td style="text-align:left;"> 417.06 </td> <td style="text-align:right;"> U </td> <td style="text-align:right;"> 408.96 </td> <td style="text-align:right;"> 449.99 </td> <td style="text-align:right;"> 466.2 </td> <td style="text-align:right;"> 449.67 </td> </tr> <tr> <td style="text-align:left;"> </td> <td style="text-align:left;"> </td> <td style="text-align:right;"> C </td> <td style="text-align:right;"> <b>405.2</b> </td> <td style="text-align:right;"> <b>408.47</b> </td> <td style="text-align:right;"> <b>420.35</b> </td> <td style="text-align:right;"> <b>410.79</b> </td> </tr> <tr> <td style="text-align:left;"> 3 </td> <td style="text-align:left;"> 174.2475 </td> <td style="text-align:right;"> U </td> <td style="text-align:right;"> 159.21 </td> <td style="text-align:right;"> 165.62 </td> <td style="text-align:right;"> 168.75 </td> <td style="text-align:right;"> 164.92 </td> </tr> <tr> <td style="text-align:left;"> </td> <td style="text-align:left;"> </td> <td style="text-align:right;"> C </td> <td style="text-align:right;"> <b>158.72</b> </td> <td style="text-align:right;"> <b>158.48</b> </td> <td style="text-align:right;"> <b>161.35</b> </td> <td style="text-align:right;"> <b>158.72</b> </td> </tr> <tr> <td style="text-align:left;"> 4 </td> <td style="text-align:left;"> 90.54 </td> <td style="text-align:right;"> U </td> <td style="text-align:right;"> 87.46 </td> <td style="text-align:right;"> 88.67 </td> <td style="text-align:right;"> 88.93 </td> <td style="text-align:right;"> 87.94 </td> </tr> <tr> <td style="text-align:left;"> </td> <td style="text-align:left;"> </td> <td style="text-align:right;"> C </td> <td style="text-align:right;"> <b>87.35</b> </td> <td style="text-align:right;"> <b>87.57</b> </td> <td style="text-align:right;"> <b>87.49</b> </td> <td style="text-align:right;"> <b>86.63</b> </td> </tr> </tbody> </table> --- background-image: url(figs/generation.jpg) background-size: cover .pull-middle[ .content-box-green-trans[ .large.white[Hierarchical forecasting] .white.font110[*for complex continuous distributions*] ]] --- # Motivation - Times series from different levels exhibit various distributions, which are often non-normal or non-parametric. - MinT only considers the covariance matrix of the forecast distributions, neglecting other information such as their shapes. - We propose a forecast reconciliation method suitable for any complex continuous distribution. - We obtain coherent point forecasts and probabilistic forecasts by maximizing the energy score (ES) of the reconciled forecasts. --- # Reconciliation method for complex continuous distributions - Core idea: find the closest coherent point to the base forecast distributions. - We use ES (Energy Score) to measure the distance: `$$\text{ES}(\hat{\boldsymbol f}, \boldsymbol y) = \frac{1}{2} \left[ \mathbb{E}_{\hat {\boldsymbol f}} \left| \boldsymbol Y - \boldsymbol Y' \right|^\beta - \mathbb{E}_{\hat {\boldsymbol f}} \left| \boldsymbol Y - \boldsymbol y \right|^\beta \right],$$` which can be estimated by sampling from `\(\hat{\boldsymbol f}\)` via copula methods. - ES is typically used to evaluate forecast distributions. Here we assume forecast distributions are given, we find coherent points in the supports of these distributions with the largest ES. --- # Reconciled point forecasts We get reconciled point forecasts by finding the closest coherent point to the base forecast distributions: `\begin{aligned} &\max_{\boldsymbol{\tilde b}} \text{ES}(\hat{\boldsymbol f}, \boldsymbol{S\tilde b}) \\ \Rightarrow &\max_{\boldsymbol{\tilde b}} \left[ \frac{1}{N} \sum_{i=1}^N \left[ \frac{1}{2} \left| \boldsymbol{y}^{[i]} - \hat{\boldsymbol{y}}^{[i]} \right|^\beta - \left| \boldsymbol{y}^{[i]} - \boldsymbol{S\tilde b} \right|^\beta \right] \right] \\ \Rightarrow &\min_{\boldsymbol{\tilde b}} \left[ \frac{1}{N} \sum_{i=1}^N \left| \boldsymbol{y}^{[i]} - \boldsymbol{S\tilde b} \right|^\beta \right]. \end{aligned}` --- # An intuitive example .pull-left[  ] .pull-right[ - Normal distribution + log-normal distribution. - Reconciled forecasts using MinT for two heavy-tailed variables tend to shift towards the tail. - Proposed method keeps the reconciled forecasts close from the median of base forecasts. ] --- # Reconciled probabilistic forecasts To obtain reconciled probabilistic forecasts, for each sample from the base forecast distributions `\(\hat{\boldsymbol{y}}^{[i]}\)`, we optimise `\begin{aligned} &\max_{\boldsymbol{\tilde b}^{[i]}} \text{ES}(\hat{\boldsymbol f}, \boldsymbol{S\tilde b}^{[i]}) - \alpha \left\| \hat{\boldsymbol{y}}^{[i]} - \boldsymbol{S\tilde b}^{[i]} \right\|^\beta \\ \Rightarrow & \max_{\boldsymbol{\tilde b}^{[i]}} \left[ \frac{1}{N} \sum_{j=1}^N \left( \frac{1}{2} \left\| \hat{\boldsymbol{y}}^{[j]} - \hat{\boldsymbol{y}}_{[j]} \right\|^\beta - \left\| \hat{\boldsymbol{y}}^{[j]} - \boldsymbol{S\tilde b}^{[i]} \right\|^\beta - \alpha \left\| \hat{\boldsymbol{y}}^{[i]} - \boldsymbol{S\tilde b}^{[i]} \right\|^\beta \right) \right] \\ \Rightarrow & \min_{\boldsymbol{\tilde b}^{[i]}} \left[ \frac{1}{N} \sum_{j \neq i} \left\| \hat{\boldsymbol{y}}^{[j]} - \boldsymbol{S\tilde b}^{[i]} \right\|^\beta + \frac{N \alpha + 1}{N} \left\| \hat{\boldsymbol{y}}^{[i]} - \boldsymbol{S\tilde b}^{[i]} \right\|^\beta \right]. \end{aligned}` This means we find the closest coherent point near the sample that maximise ES. --- # Forecasting household electricity consumption in a region of the UK - Data: [semi-hourly household electricity usage data](https://www.ofgem.gov.uk/energy-policy-and-regulation/policy-and-regulatory-programmes/energy-demand-research-project-edrp) from the East Midlands region of the UK. - 5 levels: NUTS, NUTS1, NUTS2, NUTS3, NUTS4, and the bottom level, which respectively contain 1, 3, 11, 40, and 1582 time series. - Setup: kernel density estimation method for bottom level forecasting, and TBATS to forecast other levels. --- # Forecasting household electricity consumption in a region of the UK ### Point forecasting (MAE) | Method | NUTS | NUTS1 | NUTS3 | NUTS4 | Bottom | |-------------------|-------|-------|-------|-------|------| | Base | 33.87 | 11.30 | 3.02 | **1.21** | 0.11 | | Bottom-Up | 35.97 | 11.90 | 3.70 | 1.26 | 0.11 | | MinT (Variance) | 30.07 | 11.48 | 3.59 | 1.38 | 0.11 | | ES | **28.98** | **10.75** | **3.34** | 1.22 | **0.11** | --- # Forecasting household electricity consumption in a region of the UK ### Probabilistic forecasting (CRPS) | Method | NUTS | NUTS1 | NUTS3 | NUTS4 | Bottom | |-------------------|-------|-------|-------|-------|------| | Base | 24.38 | 7.64 | **1.91** | **0.80** | 0.08 | | Bottom-Up | 37.92 | 11.77 | 2.90 | 0.94 | 0.08 | | MinT (Variance) | 20.44 | **6.91** | 2.22 | 0.89 | 0.08 | | ES | **19.67** | 7.00 | 1.99 | 0.83 | **0.07** | --- background-image: url(figs/generation.jpg) background-size: cover .pull-middle[ .content-box-green-trans[ .large.white[Forecast reconciliation] .white.font110[*for discrete distributions*] ]] --- # Motivation - Reconciliation approaches are mostly designed for continuous-valued HTS. - Projection may produce non-integer and negative forecasts. --- # An example - `\(Y_3 = Y_1 + Y_2,\)` where `\(Y_1\)` and `\(Y_2\)` are binary. - Assume their forecasts are distributed as | Value | `\(\hat y_3\)` | `\(\hat y_1\)` | `\(\hat y_2\)` | |-----|-----|-----|-----| | 0 | 0.1 | 0.1 | 0.1 | | 1 | 0.3 | 0.9 | 0.9 | | 2 | 0.6 | 0 | 0 | - Under independence, `\(P(Y_1 = 0, Y_2=1, Y_3=0) = 0.1 \times 0.9 \times 0.1 = 0.009\)`, which assigns non-zero probability to an incoherent point. - We construct the coherent distribution by assigning mass from incoherent points to coherent points in the predictive distribution. --- # DFR: discrete forecast reconciliation - `\(q\)`-dimensional base forecasts: `\(\hat{\boldsymbol{\pi}} = [P(Y_1 = 0, Y_2 = 0, Y_3 = 0), P(Y_1 = 1, Y_2 = 0, Y_3 = 0), \dots]'.\)` - `\(r\)`-dimensional reconciled forecasts: `\(\tilde{\boldsymbol{\pi}} = [P(Y_1 = 0, Y_2 = 0, Y_3 = 0), P(Y_1 = 1, Y_2 = 0, Y_3 = 1), \dots]'.\)` - `\(\tilde{\boldsymbol\pi} = \boldsymbol{A}\hat{\boldsymbol{\pi}},\)` where `\(\boldsymbol{A}=[a_{ij}], \text{with}~\sum_{j=1}^r a_{ij} = 1, \quad 0 \leq a_{ij} \leq 1, \quad i = 1, \ldots, r, \quad j = 1, \ldots, q.\)` --- # An example of `\(A\)` - `\(\boldsymbol{A}\)` proportionally assigning the probability of each point in incoherent domain to points in the coherent domain. - Assignment restriction: the probability is only assigned to the closest coherent points. - An example of `\(A\)`:  --- # DFR: discrete forecast reconciliation - By minimising the average Brier Score of `\(\tau\)` reconciled forecasts, we can find the optimal reconciliation matrix. `\begin{aligned} &\min_{A}\frac{1}{\tau}\sum_{t=1}^{\tau}(\mathbf{A}\hat{\boldsymbol{\pi}}_t - \mathbf{z}_t)'(\mathbf{A}\hat{\boldsymbol{\pi}}_t - \mathbf{z}_t) \\ =&\min_{a_{ij}}\frac{1}{\tau}\left[\sum_{i=1}^r\left(\sum_{j=1}^q a_{ij}\hat\pi_{jt} - z_i^t\right)^2\right] \\ & \text{s.t.} \sum_{i=1}^r a_{ij} = 1, 0\leq a_{ij} \leq 1. \end{aligned}` - This is a standard quadratic programming problem. --- # Forecasting criminal offenses in Washington, D.C. - We take [the data at a census tract level](https://crimecards.dc.gov/.) resulting in 231 weekly time series. - Two-level temporal hierarchies: weekly (bottom) and monthly (top).  --- # Conclusions 1. Complex constraints `\(\Rightarrow\)` Reconciliation method that (automatically) keeps forecasts of a subset of variables immutable. 2. Complex continuous distributions `\(\Rightarrow\)` Reconciliation method suitable for any complex continuous distribution. 3. Complex discrete distributions `\(\Rightarrow\)` Reconciliation method suitable for discrete-valued data. 4. <span style="color: grey;">Complex structures `\(\Rightarrow\)` Reconciliation method that is robust in complex structures.</span> --- background-image: url("assets/titlepage_buaa.png") background-position: 100% 50% background-size: 100% 100% .font300.bold[ **Thanks!** ] <br> <br> <br> .content-box-gray.font160[ <svg aria-hidden="true" role="img" viewBox="0 0 496 512" style="height:1em;width:0.97em;vertical-align:-0.125em;margin-left:auto;margin-right:auto;font-size:inherit;fill:#1da1f2;overflow:visible;position:relative;"><path d="M165.9 397.4c0 2-2.3 3.6-5.2 3.6-3.3.3-5.6-1.3-5.6-3.6 0-2 2.3-3.6 5.2-3.6 3-.3 5.6 1.3 5.6 3.6zm-31.1-4.5c-.7 2 1.3 4.3 4.3 4.9 2.6 1 5.6 0 6.2-2s-1.3-4.3-4.3-5.2c-2.6-.7-5.5.3-6.2 2.3zm44.2-1.7c-2.9.7-4.9 2.6-4.6 4.9.3 2 2.9 3.3 5.9 2.6 2.9-.7 4.9-2.6 4.6-4.6-.3-1.9-3-3.2-5.9-2.9zM244.8 8C106.1 8 0 113.3 0 252c0 110.9 69.8 205.8 169.5 239.2 12.8 2.3 17.3-5.6 17.3-12.1 0-6.2-.3-40.4-.3-61.4 0 0-70 15-84.7-29.8 0 0-11.4-29.1-27.8-36.6 0 0-22.9-15.7 1.6-15.4 0 0 24.9 2 38.6 25.8 21.9 38.6 58.6 27.5 72.9 20.9 2.3-16 8.8-27.1 16-33.7-55.9-6.2-112.3-14.3-112.3-110.5 0-27.5 7.6-41.3 23.6-58.9-2.6-6.5-11.1-33.3 2.6-67.9 20.9-6.5 69 27 69 27 20-5.6 41.5-8.5 62.8-8.5s42.8 2.9 62.8 8.5c0 0 48.1-33.6 69-27 13.7 34.7 5.2 61.4 2.6 67.9 16 17.7 25.8 31.5 25.8 58.9 0 96.5-58.9 104.2-114.8 110.5 9.2 7.9 17 22.9 17 46.4 0 33.7-.3 75.4-.3 83.6 0 6.5 4.6 14.4 17.3 12.1C428.2 457.8 496 362.9 496 252 496 113.3 383.5 8 244.8 8zM97.2 352.9c-1.3 1-1 3.3.7 5.2 1.6 1.6 3.9 2.3 5.2 1 1.3-1 1-3.3-.7-5.2-1.6-1.6-3.9-2.3-5.2-1zm-10.8-8.1c-.7 1.3.3 2.9 2.3 3.9 1.6 1 3.6.7 4.3-.7.7-1.3-.3-2.9-2.3-3.9-2-.6-3.6-.3-4.3.7zm32.4 35.6c-1.6 1.3-1 4.3 1.3 6.2 2.3 2.3 5.2 2.6 6.5 1 1.3-1.3.7-4.3-1.3-6.2-2.2-2.3-5.2-2.6-6.5-1zm-11.4-14.7c-1.6 1-1.6 3.6 0 5.9 1.6 2.3 4.3 3.3 5.6 2.3 1.6-1.3 1.6-3.9 0-6.2-1.4-2.3-4-3.3-5.6-2z"/></svg> [github.com/kl-lab](https://github.com/kl-lab) <svg aria-hidden="true" role="img" viewBox="0 0 512 512" style="height:1em;width:1em;vertical-align:-0.125em;margin-left:auto;margin-right:auto;font-size:inherit;fill:#1da1f2;overflow:visible;position:relative;"><path d="M352 256c0 22.2-1.2 43.6-3.3 64H163.3c-2.2-20.4-3.3-41.8-3.3-64s1.2-43.6 3.3-64H348.7c2.2 20.4 3.3 41.8 3.3 64zm28.8-64H503.9c5.3 20.5 8.1 41.9 8.1 64s-2.8 43.5-8.1 64H380.8c2.1-20.6 3.2-42 3.2-64s-1.1-43.4-3.2-64zm112.6-32H376.7c-10-63.9-29.8-117.4-55.3-151.6c78.3 20.7 142 77.5 171.9 151.6zm-149.1 0H167.7c6.1-36.4 15.5-68.6 27-94.7c10.5-23.6 22.2-40.7 33.5-51.5C239.4 3.2 248.7 0 256 0s16.6 3.2 27.8 13.8c11.3 10.8 23 27.9 33.5 51.5c11.6 26 20.9 58.2 27 94.7zm-209 0H18.6C48.6 85.9 112.2 29.1 190.6 8.4C165.1 42.6 145.3 96.1 135.3 160zM8.1 192H131.2c-2.1 20.6-3.2 42-3.2 64s1.1 43.4 3.2 64H8.1C2.8 299.5 0 278.1 0 256s2.8-43.5 8.1-64zM194.7 446.6c-11.6-26-20.9-58.2-27-94.6H344.3c-6.1 36.4-15.5 68.6-27 94.6c-10.5 23.6-22.2 40.7-33.5 51.5C272.6 508.8 263.3 512 256 512s-16.6-3.2-27.8-13.8c-11.3-10.8-23-27.9-33.5-51.5zM135.3 352c10 63.9 29.8 117.4 55.3 151.6C112.2 482.9 48.6 426.1 18.6 352H135.3zm358.1 0c-30 74.1-93.6 130.9-171.9 151.6c25.5-34.2 45.2-87.7 55.3-151.6H493.4z"/></svg> [yanfei.site](https://yanfei.site) <svg aria-hidden="true" role="img" viewBox="0 0 640 512" style="height:1em;width:1.25em;vertical-align:-0.125em;margin-left:auto;margin-right:auto;font-size:inherit;fill:#1da1f2;overflow:visible;position:relative;"><path d="M72 88a56 56 0 1 1 112 0A56 56 0 1 1 72 88zM64 245.7C54 256.9 48 271.8 48 288s6 31.1 16 42.3V245.7zm144.4-49.3C178.7 222.7 160 261.2 160 304c0 34.3 12 65.8 32 90.5V416c0 17.7-14.3 32-32 32H96c-17.7 0-32-14.3-32-32V389.2C26.2 371.2 0 332.7 0 288c0-61.9 50.1-112 112-112h32c24 0 46.2 7.5 64.4 20.3zM448 416V394.5c20-24.7 32-56.2 32-90.5c0-42.8-18.7-81.3-48.4-107.7C449.8 183.5 472 176 496 176h32c61.9 0 112 50.1 112 112c0 44.7-26.2 83.2-64 101.2V416c0 17.7-14.3 32-32 32H480c-17.7 0-32-14.3-32-32zm8-328a56 56 0 1 1 112 0A56 56 0 1 1 456 88zM576 245.7v84.7c10-11.3 16-26.1 16-42.3s-6-31.1-16-42.3zM320 32a64 64 0 1 1 0 128 64 64 0 1 1 0-128zM240 304c0 16.2 6 31 16 42.3V261.7c-10 11.3-16 26.1-16 42.3zm144-42.3v84.7c10-11.3 16-26.1 16-42.3s-6-31.1-16-42.3zM448 304c0 44.7-26.2 83.2-64 101.2V448c0 17.7-14.3 32-32 32H288c-17.7 0-32-14.3-32-32V405.2c-37.8-18-64-56.5-64-101.2c0-61.9 50.1-112 112-112h32c61.9 0 112 50.1 112 112z"/></svg> [kllab.org](https://kllab.org) ]