Spark¶

Apache Spark is a multi-language engine for executing data engineering, data science, and machine learning on single-node machines or clusters.

- In 2009, Apache Spark began as a research project at UC Berkeley’s AMPLab to improve on MapReduce.

- The most widely-used engine for scalable computing: Thousands of companies, including 80% of the Fortune 500, use Apache Spark. Amost 2,000 contributors to the open source project from industry and academia.

Key features¶

Batch/streaming data: Unify the processing of your data in batches and real-time streaming, using your preferred language: Python, SQL, Scala, Java or R.

SQL analytics: Execute fast, distributed ANSI SQL queries for dashboarding and ad-hoc reporting. Runs faster than most data warehouses.

Data science at scale: Perform Exploratory Data Analysis (EDA) on petabyte-scale data without having to resort to downsampling.

Machine learning: Train machine learning algorithms on a laptop and use the same code to scale to fault-tolerant clusters of thousands of machines.

What is Spark¶

- Spark is a lightning-fast cluster computing technology, designed for fast computation.

- It is based on Hadoop MapReduce and it extends the MapReduce model to efficiently use it for more types of computations, which includes interactive queries and stream processing.

- The main feature of Spark is its in-memory cluster computing that increases the processing speed of an application. Spark also loaded data in-memory, making operations much faster than Hadoop’s on-disk storage.

- Spark is designed to cover a wide range of workloads such as batch applications, iterative algorithms, interactive queries and streaming.

Why Spark¶

Speed¶

- Run workloads 100x faster.

- Apache Spark achieves high performance for both batch and streaming data, using a state-of-the-art DAG scheduler, a query optimizer, and a physical execution engine.

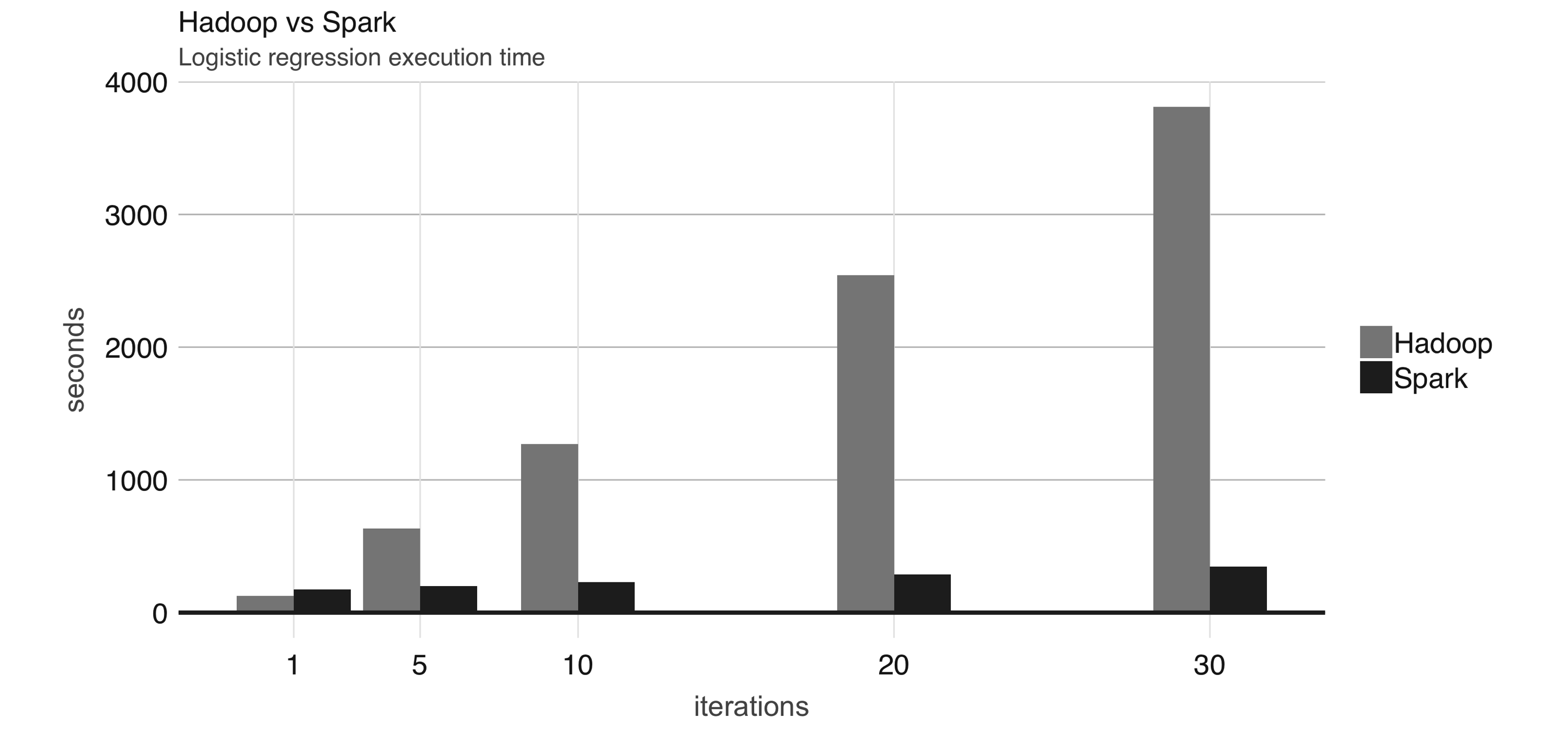

- One of the earliest results showed that running logistic regression allowed Spark to run 10 times faster than Hadoop by making use of in-memory datasets.

- To give you a sense of how much faster and efficient Spark is, it takes 72 minutes and 2,100 computers to sort 100 terabytes of data using Hadoop, but only 23 minutes and 206 computers using Spark.

Ease of Use¶

APIs in Java, Scala, Python, R, and SQL.

Spark is also easier to use than Hadoop; for instance, the word-counting MapReduce example takes about 50 lines of code in Hadoop, but it takes only 2 lines of code in Spark.

Spark supports a rich set of higher-level tools including Spark SQL for SQL and structured data processing, pandas API on Spark for pandas workloads, MLlib for machine learning, GraphX for graph processing, and Structured Streaming for incremental computation and stream processing.

As you can see, Spark is much faster, more efficient, and easier to use than Hadoop.

Runs Everywhere¶

Spark runs on Hadoop, Apache Mesos, Kubernetes, standalone, or in the cloud. It can access diverse data sources.

You can run Spark using its standalone cluster mode, on EC2, on Hadoop YARN, on Mesos, or on Kubernetes. Access data in HDFS, Alluxio, Apache Cassandra, Apache HBase, Apache Hive, and hundreds of other data sources.

Libraries of Spark¶

- Spark SQL is a module within Apache Spark that provides structured data processing capabilities through both SQL and a DataFrame API.

- Spark Streaming leverages Spark Core's fast scheduling capability to perform streaming analytics. It ingests data in mini-batches and performs RDD (Resilient Distributed Datasets) transformations on those mini-batches of data.

- MLlib fits into Spark's APIs and interoperates with NumPy in Python (as of Spark 0.9) and R libraries (as of Spark 1.5). You can use any Hadoop data source (e.g. HDFS, HBase, or local files), making it easy to plug into Hadoop workflows. MLlib contains many algorithms and utilities: classification, Clustering, Recommendation, Text mining, etc.

- GraphX a distributed graph-processing framework on top of Spark. It provides an API for expressing graph computation that can model the user-defined graphs by using Pregel abstraction API. It also provides an optimized runtime for this abstraction.

Spark RDD¶

- Resilient Distributed Datasets (RDD) is a fundamental data structure of Spark.

- It is an immutable distributed collection of objects.

- Each dataset in RDD is divided into logical partitions, which may be computed on different nodes of the cluster.

- RDDs can contain any type of Python, Java, or Scala objects, including user-defined classes.

- There are two ways to create RDDs − parallelizing an existing collection in your driver program, or referencing a dataset in an external storage system, such as a shared file system, HDFS, HBase, or any data source offering a Hadoop Input Format.

Data Sharing is Slow in MapReduce¶

- MapReduce is widely adopted for processing and generating large datasets with a parallel, distributed algorithm on a cluster. It allows users to write parallel computations, using a set of high-level operators, without having to worry about work distribution and fault tolerance.

- Unfortunately, in most current frameworks, the only way to reuse data between computations (Ex − between two MapReduce jobs) is to write it to an external stable storage system (Ex − HDFS). Although this framework provides numerous abstractions for accessing a cluster’s computational resources, users still want more.

- Both Iterative and Interactive applications require faster data sharing across parallel jobs. Data sharing is slow in MapReduce due to replication, serialization, and disk IO. Regarding storage system, most of the Hadoop applications, they spend more than 90% of the time doing HDFS read-write operations.

Data Sharing using Spark RDD¶

- Data sharing is slow in MapReduce due to replication, serialization, and disk IO. Most of the Hadoop applications, they spend more than 90% of the time doing HDFS read-write operations.

- Recognizing this problem, researchers developed a specialized framework called Apache Spark. The key idea of spark is Resilient Distributed Datasets (RDD); it supports in-memory processing computation. This means, it stores the state of memory as an object across the jobs and the object is sharable between those jobs. Data sharing in memory is 10 to 100 times faster than network and Disk.

Launching Applications with spark-submit¶

Once a user application is bundled, it can be launched using the bin/spark-submit script. This script takes care of setting up the classpath with Spark and its dependencies, and can support different cluster managers and deploy modes that Spark supports:

spark-submit \ --class <main-class> \ --master <master-url> \ --deploy-mode <deploy-mode> \ --conf <key>=<value> \ ... # other options <application-jar> \ [application-arguments]

Run Python application on a YARN cluster¶

PYSPARK_PYTHON=python3.10 spark-submit \

--master yarn \

--executor-memory 20G \

--num-executors 50 \

$SPARK_HOME/examples/src/main/python/pi.py \

1000Run Spark via Pyspark shell¶

It is also possible to launch the PySpark shell. Set

PYSPARK_PYTHONvariable to select the approperate Python when runningpysparkcommand:PYSPARK_PYTHON=python3.10 pyspark

Run spark interactively within Python¶

We will use Aliyun E-MapReduce Notebook

- You can login at https://signin.aliyun.com/buaa-sem.onaliyun.com/login.htm

- Username: student@buaa-sem.onaliyun.com

- Password: bigdata2024

- Search for "E-MapReduce"

- Go to Notebook

- Create a workspace using your full name

- Connect to an external cluster

!echo $SPARK_HOME

/opt/apps/SPARK3/spark3-current

spark, sc = %spark.get_session test

Setting default log level to "WARN". To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel). 24/04/15 03:54:56 WARN [Thread-4] NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable 24/04/15 03:54:58 WARN [Thread-4] DomainSocketFactory: The short-circuit local reads feature cannot be used because libhadoop cannot be loaded. 24/04/15 03:54:58 WARN [Thread-4] Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

%spark.stop_session

'success stop spark application application_1709108427864_0315'