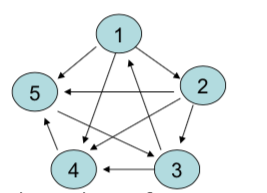

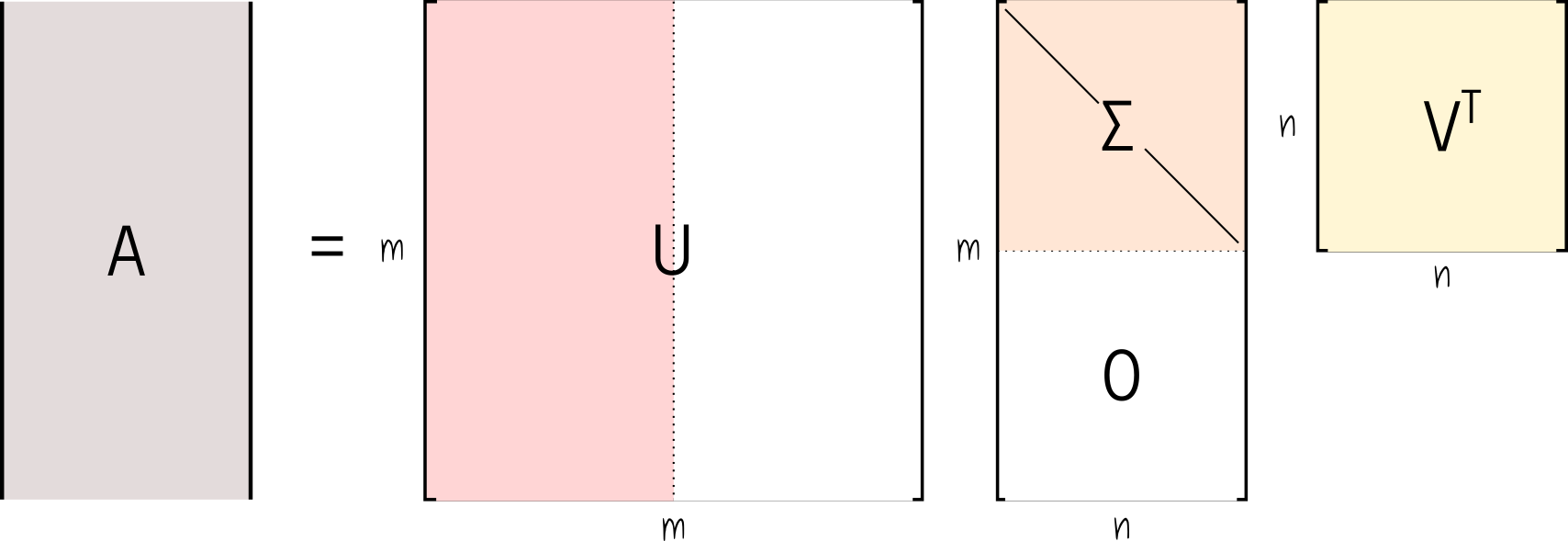

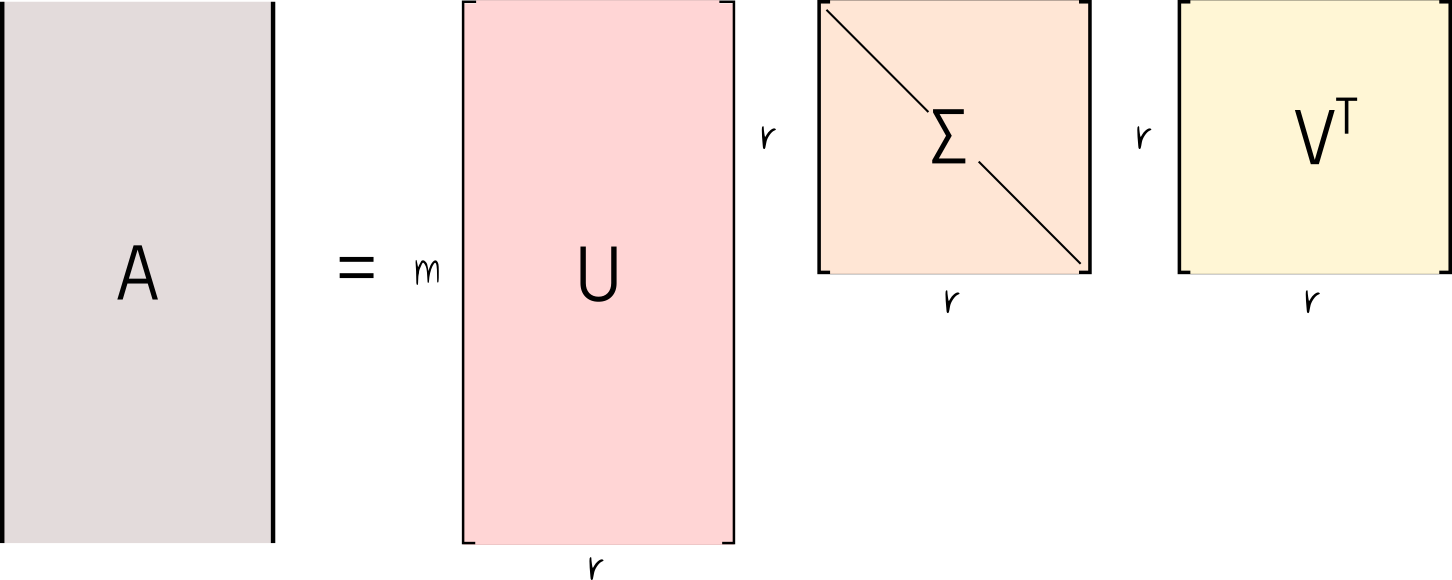

layout: true --- class: inverse, center, middle background-image: url(../figs/titlepage16-9.png) background-size: cover <br> <br> # Bayesian Statistics and Computing ## Lecture 15: Singular Value Decomposition <img src="../figs/slides.png" width="150px"/> #### *Yanfei Kang | BSC | Beihang University* --- # Why numerical linear algebra? - Difference between linear algebra and **applied** linear algebra. - Think about linear algebra in Statistics. - In curve fitting. - In image processing. - In signal processing. - etc. -- - **We need to know numerical techniques!** --- # What we will learn in this lecture - Eigenanalysis - SVD --- class: inverse, center, middle # Eigenanalysis --- # Eigenanalysis - In general, a matrix acts on a vector by changing both its magnitude and its direction. - However, a matrix may act on certain vectors by changing only their magnitude, and leaving their direction unchanged (or reversing it). - these vectors are the eigenvectors of the matrix - the scaling factor is the eigenvalue. <center> <img src="./figs/eigen.png" height="200px" /> </center> --- # Eigenanalysis - What if `\(\mathbf{A}\)` is not a square matrix? - How to find its eigenvalues and eigenvectors? - If `\(\mathbf{A}\)` is a real symmetric matrix - Only real eigenvalues - `\(n\)` distinct linearly independent eigenvectors - pairwise orthogonal - `\(\mathbf{A = Q\Lambda Q^T}\)` - When `\(A\)` is diagonalisable? - If a diagonalisation doesn't exist, there is always a triangularisation via Schur Decomposition: `\(\mathbf{A = QSQ^T}\)`. - Let us revisit together the properties of eigenvalues and eigenvectors. --- # What does eigenanalysis help to do? - Let's try to understand what `\(\mathbf{Ax} = \lambda \mathbf{x}\)` is really asking. - Can we find a pair of `\(\lambda\)` and `\(\mathbf{x}\)` such that when a matrix `\(\mathbf{A}\)` is applied to `\(\mathbf{x}\)`, it doesn't change the direction and just scales the vector? - If we can find such a pair, then everytime we do something with `\(\mathbf{Ax}\)` in some mathematical operation, we can replace it with `\(\lambda \mathbf{x}\)`. --- # What does eigenanalysis help to do? - Consider `\(\mathbf{A} = \left(\begin{array}{cc}5 & -1\\-2 & 4 \end{array}\right)\)` and `\(\mathbf{x} = \left(\begin{array}{c}1 \\-1 \end{array}\right)\)`. - What if we now want to calculate `\(\mathbf{A^{20}x}\)`? - What if we now want to calculate `\(\mathbf{A^{-1}x}\)`? --- # Advantages of eigenanalysis - It enables us to replace a matrix with a scalar when we have the opportunity to change our coordinate system to the eigenvectors of a matrix. - We can express any vector in terms of this new coordinate system. - We can use the fact that `\(\mathbf{Ax} = \lambda \mathbf{x}\)` to simplify calculations. --- # Application of Eigenanalysis: Google <center> <img src="./figs/google.png" height="500px" /> </center> --- # How to know page rank? - How does the search engine know which pages are the most important? - Google assigns a number to each individual page, expressing its importance. - This number is known as the PageRank and is computed via the eigenvalue problem `\(Aw = \lambda w\)`, where `\(A\)` is based on the link structure of the Internet. --- # Google's pagerank - Suppose we have a set of webpages `\(W\)`, with `\(|W|=n\)` as the number of webpages. - We define a connectivity (adjacency) matrix `\(A\)` as follows: `$$A_{i j}:=\left\{\begin{array}{ll} 1 / N_{i} & \text{if Page } i \text{ links to Page } j, \\ 0 & \text { otherwise.} \end{array}\right.$$` where `\(N_i\)` is the number of outlinks on Page `\(i\)`. - Typically `\(A\)` is huge (27.5 billion x 27.5 billion in 2011), but extremely sparse (lots of zero values) --- # A small example .pull-left[ Consider the small set of five webpages: <br>  ] -- .pull-right[ The connectivity matrix is `$$A =\left(\begin{array}{lllll} 0 & 1/3 & 0 & 1/3 & 1/3 \\ 0 & 0 & 1/3 & 1/3 & 1/3 \\ 1/2 & 0 & 0 & 1/2 & 0 \\ 0 & 0 & 0 & 0 & 1 \\ 0 & 0 & 1 & 0 & 0 \end{array}\right)$$` ] --- # A small example - The underlying idea for PageRank: a page is important, if other important pages link to it. - The ranking of page `\(i\)` is proportional to the weighted sum of the rankings of all pages that link to `\(i\)`: `\(r_4 = \frac{1}{3}r_1 + \frac{1}{3}r_2 + \frac{1}{2}r_3\)`. - So this is a system of `\(n = 5\)` linear equations (please write it out). - The ranking vector is treated like a probability of relevance, so we need to then rescale so that `\(\Sigma_{i=1}^n r_i = 1.\)` - Go to R and compute page ranks in this example. --- # What you need to realise for now - Finding the eigenvalues and eigenvectors of a massive matrix is computationally challenging though (don’t try to solve the characteristic polynomial!) - And you will learn numerical techniques later. --- # Further readings [Google's pagerank](https://yanfei.site/docs/bsc/pagerank.pdf). --- class: inverse, center, middle # Singular Value Decomposition (SVD) --- # Non-square matrices - Recall that if the matrix A is square (real or complex) then a diagonalisation may exist. - This is clearly very useful for easy calculation of many important problems as we saw last week. - If a diagonalisation doesn't exist, then there is always a triangularisation via Schur Decomposition. - But non-square matrices don’t have eigenvalues, so what can we do? - You are about to learn the most useful diagonal decomposition that works for all matrices: Singular Value Decomposition. --- # Singular values - Singular values are the square roots of the eigenvalues of `\(A^TA\)` which is square and symmetric. - `\(u\)` and `\(v\)` come in a pair for each singular value `\(\sigma\)`, such that `$$A v = \sigma u.$$` - `\(u\)` and `\(v\)` are eigenvectors of `\(AA^T\)` and `\(A^TA\)`, respectively. --- # Generalising Eigen-Decomposition - Eigendecomposition involves only one eigenvector for each eigenvalue (including multiplicities), stored in an orthogonal matrix `\(Q\)`, with eigenvalues on the diagonal of the matrix `\(\Lambda\)`, so that `\(A=Q\Lambda Q^T\)`. - We can generalise this now that we have singular vectors `\(u\)` and `\(v\)` for each singular value `\(\sigma\)`. --- # Singular Value Decomposition (SVD) For `\(A \in \mathcal{R}^{m \times n}\)`, there exists orthogonal matrices `$$U = [u_1, \cdots, u_m] \in \mathcal{R}^{m\times m}$$` and `$$V = [v_1, \cdots, v_n] \in \mathcal{R}^{n\times n}$$` such that `$$U^TAV = \Sigma = \text{diag}\{\sigma_1, \cdots, \sigma_p\} \in \mathcal{R}^{m\times n},$$` with `\(p = \min\{m, n\}\)` and `\(\sigma_1 \geq \dots \geq \sigma_p \geq 0\)`. Rearranging, we have `$$A = U\Sigma V^T$$`. --- # SVD  --- # SVD Try `svd()` in R. --- # Some properties of SVD - `\(\sigma_i\)` are singular values of `\(A\)`. - The non-zero singular values of `\(A\)` are the square roots of the non-zero eigenvalues of both `\(A^TA\)` and `\(AA^T\)`. - The rank of a matrix is equal to the number of non-zero singular values. - The condition number measures the degree of singularity of `\(A^TA\)`: `$$\kappa = \frac{\text{max singular value}}{\text{min singular value}}.$$` --- # Applications of SVD - In data compression, we start with a matrix A that contains perfect data, and we try to find a (lower-rank) approximation to the data that seeks to capture the principal elements of the data. - we sacrifice only the less important parts that don’t degrade data quality too much, in order to gain compression. - In noise filtering, we start with a matrix A that contains imperfect data, and we try to find a (lower-rank) approximation to the data that seeks to capture the principal elements of the data. - we give up only the less important parts that are typically noise. - So both these tasks are related, and rely on the SVD to find a suitable lower-ranked approximation to a matrix. --- class: inverse, center, middle # Matrix Approximation --- # Economy SVD The SVD of a matrix `\(A\)` in `\(\mathcal{R}_{m\times n}\)` decomposes the matrix into `$$A = U\Sigma V^T = \left(\begin{array}{cc}U_1 & U_2\end{array}\right) \left(\begin{array}{cc} D & \\& 0\end{array}\right) \left(\begin{array}{cc}V_1^T & V_2^T\end{array}\right) = U_1DV_1^T$$` where `\(D = \text{diag}\{\sigma_1, \cdots, \sigma_r\} \in \mathcal{R}^{r\times r}\)` with `\(\sigma_r > 0\)` for `\(r = \text{rank}(A)\)`. --- # Economy SVD  --- # Matrix Approximation - `\(A\)` can also be expressed as a sum of outer products, with each sum being a rank 1 matrix of dimension `\(m\times n\)` `$$A = \Sigma_{i = 1}^r \sigma_i u_i v_i^T = \sigma_1 u_1 v_1^T + \cdots + \sigma_r u_r v_r^T.$$` - We can truncate this sum when we feel that the singular values are so small that they are not contributing much. --- # Image Compression - Suppose we have a grayscale image (*128 x 128* pixels). - We can use a matrix to represent this image. - If we have a colour image, it has three matrices, with the same size as the image. Each matrix represents a color value that comprises the RGB color scale. - Each pixel is represented by an integer from 0-255. - Next, we can decompose the matrix by SVD. - By eliminating small singular values, we can approximate the matrix. - Choose the value of `\(k\)` for the low-rank approximation `\(A_k\)`. - Plotting the singular values might help identify where there is a noticeable drop in significance. --- # Reconstructing approximated image - Suppose we have chosen the value of `\(k\)` = number of singular values we wish to retain. - We can generate a new image matrix by expanding `\(A\)` using the SVD (first `\(k\)` singular values only). - If you want to use colour images, do it for R, G, B matrices separately and then reassemble. --- # Data compression .scroll-output[ ``` r library(jpeg) img <- readJPEG('./figs/svdimg.jpg') r <- img[,,1] g <- img[,,2] b <- img[,,3] img.r.svd <- svd(r) img.g.svd <- svd(g) img.b.svd <- svd(b) # Function to reconstruct the image with a limited number of singular values reconstruct_image <- function(svd_r, svd_g, svd_b, k) { # Reconstruct the individual color channels using the first k singular values r_reconstructed <- svd_r$u[, 1:k] %*% diag(svd_r$d[1:k]) %*% t(svd_r$v[, 1:k]) g_reconstructed <- svd_g$u[, 1:k] %*% diag(svd_g$d[1:k]) %*% t(svd_g$v[, 1:k]) b_reconstructed <- svd_b$u[, 1:k] %*% diag(svd_b$d[1:k]) %*% t(svd_b$v[, 1:k]) # Clip the values to be within the valid range [0, 1] r_reconstructed <- pmin(pmax(r_reconstructed, 0), 1) g_reconstructed <- pmin(pmax(g_reconstructed, 0), 1) b_reconstructed <- pmin(pmax(b_reconstructed, 0), 1) # Combine the channels back into a single image reconstructed_image <- array(0, dim = c(dim(r)[1], dim(r)[2], 3)) reconstructed_image[,,1] <- r_reconstructed reconstructed_image[,,2] <- g_reconstructed reconstructed_image[,,3] <- b_reconstructed return(reconstructed_image) } # Set the number of singular values to test singular_values <- seq(5,200,10) # Loop over different k values and plot the reconstructed images for (k in singular_values) { reconstructed <- reconstruct_image(img.r.svd, img.g.svd, img.b.svd, k) plot(1, type = "n", xlab = "", ylab = "", xlim = c(0, 1), ylim = c(0, 1), axes = FALSE, ann = FALSE, frame.plot = FALSE) rasterImage(reconstructed, 0, 0, 1, 1) title(paste("Reconstructed Image with", k, "Singular Values")) } ``` ] --- # Data compression <img src="figure/unnamed-chunk-1-.gif" width="504" style="display: block; margin: auto;" /> --- class: inverse, center, middle # Linear regression --- # Linear regression For a linear regression, `$$\mathbf{y} = \mathbf{X}\mathbf{\beta} + \mathbf{\varepsilon},~\mathbf{\epsilon}\sim N(0,\Sigma).$$` what is its OLS solution? --- # Numerical Issues - Numerically, there can be problems with solving for `\(\mathbf{\beta}\)` via the normal equations. - The inverse of `\(X^TX\)` might not exist - It is not computationally efficient to invert it for large dimensions - `\(X^TX\)` maybe ill-conditioned - The SVD can help us by providing a numerically stable pseudo-inverse, and the opportunity to identify (through the singular values) any ill-conditioning. --- # Pseudo-inverse Recall that the SVD of a matrix `\(A\)` in `\(\mathcal{R}_{m\times n}\)` decomposes the matrix into `$$A = U\Sigma V^T = \left(\begin{array}{cc}U_1 & U_2\end{array}\right) \left(\begin{array}{cc}D & \\& 0\end{array}\right) \left(\begin{array}{cc}V_1^T & V_2^T\end{array}\right) = U_1DV_1^T$$` where `\(D = \text{diag}\{\alpha_1, \cdots, \alpha_r\} \in \mathcal{R}^{r\times r}\)` with `\(\sigma_r > 0\)` for `\(r = \text{rank}(A)\)`. - The pseudo-inverse, or generalised inverse, of `\(A\)` can always be calculated, even if `\(A\)` is not invertible. `$$A^+ = V_1D^{-1}U_1^T$$` - `\(A^{+}\)` is `\(n \times m\)`. --- # Linear regression with SVD For `$$y = \mathbf{X}\mathbf{\beta} + \mathbf{\varepsilon},~\mathbf{\epsilon}\sim N(0,\Sigma),$$` the least square solution is `$$\beta = (X^TX)^{-1}X^Ty.$$` Substituting `\(X = U_1DV_1^T\)`, `$$\beta = X^{+}y = V_1D^{-1}U_1^T y = \Sigma_{i = 1}^r \frac{v_iu_i^Ty}{\sigma_i}.$$` A small singular value means trouble. A lower rank approximation should be more reliable. --- # Lets go back to our example in L0 - For example, here's a fake dataset of `\(n=5000\)` observations with `\(p=100\)` predictors. ``` r set.seed(2023-09-08) n <- 5000; p <- 100 X <- matrix(rnorm(n * p), n, p) y <- rnorm(n) ``` ``` r library(microbenchmark) microbenchmark(solve(t(X) %*% X) %*% t(X) %*% y, solve(crossprod(X), crossprod(X, y)), qr.coef(qr(X), y) ) #> Unit: milliseconds #> expr min lq mean median uq max neval #> solve(t(X) %*% X) %*% t(X) %*% y 65.75119 70.86994 80.80969 73.01452 77.33103 280.50736 100 #> solve(crossprod(X), crossprod(X, y)) 29.94246 31.34255 32.80172 32.09785 33.21004 52.39252 100 #> qr.coef(qr(X), y) 42.44848 45.33337 53.21804 47.92239 50.79790 255.11060 100 ``` --- # Colinearity ``` r W <- cbind(X, X[, 1] + rnorm(n, sd = 0.00000000000001)) solve(crossprod(W), crossprod(W, y)) #> Error in solve.default(crossprod(W), crossprod(W, y)): system is computationally singular: reciprocal condition number = 1.392e-16 ``` --- # We can use SVD and pseudo-inverse ``` r # Perform SVD on W svd_W <- svd(W) # Extract components from SVD U <- svd_W$u # m x m matrix D <- svd_W$d # Singular values (vector) V <- svd_W$v # n x n matrix # Define a threshold to filter out small singular values threshold <- 1e-10 Positive <- D > threshold # Calculate ginv W_ginv <- V[, Positive, drop = FALSE] %*% ((1/D[Positive]) * t(U[, Positive, drop = FALSE])) # You can also use # W_ginv <- MASS::ginv(W) ``` --- # We can use SVD and pseudo-inverse .scroll-output[ ``` r # Estimation W_ginv %*% y #> [,1] #> [1,] 0.0103524802 #> [2,] -0.0107798798 #> [3,] -0.0053346446 #> [4,] -0.0139042768 #> [5,] -0.0165625816 #> [6,] -0.0247368954 #> [7,] -0.0055936520 #> [8,] -0.0050670925 #> [9,] -0.0141895452 #> [10,] 0.0014650371 #> [11,] 0.0105871852 #> [12,] 0.0236855005 #> [13,] -0.0009123881 #> [14,] 0.0395699043 #> [15,] -0.0045459668 #> [16,] 0.0156426008 #> [17,] 0.0368986437 #> [18,] -0.0062241571 #> [19,] 0.0003719454 #> [20,] -0.0001045447 #> [21,] 0.0105437196 #> [22,] 0.0032069072 #> [23,] -0.0099112962 #> [24,] 0.0092091722 #> [25,] -0.0056283760 #> [26,] -0.0101836666 #> [27,] 0.0004409933 #> [28,] 0.0079016686 #> [29,] 0.0101626882 #> [30,] -0.0208409039 #> [31,] -0.0054924536 #> [32,] 0.0064271925 #> [33,] -0.0065590754 #> [34,] -0.0037863800 #> [35,] 0.0072126283 #> [36,] -0.0013686652 #> [37,] -0.0261588414 #> [38,] -0.0045260288 #> [39,] -0.0204600332 #> [40,] -0.0108791075 #> [41,] 0.0100509988 #> [42,] 0.0021846634 #> [43,] 0.0096076628 #> [44,] -0.0256744385 #> [45,] -0.0002072185 #> [46,] -0.0203092434 #> [47,] 0.0199490920 #> [48,] 0.0245456994 #> [49,] 0.0141368132 #> [50,] -0.0075821183 #> [51,] -0.0331999816 #> [52,] 0.0070728928 #> [53,] -0.0251681102 #> [54,] -0.0145501245 #> [55,] 0.0124959304 #> [56,] 0.0101724169 #> [57,] -0.0041669575 #> [58,] 0.0118368027 #> [59,] 0.0058702645 #> [60,] 0.0017322817 #> [61,] 0.0008573064 #> [62,] -0.0068535688 #> [63,] -0.0101274630 #> [64,] -0.0093643459 #> [65,] 0.0045194364 #> [66,] -0.0224489607 #> [67,] 0.0175468581 #> [68,] 0.0135508696 #> [69,] 0.0048772551 #> [70,] 0.0168445216 #> [71,] -0.0254587733 #> [72,] -0.0012700923 #> [73,] -0.0106302388 #> [74,] -0.0017762102 #> [75,] 0.0077490915 #> [76,] 0.0110904920 #> [77,] -0.0144777066 #> [78,] -0.0079745076 #> [79,] 0.0112005231 #> [80,] -0.0012697611 #> [81,] -0.0024046193 #> [82,] -0.0094265032 #> [83,] 0.0234028545 #> [84,] -0.0087827166 #> [85,] 0.0097232715 #> [86,] -0.0188332734 #> [87,] -0.0151177605 #> [88,] 0.0129435368 #> [89,] -0.0086064316 #> [90,] 0.0048073761 #> [91,] -0.0209634872 #> [92,] -0.0133873152 #> [93,] 0.0203494525 #> [94,] 0.0031885641 #> [95,] -0.0088269877 #> [96,] 0.0064179865 #> [97,] -0.0016902431 #> [98,] -0.0149829401 #> [99,] -0.0018118949 #> [100,] -0.0161594580 #> [101,] 0.0103524802 ``` ]