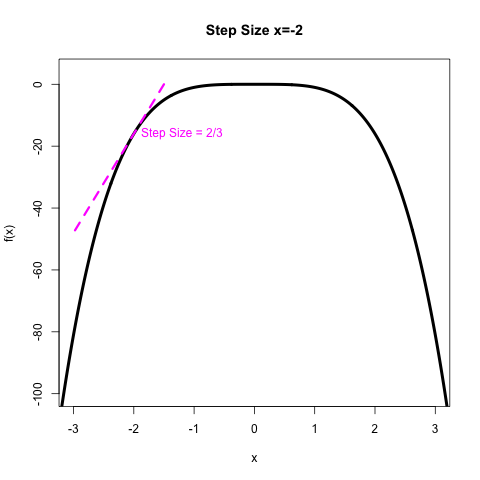

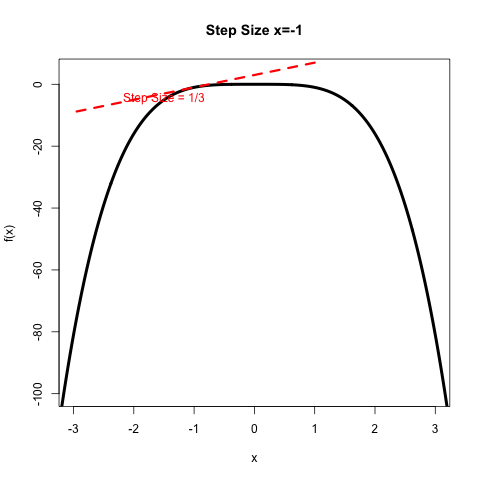

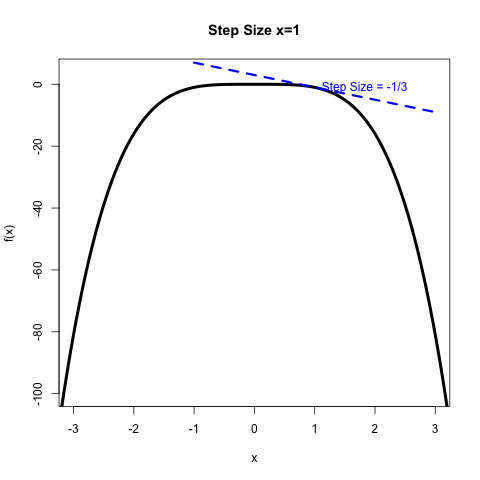

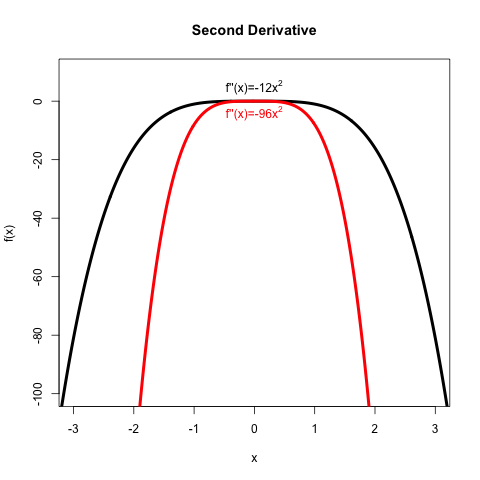

layout: true --- class: inverse, center, middle background-image: url(../figs/titlepage16-9.png) background-size: cover <br> <br> # Bayesian Statistics and Computing ## Lecture 7: Newton Method <img src="../figs/slides.png" width="150px"/> #### *Yanfei Kang | BSC | Beihang University* --- # Optimisation in Business Many problems in business require something to be minimized or maximized. - Maximizing Revenue - Minimizing Costs - Minimizing Delivery Time - Maximizing Financial Returns --- # Optimisation in Statistics - Maximum Likelihood - Least Squares - Method of Moments - Posterior Mode --- # Optimisation - Suppose we want to find a minimum or maximum of a function `\(f(x)\)`. - Sometimes `\(f(x)\)` will be very complicated. - Are there computer algorithms that can help? - Yes! --- class: inverse, center, middle # Root Finding --- # Root Finding - Consider the problem of finding the root of a function. - For the function `\(f(x)\)`, the root is the point `\(x^*\)` such that `\(f(x^*) = 0\)`. - If there is no closed form for `\(f^{-1}(x)\)` and `\(f\)` is continuous, the solution is effected by an iterative process. --- # Bisection method - The bisection algorithm is a simple method for finding the roots of one-dimensional functions. - The goal is to find a root `\(x^* \in [a, b]\)` such that `\(f(x^*) = 0\)`. - The algorithm starts with a large interval, known to contain `\(x^*\)` and then successively reduces the size of the interval until it brackets the root. --- # Bisection method - Set an initial interval `\([a,b]\)` such that `\(\text{sign}(f(a)) \neq \text{sign}(f(b))\)`. - Iterate until `\(|b-a| < \epsilon\)` or `\(|f(b) - f(a)|<\epsilon\)`. 1. Calculate `\(c = \frac{a+b}{2}\)`. 2. If `\(f(c) = 0\)`, stop and return `\(c\)`. 3. If `\(\text{sign}(f(a)) \neq \text{sign}(f(c))\)`, set `\(b \leftarrow c\)`; otherwise `\(a \leftarrow c\)`, and return to step 1. - Output `\(c\)`. --- # Animation <img src="figure/unnamed-chunk-1-.gif" width="504" style="display: block; margin: auto;" /> ``` #> [1] 4.470348e-08 ``` --- # Newton-Raphson Method - Newton-Raphson is an iterative method that begins with an initial guess of the root, proposed by Newton and Raphson nearly 500 years ago. - The method uses the derivative of the function `\(f^{'}(x)\)` as well as the original function `\(f(x)\)`. - When successful, it converges (usually) rapidly, but may fail as any other root-finding algorithm. --- # Newton-Raphson Method The method tries to solve an equation in the form of `\(f(x) = 0\)` through the iterative procedure: `$$x_{n+1} = x_n - \frac{f(x_n)}{f^{'}(x_n)}.$$` Please think about **Taylor expansion**. --- # Geometric interpretation <center> <img src="newton.gif" width="500" height="500" /> </center> --- # Stopping Rule - With each step the algorithm should get closer to the root. - However, it can run for a long time without reaching the exact root. - There must be a stopping rule otherwise the program could run forever. - Let `\(\epsilon\)` be an extremely small number e.g. `\(1 \times 10^{-10}\)` called the **tolerance level**. - If `\(|f(x^{*})| < \epsilon\)` then the solution is close enough and there is a root at `\(x^{*}\)`. --- # Example - Now find the root of `\(f(x) = x^2 - 5\)` using Newton method by hand. - Tell about its geometric interpretation. --- # Newton-Raphson Method 1. Select an initial guess `\(x_0\)`, and set `\(n = 0\)`. 2. Set `\(x_{n+1} = x_n - \frac{f(x_n)}{f^{'}(x_n)}\)`. 3. Evaluate `\(|f(x_{n+1})|\)`. 1. If `\(|f(x_{n+1})| < \epsilon\)`, then stop; 2. Otherwise set `\(n=n+1\)` and go back to step 2. --- # Convergence rate Suppose we have a sequence `\(\{x_n\}\)`, - Linear convergence: there exists `\(r \in (0,1)\)` such that `$$\frac{\|x_{n+1}-x_\infty\|}{\|x_n-x_\infty\|}\leq r.$$` - Superlinear convergence: `$$\lim_{n\rightarrow\infty}\frac{\|x_{n+1}-x_\infty\|}{\|x_n-x_\infty\|}=0.$$` - Quadratic Convergence: there exists some constant `\(M >0\)` such that `$$\frac{\|x_{n+1}-x_\infty\|}{\|x_n-x_\infty\|^2}\leq M.$$` --- # Convergence rate: Bisection - For Bisection, the error that we make in estimating the root is `\(x_n = |b_n - a_n|\)`. - `\(x_n = 2^{-n}|b_0 - a_0|\)`. - The convergence rate for Bisection method is `$$\frac{\|x_{n+1}-x_\infty\|}{\|x_n-x_\infty\|}=\frac{x_{n+1}}{x_n}= \frac{2^{-(n+1)}(b_0-a_0)}{2^{-n}(b_0-a_0)}= \frac{1}{2}.$$` - The error of the bisection algorithm converges linearly to 0. --- # Convergence rate: Newton-Raphson - Let `\(f(x)\)` have a root at `\(\alpha\)`. The Taylor approximation states that `$$f(\alpha)\approx f(x_n)+f'(x_n)(\alpha-x_n)+\frac{1}{2}f''(x_n)(\alpha-x_n)^2.$$` - The quality of the approximation depends on the function and how close `\(x_n\)` is to `\(\alpha\)`. - Since `\(\alpha\)` is a root, `\(f(\alpha)=0\)` This implies `$$0\approx f(x_n)+f'(x_n)(\alpha-x_n)+\frac{1}{2}f''(x_n)(\alpha-x_n)^2.$$` - Dividing by `\(f'(x_n)\)` and rearranging gives: `$$\frac{f(x_n)}{f'(x_n)}+(\alpha-x_n)\approx\frac{-f''(x_n)}{2f'(x_n)}(\alpha-x_n)^2.$$` --- # Convergence rate: Newton-Raphson - More rearranging `$$\alpha-\left(x_n-\frac{f(x_n)}{f'(x_n)}\right)\approx\frac{-f''(x_n)}{2f'(x_n)}(\alpha-x_n)^2.$$` - The term in brackets on the left hand side is the formula used to update `\(x\)` in the Newton Raphson method `$$(\alpha-x_{n+1})\approx\frac{-f''(x_n)}{2f'(x_n)}(\alpha-x_n)^2.$$` - This can be rewritten in terms of errors `\(e_{n+1}=\alpha-x_{n+1}\)` and `\(e_n=\alpha-x_n\)` `$$e_{n+1}\approx\frac{-f''(x_n)}{2f'(x_n)}e_n^2.$$` --- # Convergence rate: Newton-Raphson - Newton method is of quadratic convergence. - It is very fast in the neighborhood of the root. - We need to calculate the derivative (more information of `\(f\)` provided). - Sometimes swing wildly out of control and diverge. --- # Lab 1. Write R code to find the root of `\(x^2 = 5\)`. 2. Now use your Newton-Raphson code to find the root of `\(f(x) = \sqrt{|x|}\)`. Try initial value 0.25. 3. Now use your Newton-Raphson code to find the root of `\(x e^{-x^2} = 0.4(e^x + 1)^{-1} + 0.2\)`. Try initial values 0.5 and 0.6. 4. `\(f(x) = x^3 - 2x^2 - 11x + 12\)`, try starting values 2.35287527 and 2.35284172. 5. `\(f(x) = 2x^3 + 3x^2 + 5\)`, try starting values 0.5 and 0. What did you learn from mistakes? --- # Lab .scroll-output[ ```r # ===================================================== # Newton–Raphson using deriv() for automatic derivative # ===================================================== newton_raphson <- function(expr, x0, tol = 1e-8, max_iter = 100, verbose = TRUE) { # expr should be an expression, e.g. expression(x^2 - 5) var <- all.vars(expr) if (length(var) != 1) stop("Only single-variable functions supported.") # Create a function that returns both value and gradient f_deriv <- deriv(expr, var) # Initialize x <- x0 for (i in 1:max_iter) { # Evaluate f and f' val <- eval(f_deriv, envir = list(x = x)) fx <- as.numeric(val) fpx <- attr(val, "gradient") # Newton–Raphson update if (abs(fpx) < 1e-12) { warning("Derivative too close to zero. Stopping.") break } x_new <- x - fx / fpx if (verbose) cat(sprintf("Iter %d: x = %.8f, f(x) = %.8f\n", i, x_new, fx)) # Check convergence if (abs(x_new - x) < tol) { if (verbose) cat("Converged!\n") return(x_new) } x <- x_new } warning("Did not converge within max_iter.") return(x) } ``` ] --- # Lab ```r root <- newton_raphson(expression(x^2 - 5), x0 = 1) #> Iter 1: x = 3.00000000, f(x) = -4.00000000 #> Iter 2: x = 2.33333333, f(x) = 4.00000000 #> Iter 3: x = 2.23809524, f(x) = 0.44444444 #> Iter 4: x = 2.23606890, f(x) = 0.00907029 #> Iter 5: x = 2.23606798, f(x) = 0.00000411 #> Iter 6: x = 2.23606798, f(x) = 0.00000000 #> Converged! cat("Root found:", root, "\n") #> Root found: 2.236068 cat("True sqrt(5):", sqrt(5), "\n") #> True sqrt(5): 2.236068 ``` Now your turn to work on other functions. --- # Learn from Mistakes - Newton-Raphson does not always converge. - Initial guess matters. - Different starting values may converge to different roots. - Newton-Raphson doesn’t work if the first derivative is zero. - If the function is not continuously differentiable in a neighborhood of the root, it is possible that Newton's method will always diverge or fail. - Newton's method requires calculating the derivative. - Newton’s method requires some intelligence. --- # Conclusion - Why did we spend so much time on finding roots of an equation? - Isn’t this topic meant to be about optimization? - Can we change the algorithm slightly so that it works for optimization? --- class: inverse, center, middle # Optimisation --- # Finding a Maximum or Minimum - Suppose we want to find an minimum or maximum of a function `\(f(x)\)` (think about maximum likelihood estimation). - Find the derivative `\(f^{'}(x)\)` and find `\(x^{*}\)` such that `\(f^{'}(x^*) = 0\)`. - This is the same as finding a root of the first derivative. We can use the Newton Raphson algorithm on the first derivative. --- # Newton Raphson algorithm for finding local maxima or minima 1. Select an initial guess `\(x_0\)`, and set `\(n = 0\)`. 2. Set `\(x_{n+1} = x_n - \frac{f^{'}(x_n)}{f^{''}(x_n)}\)`. 3. Evaluate `\(|f^{'}(x_{n+1})|\)`. 1. If `\(|f^{'}(x_{n+1})| < \epsilon\)`, then stop; 2. Otherwise set `\(n=n+1\)` and go back to step 2. --- # Different Stopping Rules Three stopping rules can be used. - `\(|f^{'}(x_{n+1})| < \epsilon\)`. - `\(|x_{n} - x_{n-1}| < \epsilon\)`. - `\(|f(x_{n}) - f(x_{n-1})| < \epsilon\)`. --- # Intuition - Focus the step size `\(-\frac{f'(x)}{f''(x)}\)`. - The signs of the derivatives control the direction of the next step. - The size of the derivatives control the size of the next step. - Consider the concave function `\(f(x)=-x^4\)` which has `\(f'(x)=-4x^3\)` and `\(f''(x)=-12x^2\)`. There is a maximum at `\(x^{*}=0\)`. --- # Role of first derivative <center>  --- # Role of first derivative <center>  --- # Role of first derivative <center>  --- # Role of first derivative - If `\(f''(x)\)` is negative the function is locally concave, and the search is for a local maximum. - To the left of this maximum `\(f'(x)>0\)`. - Therefore `\(-\frac{f'(x)}{f''(x)}>0\)`. - The next step is to the right. - The reverse holds if `\(f'(x)<0\)`. - Large absolute values of `\(f'(x)\)` imply a steep slope. A big step is needed to get close to the optimum. The reverse hold for small absolute value of `\(f'(x)\)`. --- # Role of first derivative - If `\(f''(x)\)` is positive the function is locally convex, and the search is for a local minimum. - To the left of this maximum `\(f'(x)<0\)`. - Therefore `\(-\frac{f'(x)}{f''(x)}>0\)`. - The next step is to the right. - The reverse holds if `\(f'(x)>0\)`. - Large absolute values of `\(f'(x)\)` imply a steep slope. A big step is needed to get close to the optimum. The reverse hold for small absolute value of `\(f'(x)\)`. --- # Role of second derivative <center>  --- # Role of second derivative - Together with the sign of the first derivative, the sign of the second derivative controls the direction of the next step. - A larger second derivative (in absolute value) implies a larger curvature. - In this case smaller steps are need to stop the algorithm from overshooting. - The opposite holds for a small second derivative. --- # Lab - continuted Based on your root-finding function, write a function to find the maximum of `\(f(x) = -x^4\)`. --- class: inverse, center, middle # Multidimensional Optimization --- # Functions with more than one input - Most interesting optimization problems involve multiple inputs. - In determining the most risk efficient portfolio the return is a function of many weights (one for each asset). - In least squares estimation for a linear regression model, the sum of squares is a function of many coefficients (one for each regressor). - How do we optimize for functions `\(f({x})\)` where `\({x}\)` is a vector? --- # Derviatives - Newton's algorithm has a simple update rule based on first and second derivatives. - What do these derivatives look like when the function is `\(y=f({x})\)` where `\(y\)` is a scalar and `\(\mathbf{x}\)` is a `\(d\times 1\)` vector? --- # First derivative Simply take the partial derivatives and put them in a vector `$$\frac{\partial y}{\partial{\mathbf{x}}}= \left( \begin{array}{c} \frac{\partial y}{\partial x_1}\\ \frac{\partial y}{\partial x_2}\\ \vdots\\ \frac{\partial y}{\partial x_d} \end{array} \right).$$` This is called the gradient vector. --- # An example The function `\(y=x_1^2-x_1x_2+x_2^2+e^{x_2}\)` has gradient vector `$$\frac{\partial y}{\partial \mathbf{x}}=\left(\begin{array}{c}2 x_{1}-x_{2} \\ -x_{1}+2 x_{2}+e^{x_{2}}\end{array}\right).$$` --- # Second derivative Simply take the second order partial derivatives. This will give a matrix `$$\frac{\partial^2 y}{\partial \mathbf{x} \partial \mathbf{x}^{\prime}}=\left(\begin{array}{cccc}\frac{\partial^{2} y}{\partial x_{1}^{2}} & \frac{\partial^{2} y}{\partial x_{1} \partial x_{2}} & \cdots & \frac{\partial^{2} y}{\partial x_{1} \partial x_{d}} \\ \frac{\partial^{2} y}{\partial x_{2} \partial x_{1}} & \frac{\partial^{2} y}{\partial x_{2}^{2}} & \cdots & \frac{\partial^{2} y}{\partial x_{2} \partial x_{d}} \\ \vdots & \vdots & \ddots & \vdots \\ \frac{\partial^{2} y}{\partial x_{d} \partial x_{1}} & \frac{\partial^{2} y}{\partial x_{d} \partial x_{2}} & \cdots & \frac{\partial^{2} y}{\partial x_{d}^{2}}\end{array}\right).$$` This is called the Hessian matrix. --- # An example The function `\(y=x_1^2-x_1x_2+x_2^2+e^{x_2}\)` has Hessian matrix `$$\frac{\partial^2 y}{\partial \mathbf{x} \partial \mathbf{x}^{\prime}}=\left(\begin{array}{cc}2 & -1 \\ -1 & 2+e^{x_{2}}\end{array}\right).$$` --- # Newton’s algorithm for multidimensional optimization We can now generalise the update step in Newton's method: `$$\mathbf{x_{n+1}}=\mathbf{x_n}-\left(\frac{\partial^2 f({ \mathbf{x}})}{\partial {\mathbf{x}}\partial{\mathbf{x}}'}\right)^{-1} \frac{\partial f({\mathbf{x}})}{\partial {\mathbf{x}}}.$$` --- # Lab Now write code to minimise `\(y=x_1^2-x_1x_2+x_2^2+e^{x_2}\)`. --- class: inverse, center, middle # Maximum likelihood estimate for linear models --- # Maximum likelihood estimate for linear models Assume you want to make a regression model `$$y_i = \beta_0 + \beta_1x_{i} + \epsilon_i,~\text{where}~\epsilon_i \sim N(0, 1).$$` - How do we estimate the parameters? - Write down the log likelihood function with respect to the unknown parameters. - Write down the gradient for the log likelihood function. - Write down the Hessian for the log likelihood function. - Use your newton function to obtain the best parameter estimate. --- # Optimizing Using Newton's Method ```r ## Generate some data beta0 <- 1 beta1 <- 3 sigma <- 1 n <- 1000 x <- rnorm(n, 3, 1) y <- beta0 + x * beta1 + rnorm(n, mean = 0, sd = sigma) ## Make the log normal likelihood function func = function(beta){ sum((y-beta[1]-beta[2]*x)^2) } grad = function(beta){ matrix(c(sum(-2*(y-beta[1]-beta[2]*x)),sum(-2*x*(y-beta[1]-beta[2]*x))),2,1) } hess = function(beta){ matrix(c(2*length(x),2*sum(x),2*sum(x),2*sum(x^2)),2,2) } ``` --- # Optimizing Using Newton's Method ```r ## The optimization source('./RCode/newton.R') ## Newton optimization optimOut <- newton(function(beta){list(func(beta), grad(beta), hess(beta))}, c(-4, -5)) optimOut #> [,1] #> [1,] 1.035041 #> [2,] 2.993297 beta0Hat <- optimOut[1,] beta1Hat <- optimOut[2,] ``` --- # Comparison with `lm()` .pull-left[ ```r myLM <- lm(y~x) myLMCoef <- myLM$coefficients coefs <- data.frame(Method = c('Newton', 'LM'), intercept = c(beta0Hat, myLMCoef[1]), slope = c(beta1Hat, myLMCoef[2])) coefs #> Method intercept slope #> Newton 1.035041 2.993297 #> (Intercept) LM 1.035041 2.993297 ``` ```r library(ggplot2) qplot(x, y) + geom_abline(aes(slope = myLMCoef[2], intercept = myLMCoef[1], color = 'LM'), size = 3) + geom_abline(aes(slope = beta1Hat, intercept = beta0Hat, color='Newton'), linetype="dashed", size = 2) + labs(color = "Method") ``` ] .pull-right[ <img src="figure/comp-ols-plot-1.png" width="504" style="display: block; margin: auto;" /> ] --- # Newton method in R - The `nlm()` function in R implements Newton’s method for minimizing a function given a vector of starting values. - Re-do the linear regression example using newton method using `nlm()`in R. ```r nlm(func, c(-4, 5))$estimate #> [1] 1.035079 2.993284 ``` --- # References - Chapter 1 of the [advanced statistical computing](https://bookdown.org/rdpeng/advstatcomp/steepest-descent.html) book by Roger Peng. - Chapter 2 of the online Chinese textbook. - Chapters 10 and 12 of the book "Introduction to Scientific Programming and Simulation Using R".