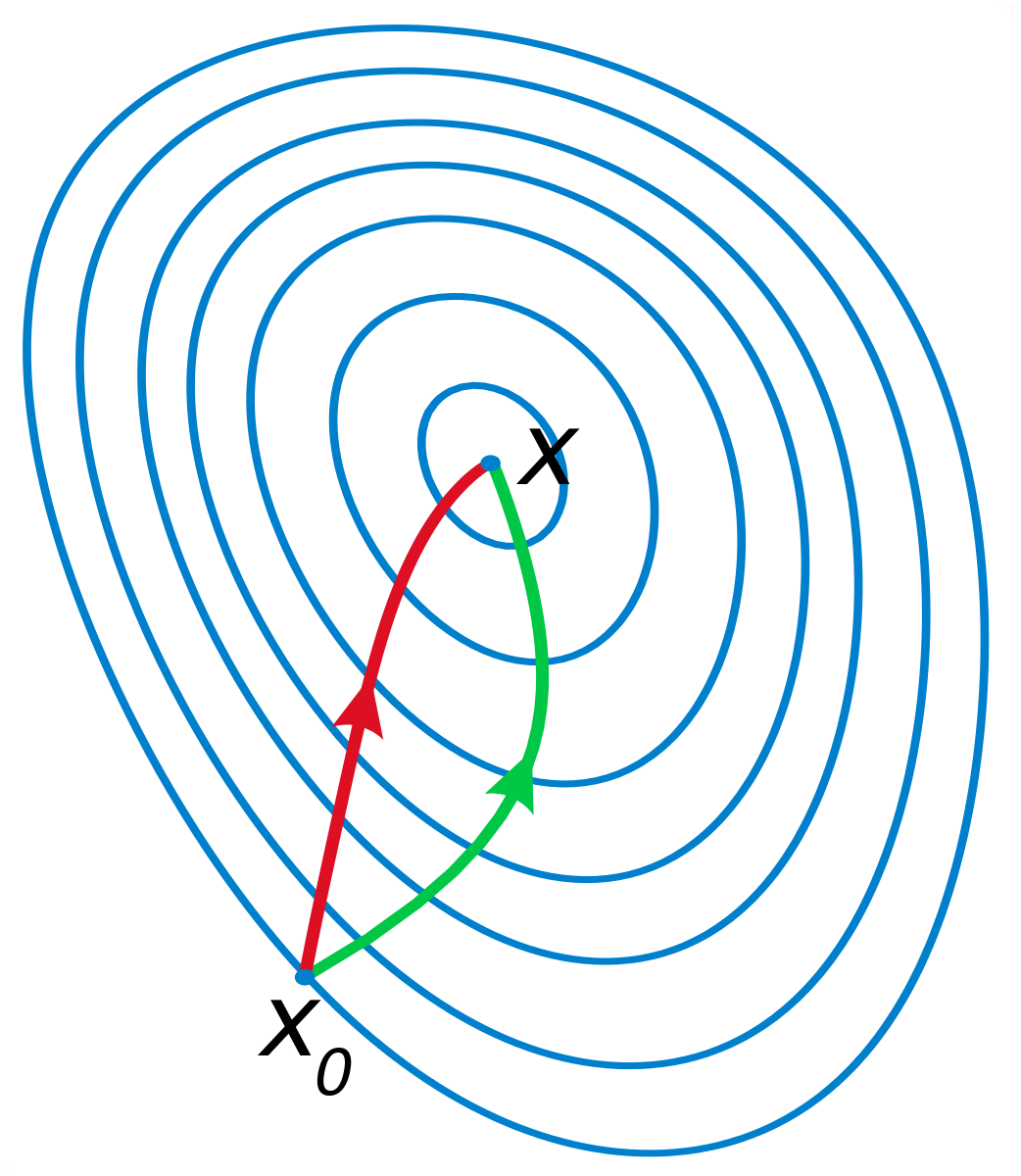

class: left, bottom, inverse, title-slide # Bayesian Statistics and Computing ## Lecture 10: Stochastic Gradient Descent ### Yanfei Kang ### 2020/03/10 (updated: 2020-03-25) --- # Stochastic gradient descent - Popular for training a wide range of models in machine learning. - Optimization is a big part of machine learning. - The goal of all supervised machine learning algorithms is to best estimate a target function that maps input data `\(X\)` onto output variables `\(y\)`. - Require a process of optimization to find the set of coefficients that result in the best estimate of the target function. - Cost function. --- class: inverse, center, middle # Gradient descent --- # Gradient descent - Gradient descent (steepest descent) is an iterative algorithm for optimization. - The most obvious direction to choose when attempting to minimize a function `\(f\)` starting at `\(x_n\)` is the direction of steepest descent, or `\(-f^{\prime}(x_n)\)`. --- # Gradient descent: 1-d function <img src="BSC-L10-sgd_files/figure-html/unnamed-chunk-1-1.png" style="display: block; margin: auto;" /> --- # Gradient descent: 2-d function <iframe src="p.html" width="100%" height="600" scrolling="yes" seamless="seamless" frameBorder="0"></iframe> --- # Gradient descent: 2-d function <img src="BSC-L10-sgd_files/figure-html/unnamed-chunk-3-1.png" style="display: block; margin: auto;" /> --- # Gradient descent algorithm Suppose we want to minimize `\(f\left(\mathbf{x}_{n}\right)\)`, where `\(f(\cdot)\)` is a function with continuous partial derivatives everywhere. Gradient descent algorithm does the following iteration. `$$\mathbf{x}_{n+1}=\mathbf{x}_{n}-\alpha \nabla f\left(\mathbf{x}_{n}\right),~\mathrm{where}~ \alpha > 0.$$` 1. Please think about the role of `\(\alpha\)`. 2. Gradient descent algorithm and Newton method? --- # Gradient descent algorithm and Newton method .pull-left[  ] .pull-right[ - A comparison of gradient descent (green) and Newton's method (red). - Newton's method uses curvature information (i.e. the second derivative) to take a more direct route. ] --- # Gradient descent algorithm - For initial value `\(\mathbf{x}_0\)`, we have `\(f(\mathbf{x}_0) \geq f(\mathbf{x}_1) \geq f(\mathbf{x}_2) \geq \cdots\)`. - Upon convergence, `\(\{\mathbf{x}_n\}\)` converges to the local min of `\(f(\mathbf{x})\)`. We can use `\(f(\mathbf{x}_{n+1})- f(\mathbf{x}_n) \leq \epsilon\)` as the stopping rule. --- # Gradient descent algorithm in R .scroll-output[ ```r f <- function(x, y) { x^2 + y^2 } dx <- function(x, y) { 2 * x } dy <- function(x, y) { 2 * y } num_iter <- 10 learning_rate <- 0.2 x_val <- 6 y_val <- 6 updates_x <- c(x_val, rep(NA, num_iter)) updates_y <- c(y_val, rep(NA, num_iter)) updates_z <- c(f(x_val, y_val), rep(NA, num_iter)) # iterate for (i in 1:num_iter) { dx_val = dx(x_val, y_val) dy_val = dy(x_val, y_val) x_val <- x_val - learning_rate * dx_val y_val <- y_val - learning_rate * dy_val z_val <- f(x_val, y_val) updates_x[i + 1] <- x_val updates_y[i + 1] <- y_val updates_z[i + 1] <- z_val } ``` ] --- # Gradient descent algorithm in R .pull-left[ ```r ## plotting m <- data.frame(x = updates_x, y = updates_y) x <- seq(-6, 6, length = 100) y <- x g <- expand.grid(x, y) z <- f(g[, 1], g[, 2]) f_long <- data.frame(x = g[, 1], y = g[, 2], z = z) library(ggplot2) ggplot(f_long, aes(x, y, z = z)) + geom_contour_filled(aes(fill = stat(level)), bins = 50) + guides(fill = FALSE) + geom_path(data = m, aes(x, y, z = 0), col = 2, arrow = arrow()) + geom_point(data = m, aes(x, y, z = 0), size = 3, col = 2) + xlab(expression(x[1])) + ylab(expression(x[2])) + ggtitle(parse(text = paste0("~ f(x[1],x[2]) == ~ x[1]^2 + x[2]^2"))) ``` ] .pull-right[ <img src="BSC-L10-sgd_files/figure-html/unnamed-chunk-5-1.png" style="display: block; margin: auto;" /> ] --- # High correlation between variables <img src="BSC-L10-sgd_files/figure-html/unnamed-chunk-6-1.png" style="display: block; margin: auto;" /> --- # Gradient descent for linear regression - Consider simple linear regression `$$y = \beta_0 + \beta_1x + \epsilon,~\mathrm{where}~\epsilon\sim N(0,1).$$` - Write out cost function. - Calculate gradient function. - How about multiple linear regression? --- # Gradient descent for linear regression in R .scroll-output[ ```r graddesc.lm <- function(X, y, beta.init, alpha = 0.1, tol = 1e-09, max.iter = 100) { beta.old <- beta.init J <- betas <- list() betas[[1]] <- beta.old J[[1]] <- lm.cost(X, y, beta.old) beta.new <- beta.old - alpha * lm.cost.grad(X, y, beta.old) betas[[2]] <- beta.new J[[2]] <- lm.cost(X, y, beta.new) iter <- 1 while ((abs(lm.cost(X, y, beta.new) - lm.cost(X, y, beta.old)) > tol) & (iter < max.iter)) { beta.old <- beta.new beta.new <- beta.old - alpha * lm.cost.grad(X, y, beta.old) iter <- iter + 1 betas[[iter + 2]] <- beta.new J[[iter + 2]] <- lm.cost(X, y, beta.new) } if (abs(lm.cost(X, y, beta.new) - lm.cost(X, y, beta.old)) > tol) { cat("Did not converge \n") } else { cat("Converged \n") cat("Iterated", iter, "times", "\n") cat("Coef: ", beta.new) return(list(coef = betas, cost = J)) } } ``` ] --- # Data generation ```r ## Generate some data beta0 <- 1 beta1 <- 3 sigma <- 1 n <- 10000 x <- rnorm(n, 3, 1) y <- beta0 + x * beta1 + rnorm(n, mean = 0, sd = sigma) X <- cbind(1, x) ``` --- # Exercise 1. Please write out the gradient function in the following code. 2. Run the code and compare with `lm()`. 3. Change the value of `\(\alpha\)` and see what happens? 4. Think about how to select `\(\alpha\)`. .scroll-output[ ```r ## Make the cost function lm.cost <- function(X, y, beta){ m <- length(y) loss <- sum((X%*%beta - y)^2)/(2*n) return(loss) } ## Calculate the gradient lm.cost.grad <- function(X, y, beta){ * ??? } gd.est <- graddesc.lm(X, y, beta.init = c(0,0), alpha = NULL, max.iter = 1000) ``` ] --- class: inverse, center, middle # Stochastic gradient descent (SGD) --- # Stochastic gradient descent (SGD) - Gradient descent can be slow to run on very large datasets. - One iteration of the gradient descent algorithm takes care of the entire dataset. - One iteration of the algorithm is called one batch and this form of gradient descent is referred to as **batch gradient descent**. - SGD updates parameters for each training sample. --- # SGD for linear regression 1. Shuffle all the training data randomly. 2. Repeat the following. - For each training sample `\(i = 1, \cdots, m\)`, `$$\beta_{n+1}= \beta_n - \alpha \nabla J(\beta_n)_i.$$` --- # Lab 8 1. Improve the R function `graddesc.lm()`, try to optimize `\(\alpha\)` in each step, instead of setting it to a constant. 2. Write a function in R for Stochastic Gradient Descent for linear regression, and test your function in the [Bodyfat](https://yanfei.site/docs/bsc/Bodyfat.csv) data. 3. Compare Gradient Descent, Stochastic Gradient Descent and Newton method. --- # Summary - GD and SGD. - When to use SGD? -- - Heaps of ML and DL methods use SGD to for training. - Simple answer: Use stochastic gradient descent when training time is the bottleneck. --- # References and further readings ### References - Section 3.1 of the [advanced statistical computing book](https://bookdown.org/rdpeng/advstatcomp/steepest-descent.html) by Roger Peng. ### Further readings - Stochastic Gradient Descent Tricks by Léon Bottou in Microsoft Research, Redmond, WA. Click [here](https://yanfei.site/docs/bsc/sgdMR.pdf) to download.