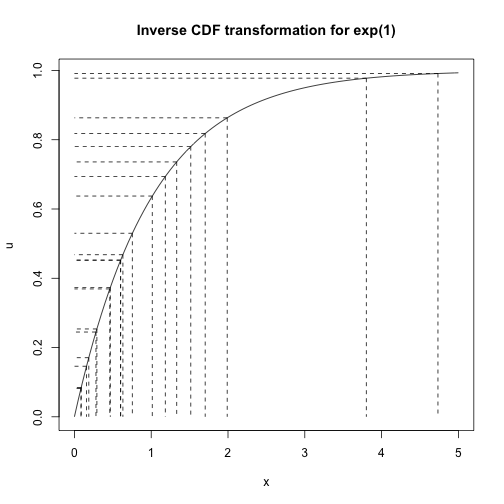

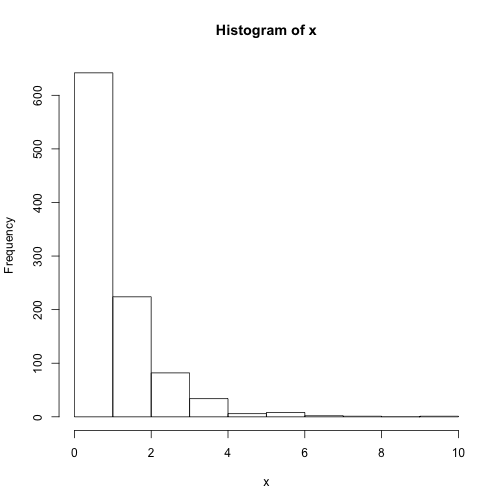

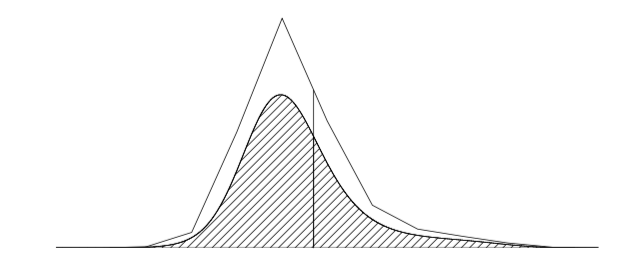

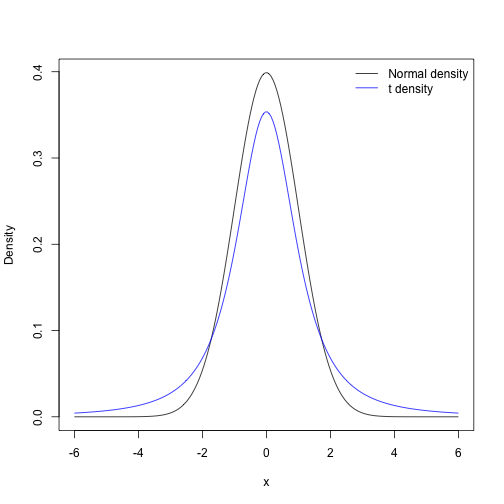

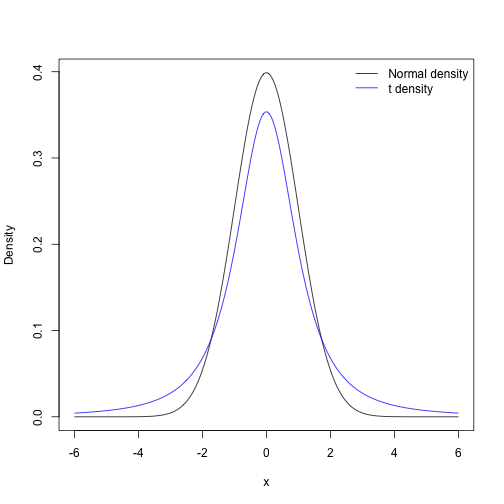

class: left, bottom, inverse, title-slide # Bayesian Statistics and Computing ## Lecture 13: Independent Monte Carlo Methods ### Yanfei Kang ### 2020/03/15 (updated: 2020-04-06) --- # Strategies to summarize posterior 1. If we have familiar distributions, we can simply **simulate** samples in R. **What if there isn’t a built in function for doing this?** 2. **Grid approximation**. - Compute values of the posterior on a grid of points. - Approximate the continuous posterior by a discrete posterior. - Applicable for one- and two-parameter problems. 3. If there are more parameters, we consider **deterministic tools**. - MAP - Bayesian CLT - Numerical Integration 4. **Monte Carlo** methods are more general. - Independent Monte Carlo - Markov Chain Monte Carlo --- class: inverse, center, middle # Direct sampling --- # Inverse CDF transformation - Suppose that we are given `\(U \sim U(0,1)\)` and want to simulate a continuous r.v. `\(X\)` with cdf `\(F_X\)`. Put `\(Y = F_{X}^{-1}(U)\)`, we have `$$F_{Y}(y)=\mathbb{P}(Y \leq y)=\mathbb{P}\left(F_{X}^{-1}(U) \leq y\right)=\mathbb{P}\left(U \leq F_{X}(y)\right)=F_{X}(y).$$` - For any continuous r.v. `\(X\)`, `\(F_{X}(X) \sim U(0,1)\)`. **Why?** ??? Define `\(Z=F_X(x)\)`, then `$$F_{Z}(x)=P\left(F_{X}(X) \leq x\right)=P\left(X \leq F_{X}^{-1}(x)\right)=F_{X}\left(F_{X}^{-1}(x)\right)=x.$$` --- # Example: exponential distribution - If `\(X \sim \exp(\lambda)\)`, then `$$F_{X}(x)=\left\{\begin{array}{ll}{0} & {\text { for } x<0} \\ {1-e^{-\lambda x}} & {\text { for } x \geq 0}\end{array}\right.$$` - How to work with inverse CDF transformation? --- # Example: exponential distribution .pull-left[ ```r lambda <- 1 u <- runif(20) x <- -1/lambda * log(1 - u) curve(1 - exp(-x), 0, 5, ylab = 'u', main = 'Inverse CDF transformation for exp(1)') for (i in 1:length(x)){ lines(c(x[i], x[i]), c(u[i], 0), lty = 'dashed') lines(c(x[i], 0), c(u[i], u[i]), lty = 'dashed') } ``` ] .pull-right[ <!-- --> ] --- # Example: exponential distribution .pull-left[ ```r # Direct sampling lambda <- 1 u <- runif(1000) x <- -1/lambda * log(1 - u) hist(x) ``` ] .pull-right[ <!-- --> ] --- class: inverse, center, middle # Rejection sampling --- # What if we can not write the inverse? ### Rejection sampling - Although we cannot easily sample from `\(f(\theta|y)\)`, there exists another density `\(g(\theta)\)` (called *proposal* density), like a Normal distribution or perhaps a `\(t\)`-distribution, from which it is easy for us to sample. - Then we can sample from `\(g(\theta)\)` directly and then "reject" the samples in a strategic way to make the resulting "non-rejected" samples look like they came from `\(f(\theta|y)\)`. --- # Rejection sampling 1. Sample `\(U \sim \operatorname{Unif}(0,1)\)`. 2. Sample `\(\theta\)` at random from the probability density proportional to `\(g(\theta)\)`. 3. If `\(U \leq \frac{f(\theta|y)}{M g(\theta)}\)`, accept `\(\theta\)` as a draw from `\(f\)`. If the drawn `\(\theta\)` is rejected, return to step 1. (With probability `\(\frac{f(\theta | y)}{M g(\theta)}\)`), accept `\(\theta\)`). The algorithm can be repeated until the desired number of samples from the target density `\(f\)` has been accepted. --- # Illustration .pull-left[  ] .pull-right[ - The top curve is an approximation function, `\(Mg(\theta)\)`, and the bottom curve is the target density, `\(f(\theta|y)\)`. - As required, `\(Mg(\theta) ≥ f(\theta|y)\)` for all `\(\theta\)`. - The vertical line indicates a single random draw `\(\theta\)` from the density proportional to `\(g\)`. - The probability that a sampled draw `\(\theta\)` is accepted is the ratio of the height of the lower curve to the height of the higher curve at the value `\(\theta\)`. ] --- # Example .pull-left[ Suppose we want to get samples from `\(\mathcal{N}(0,1)\)`, We could use the `\(t_2\)` distribution as our proposal density as it has heavier tails than the Normal. ```r curve(dnorm(x), -6, 6, xlab = "x", ylab = "Density", n = 200) curve(dt(x, 2), -6, 6, add = TRUE, col = 4, n = 200) legend("topright", c("Normal density", "t density"), col = c(1, 4), bty = "n", lty = 1) ``` ] .pull-right[ <!-- --> ] --- # Probability of `\(\theta\)` accepted `$$\begin{aligned} \mathbb{P}(\theta \text { accepted }) &=\mathbb{P}\left(U \leq \frac{f(\theta|y)}{M g(\theta)}\right) \\ &=\int \mathbb{P}\left(U \leq \frac{f(\theta|y)}{M g(\theta)} | \theta\right) g(\theta) d \theta \\ &=\int \frac{f(\theta|y)}{M g(\theta)} g(\theta) d \theta \\ &=\frac{1}{M} \end{aligned}$$` -- 1. This has implications for how we choose the proposal density `\(g\)`. 2. We want to choose `\(g\)` so that it matches `\(f\)` as closely as possible. --- # Back to the example .pull-left[ <!-- --> ] .pull-right[ `\(f/g\)` is maximized at `\(x=1\)`, so `\(M = 1.257\)`. Acceptance probability is about 0.8. ] --- ### Why rejection sampling work? It can be shown that the distribution function of the accepted values is equal to the distribution function corresponding to the target density. ### What is the problem of rejection sampling? --- # Rejection sampling - Suppose we are interested in `\(\mathbb{E}_{f}[h(\theta|y)]\)`, what do we do? - Note that in most cases, the candidates generated from `\(g\)` fall within the domain of `\(f\)`, so that they are in fact values that could plausibly come from `\(f\)`. - They are simply over- or under-represented in the frequency with which they appear. - For example, if `\(g\)` has heavier tails than `\(f\)`, then there will be too many extreme values generated from `\(g\)`. Rejection sampling simply thins out those extreme values to obtain the right proportion. - But what if we could take those rejected values and, instead of discarding them, simply downweight or upweight them in a specific way? --- # Lab 10 Use the rejection sampling algorithm to simulate from `\(N(0, 1)\)`. Try the following proposal densities. 1. Logistic Distribution. 2. Cauchy distribution. 3. Student `\(t_5\)` distribution. 4. `\(N(1,2)\)` distribution. For each proposal record the percentage of iterates that are accepted. Which is the best proposal? --- class: inverse, center, middle # Importance sampling --- # Importance sampling - We can rewrite the target `$$\mathbb{E}_{f}[h(\theta|y)]=\mathbb{E}_{g}\left[\frac{f(\theta|y)}{g(\theta)} h(\theta|y)\right].$$` - If `\(\theta_{1}, \dots, \theta_{n} \sim g\)`, we get `$$\tilde{\mu}_{n}=\frac{1}{n} \sum_{i=1}^{n} \frac{f\left(\theta_{i}|y\right)}{g\left(\theta_{i}\right)} h\left(\theta_{i}|y\right)=\frac{1}{n} \sum_{i=1}^{n} w_{i} h\left(\theta_{i}|y\right) \approx \mathbb{E}_{f}[h(\theta|y)].$$` - `\(w_i\)` are referred to as the importance weights. --- # Summary - Direct sampling - Rejection sampling - Importance sampling --- # References and further readings ### References - Chapter 10 of the BDA book by Gelman et.al. ### Further readings - Chapter 6 of Advanced Statistical Computing by Roger Peng.